Performance metrics

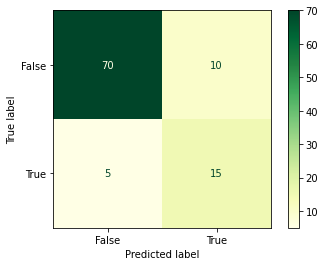

Confusion matrix

Note that the majority class is “No BBQ”.

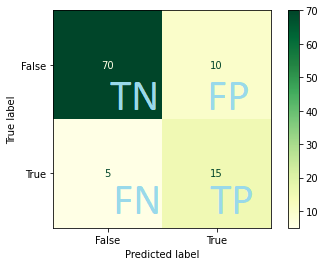

TP, TN, FP, FN

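- TN: True Negative (predicted “No BBQ”, and it was indeed a “No BBQ” day)

- FN: False Negative (predicted “No BBQ”, but it was actually a BBQ day)

- FP: False Positive (predicted “BBQ”, but it was actually a “No BBQ” day)

- TP: True Positive (predicted “BBQ”, and it was indeed a BBQ day)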
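Here is a minimal sketch of how the four counts can be tallied from lists of true and predicted labels. Python is our choice, since the notes contain no code. The labels are hypothetical, encoded as 1 = “BBQ” (positive) and 0 = “No BBQ” (negative). They are arranged only to reproduce the counts used in the calculations below (TP = 15, TN = 70, FP = 10, FN = 5).

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, TN, FP, FN) for a binary problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1  # predicted BBQ, was a BBQ day
        elif predicted == positive:
            fp += 1  # predicted BBQ, was a "No BBQ" day
        elif actual == positive:
            fn += 1  # predicted "No BBQ", was a BBQ day
        else:
            tn += 1  # predicted "No BBQ", was a "No BBQ" day
    return tp, tn, fp, fn

# Hypothetical labels for 100 days (1 = "BBQ", 0 = "No BBQ"), arranged to
# reproduce TP = 15, FN = 5, FP = 10, TN = 70.
y_true = [1] * 15 + [1] * 5 + [0] * 10 + [0] * 70
y_pred = [1] * 15 + [0] * 5 + [1] * 10 + [0] * 70

print(confusion_counts(y_true, y_pred))  # (15, 70, 10, 5)
```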

Accuracy

Accuracy is the rate of correct predictions: \[\text{acc} = \frac{TP + TN}{TP + TN + FN + FP}\]

In our case: \[\text{acc} = \frac{70 + 15}{100} = 0.85\]
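The same calculation as a small Python function. This is a sketch, and the function name `accuracy` is our own choice:

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + tn + fp + fn)

print(accuracy(tp=15, tn=70, fp=10, fn=5))  # 0.85
```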

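Note that this score gets skewed by the majority class! Because most days are “No BBQ” days, a model can reach a high accuracy by mostly predicting “No BBQ”, even if it rarely spots a BBQ day.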
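To make the skew concrete, consider a hypothetical baseline that always predicts the majority class “No BBQ”. With the counts above, there are TN + FP = 80 actual “No BBQ” days and TP + FN = 20 actual BBQ days. This baseline therefore reaches an accuracy of 0.80 without ever predicting a single BBQ day. A minimal sketch (the function name `majority_baseline` is hypothetical):

```python
def majority_baseline(tp, tn, fp, fn):
    """Accuracy and recall of a model that always predicts "No BBQ"."""
    actual_bbq = tp + fn      # days that truly were BBQ days
    actual_no_bbq = tn + fp   # days that truly were "No BBQ" days
    # Always predicting "No BBQ": every actual BBQ day becomes an FN,
    # every actual "No BBQ" day becomes a TN, and there are no TP or FP.
    base_tp, base_fp = 0, 0
    base_tn, base_fn = actual_no_bbq, actual_bbq
    acc = (base_tp + base_tn) / (base_tp + base_tn + base_fp + base_fn)
    recall = base_tp / (base_tp + base_fn)
    return acc, recall

print(majority_baseline(tp=15, tn=70, fp=10, fn=5))  # (0.8, 0.0)
```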

Precision & recall

From the perspective of the positive class (“BBQ”):

- Precision: of the days predicted as BBQ days, what fraction are truly days on which we can fire up our BBQ?

- Recall: of the true BBQ days, what fraction did the model predict as BBQ days?

\[\text{precision} = \frac{TP}{TP + FP} = \frac{15}{15 + 10} = 0.6\]

\[\text{recall} = \frac{TP}{TP + FN} = \frac{15}{15 + 5} = 0.75\]
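Both formulas in Python, as a sketch. When the denominator is zero (no predicted or no actual BBQ days), this code returns 0.0. That is a convention we assume here; other tools may warn or handle the case differently.

```python
def precision(tp, fp):
    """Of all predicted BBQ days, the fraction that truly are BBQ days."""
    return tp / (tp + fp) if tp + fp > 0 else 0.0

def recall(tp, fn):
    """Of all true BBQ days, the fraction the model predicted as BBQ days."""
    return tp / (tp + fn) if tp + fn > 0 else 0.0

print(precision(tp=15, fp=10), recall(tp=15, fn=5))  # 0.6 0.75
```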

F1 score

Often you want both precision and recall to be high.

We can combine them into the F1 score, the harmonic mean of precision and recall: \[F_1 = \frac{2pr}{p + r}\] where p is precision and r is recall.
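Here is a sketch of the F1 score in Python, applied to the precision (0.6) and recall (0.75) computed above. The harmonic mean is pulled toward the lower of the two values, so F1 ≈ 0.67 here lies below the arithmetic mean of 0.675. The same value follows directly from the counts, since F1 = 2TP / (2TP + FP + FN) = 30 / 45. The function names are our own.

```python
def f1(p, r):
    """Harmonic mean of precision p and recall r (0.0 if both are zero)."""
    return 2 * p * r / (p + r) if p + r > 0 else 0.0

def f1_from_counts(tp, fp, fn):
    """Equivalent formulation directly from the confusion-matrix counts."""
    return 2 * tp / (2 * tp + fp + fn) if tp + fp + fn > 0 else 0.0

print(round(f1(0.6, 0.75), 4))              # 0.6667
print(round(f1_from_counts(15, 10, 5), 4))  # 0.6667
```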

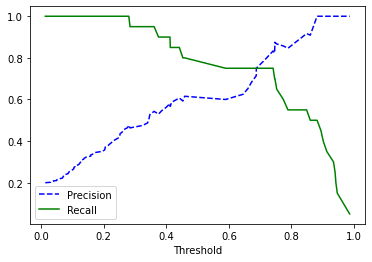

Precision/recall trade-off

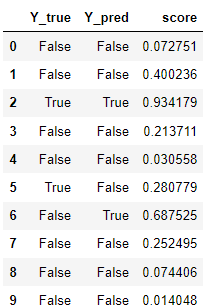

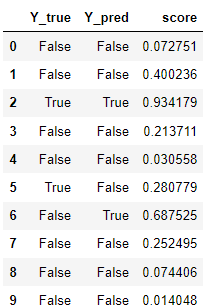

For most models, we do not just get the predicted class, but also an associated score.

If the score is above a chosen threshold, the model assigns that class (here “BBQ”); otherwise it predicts the other class (“No BBQ”).
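Here is a minimal sketch of this thresholding step. It assumes the score is a number between 0 and 1 that expresses how confident the model is that a day is a BBQ day. The scores, the default threshold of 0.5, and the function name `predict_with_threshold` are all hypothetical. Whether a score exactly equal to the threshold counts as “BBQ” varies between tools; here, only scores strictly above it do.

```python
def predict_with_threshold(scores, threshold=0.5):
    """Return 1 ("BBQ") for scores strictly above the threshold, else 0 ("No BBQ")."""
    return [1 if s > threshold else 0 for s in scores]

# Hypothetical model scores for four days.
scores = [0.9, 0.7, 0.4, 0.2]
print(predict_with_threshold(scores))                 # [1, 1, 0, 0]
print(predict_with_threshold(scores, threshold=0.3))  # [1, 1, 1, 0]
```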

Exercise

What do you think happens with the precision and recall when we increase or decrease the threshold?

Solution

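- Increasing the threshold makes the model stricter: fewer days are predicted as BBQ days, so recall drops (or at best stays the same), while precision may improve (if our model ranks the days well).

- Decreasing the threshold makes the model more lenient: more days are predicted as BBQ days, so recall increases (or at worst stays the same), but precision could drop.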
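The effect can be checked with a small threshold sweep. The ten scores and labels below are made up for illustration (1 = BBQ day). The sketch recomputes precision and recall at three thresholds and again counts a day as “BBQ” only when its score is strictly above the threshold. The function name `precision_recall_at` is hypothetical.

```python
# Hypothetical scores (model's confidence in "BBQ") and true labels for 10 days.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
y_true = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

def precision_recall_at(threshold, scores, y_true):
    """Precision and recall when predicting "BBQ" for scores above the threshold."""
    y_pred = [1 if s > threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall

for threshold in (0.25, 0.55, 0.85):
    p, r = precision_recall_at(threshold, scores, y_true)
    print(f"threshold={threshold:.2f}  precision={p:.3f}  recall={r:.3f}")
# threshold=0.25  precision=0.625  recall=1.000
# threshold=0.55  precision=0.800  recall=0.800
# threshold=0.85  precision=1.000  recall=0.400
```

On this toy data, raising the threshold from 0.25 to 0.85 drops recall from 1.0 to 0.4, while precision rises from 0.625 to 1.0. Recall can never increase when the threshold goes up, because the set of predicted BBQ days only shrinks. Precision, however, is not guaranteed to rise monotonically on real data.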