Section 1: Setting Up Environment For Collaborative Code Development

Overview

Teaching: 10 min

Exercises: 0 min

Questions

What tools are needed to collaborate on code development effectively?

Objectives

Provide an overview of all the different tools that will be used in this course.

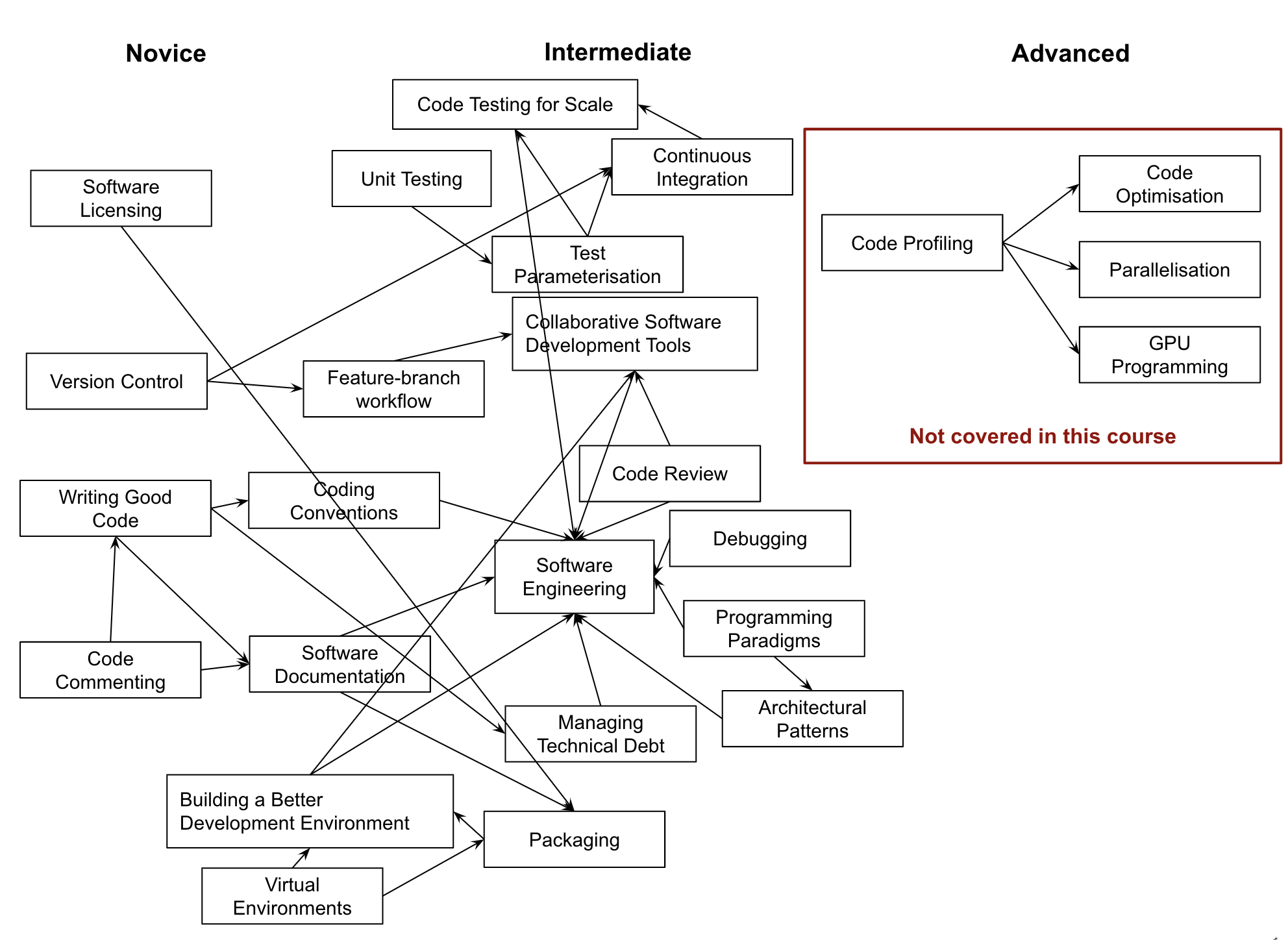

The first section of the course is dedicated to setting up your environment for collaborative software development. In order to build working (research) software efficiently and to do it in collaboration with others rather than in isolation, you will have to get comfortable with using a number of different tools interchangeably as they’ll make your life a lot easier. There are many options when it comes to deciding which software development tools to use for your daily tasks - we will use a few of them in this course that we believe make a difference. There are sometimes multiple tools for the job - we select one to use but mention alternatives too. As you get more comfortable with different tools and their alternatives, you will select the one that is right for you based on your personal preferences or based on what your collaborators are using.

Here is an overview of the tools we will be using.

Command Line & Python Virtual Development Environment

We will use the command line (also known as the command line shell/prompt/console) to run our Python code and interact with the version control tool Git and software sharing platform Bitbucket.

We expect that you already know how to use a virtual environment. We recommend venv and pip to set up a Python virtual development environment and isolate our software project from other Python projects we may work on.
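As a minimal sketch of what isolating a project means in practice, the snippet below creates a virtual environment with Python's standard-library venv module, the same module that runs when you type python3 -m venv. The helper name create_environment and the directory name demo-venv are illustrative assumptions and not part of the course material.

```python
import tempfile
import venv
from pathlib import Path


def create_environment(env_dir: Path, with_pip: bool = False) -> Path:
    """Create a virtual environment in env_dir and return its config file path.

    with_pip=False keeps the sketch fast and offline; pass True to also
    bootstrap pip into the new environment.
    """
    venv.EnvBuilder(with_pip=with_pip).create(env_dir)
    # Every virtual environment has a pyvenv.cfg file at its root.
    return env_dir / "pyvenv.cfg"


# Hypothetical location: a throwaway directory, so nothing is left behind.
with tempfile.TemporaryDirectory() as tmp:
    config_file = create_environment(Path(tmp) / "demo-venv")
    environment_created = config_file.exists()
    print("Environment created:", environment_created)
```

In day-to-day work you would more likely create the environment once from the command line, activate it, and then install packages into it with pip.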

Note: some Windows users experience an issue where Python hangs from Git Bash (i.e. typing python causes it to just hang with no error message or output) - see the solution to this issue.

Integrated Development Environment (IDE)

An IDE integrates a number of tools that we need to develop a software project that goes beyond a single script - including a smart code editor, a code compiler/interpreter, a debugger, etc. It will help you write well-formatted & readable code that conforms to code style guides (such as PEP8 for Python) more efficiently by giving relevant and intelligent suggestions for code completion and refactoring. IDEs often integrate command line console and version control tools - we teach them separately in this course as this knowledge can be ported to other programming languages and command line tools you may use in the future (but is applicable to the integrated versions too).

You are free to use any IDE you like for this course. We recommend PyCharm - a free, open source IDE.

Git & Bitbucket

Git is a free and open source distributed version control system designed to save every change made to a (software) project, allowing others to collaborate and contribute. In this course, we use Git to version control our code in conjunction with Bitbucket for code backup and sharing. Bitbucket is one of the integrated products and social platforms for modern software development, monitoring and management - it will help us with version control, issue management, code review, code testing/Continuous Integration, and collaborative development.

Let’s get started with setting up our software development environment!

Key Points

In order to develop (write, test, debug, backup) code efficiently, you need to use a number of different tools.

When there is a choice of tools for a task you will have to decide which tool is right for you, which may be a matter of personal preference or what the team or community you belong to is using.

Introduction to Our Software Project

Overview

Teaching: 20 min

Exercises: 10 min

Questions

What is the design architecture of our example software project?

Why is splitting code into smaller functional units (modules) good when designing software?

Objectives

Use Git to obtain a working copy of our software project from Bitbucket.

Inspect the structure and architecture of our software project.

Understand Model-View-Controller (MVC) architecture in software design and its use in our project.

Patient Inflammation Study Project

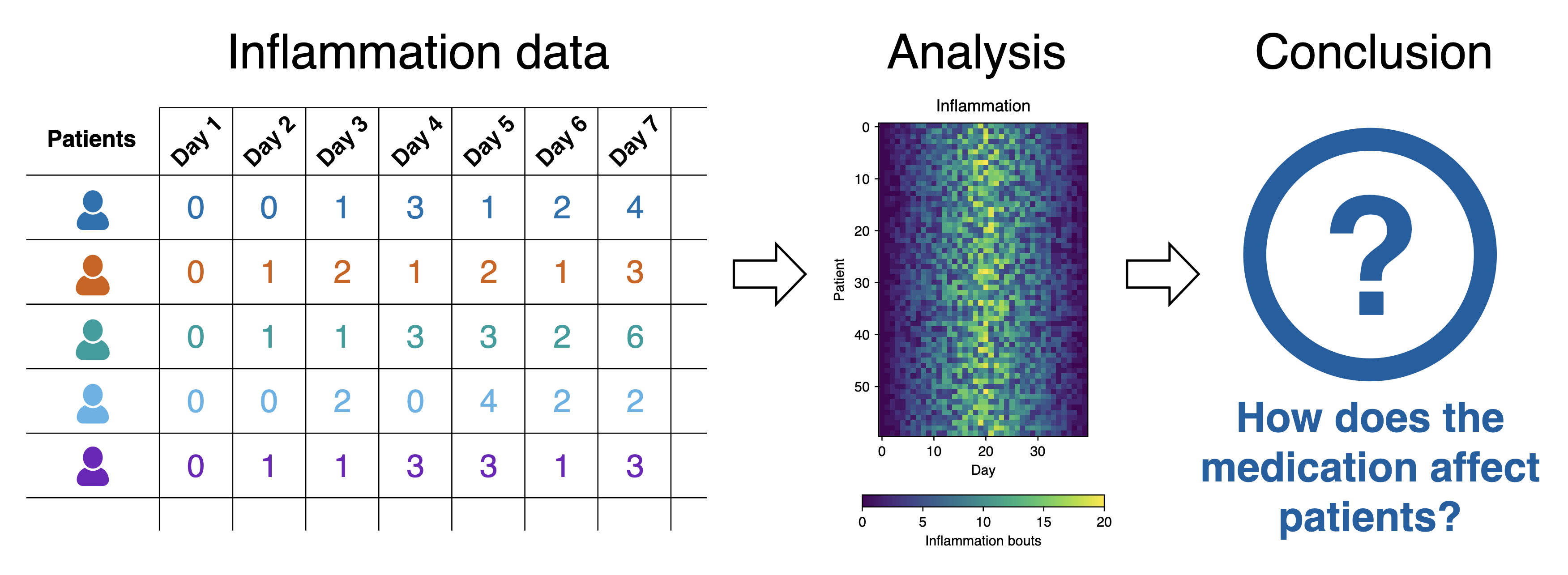

So, you have joined a software development team that has been working on the patient inflammation study project developed in Python and stored on Bitbucket. The project analyses the data to study the effect of a new treatment for arthritis by analysing the inflammation levels in patients who have been given this treatment. It reuses the inflammation datasets from the Software Carpentry Python novice lesson.

Inflammation study pipeline from the Software Carpentry Python novice lesson

What Does Patient Inflammation Data Contain?

Each dataset records inflammation measurements from a separate clinical trial of the drug, and each dataset contains information for 60 patients, who had their inflammation levels recorded for 40 days whilst participating in the trial (a snapshot of one of the data files is shown in diagram above).

Each of the data files uses the popular comma-separated (CSV) format to represent the data, where:

- Each row holds inflammation measurements for a single patient,

- Each column represents a successive day in the trial,

- Each cell represents an inflammation reading on a given day for a patient (in some arbitrary units of inflammation measurement).
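To make the row/column layout concrete, here is a small sketch that reads CSV text in this shape using only the standard library. The sample values are made up for illustration (3 patients over 4 days) and are not taken from the trial files; the function names load_inflammation and mean_per_day are likewise hypothetical.

```python
import csv
import io

# Made-up sample: 3 patients (rows) x 4 days (columns), not real trial data.
SAMPLE_CSV = """0,1,3,2
0,2,2,4
0,3,1,6
"""


def load_inflammation(text_stream):
    """Read CSV text into a list of rows, one list of ints per patient."""
    return [[int(cell) for cell in row] for row in csv.reader(text_stream) if row]


def mean_per_day(data):
    """Average each column, i.e. each day, across all patients."""
    return [sum(day) / len(day) for day in zip(*data)]


data = load_inflammation(io.StringIO(SAMPLE_CSV))
print(len(data), "patients,", len(data[0]), "days")
print("Patient 0 readings:", data[0])
print("Mean per day:", mean_per_day(data))
```

Indexing data[patient][day] follows directly from the layout: the first index selects a row (patient) and the second a column (day).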

The project is not finished and contains some errors. You will be working on your own and in collaboration with others to fix and build on top of the existing code during the course.

Downloading Our Software Project

To start working on the project, you will first create a fork of the software project repository from Bitbucket within your own Bitbucket account and then obtain a local copy of that project (from your Bitbucket) on your machine. If you have not yet forked the repository at https://bitbucket.org/svenvanderburg1/python-intermediate-inflammation/src/main/ please do so now!

Exercise: Obtain the Software Project Locally

Using the command line, clone the copied repository from your Bitbucket account into the home directory on your computer using SSH. Which command(s) would you use to get a detailed list of contents of the directory you have just cloned?

Solution

- Find the SSH URL of the software project repository to clone from your Bitbucket account. Make sure you do not clone the original template repository but rather your own copy, as you should be able to push commits to it later on. Also make sure you select the SSH tab and not the HTTPS one - you’ll be able to clone with HTTPS, but not to send your changes back to Bitbucket!

- Make sure you are located in your home directory in the command line with:

$ cd ~

- From your home directory in the command line, do:

$ git clone <YOUR_REPO_URL>

Make sure you are cloning your copy of the software project and not the template repository.

- Navigate into the cloned repository folder in your command line with:

$ cd python-intermediate-inflammation

Note: If you have accidentally copied the HTTPS URL of your repository instead of the SSH one, you can easily fix that from your project folder in the command line with:

$ git remote set-url origin <YOUR_REPO_URL>

Our Software Project Structure

Let’s inspect the content of the software project from the command line. From the root directory of the project, you can use the command ls -l to get a more detailed list of the contents. You should see something similar to the following.

$ cd ~/python-intermediate-inflammation

$ ls -l

total 24

-rw-r--r-- 1 carpentry users 1055 20 Apr 15:41 README.md

drwxr-xr-x 18 carpentry users 576 20 Apr 15:41 data

drwxr-xr-x 5 carpentry users 160 20 Apr 15:41 inflammation

-rw-r--r-- 1 carpentry users 1122 20 Apr 15:41 inflammation-analysis.py

drwxr-xr-x 4 carpentry users 128 20 Apr 15:41 tests

As can be seen from the above, our software project contains the README file (that typically describes the project, its usage, installation, authors and how to contribute), Python script inflammation-analysis.py, and three directories - inflammation, data and tests.

The Python script inflammation-analysis.py provides the main entry point in the application, and on closer inspection, we can see that the inflammation directory contains two more Python scripts - views.py and models.py. We will have a more detailed look into these shortly.

$ ls -l inflammation

total 24

-rw-r--r-- 1 alex staff 71 29 Jun 09:59 __init__.py

-rw-r--r-- 1 alex staff 838 29 Jun 09:59 models.py

-rw-r--r-- 1 alex staff 649 25 Jun 13:13 views.py

Directory data contains several files with patients’ daily inflammation information (along with some other files):

$ ls -l data

total 264

-rw-r--r-- 1 alex staff 5365 25 Jun 13:13 inflammation-01.csv

-rw-r--r-- 1 alex staff 5314 25 Jun 13:13 inflammation-02.csv

-rw-r--r-- 1 alex staff 5127 25 Jun 13:13 inflammation-03.csv

-rw-r--r-- 1 alex staff 5367 25 Jun 13:13 inflammation-04.csv

-rw-r--r-- 1 alex staff 5345 25 Jun 13:13 inflammation-05.csv

-rw-r--r-- 1 alex staff 5330 25 Jun 13:13 inflammation-06.csv

-rw-r--r-- 1 alex staff 5342 25 Jun 13:13 inflammation-07.csv

-rw-r--r-- 1 alex staff 5127 25 Jun 13:13 inflammation-08.csv

-rw-r--r-- 1 alex staff 5327 25 Jun 13:13 inflammation-09.csv

-rw-r--r-- 1 alex staff 5342 25 Jun 13:13 inflammation-10.csv

-rw-r--r-- 1 alex staff 5127 25 Jun 13:13 inflammation-11.csv

-rw-r--r-- 1 alex staff 5340 25 Jun 13:13 inflammation-12.csv

-rw-r--r-- 1 alex staff 22554 25 Jun 13:13 python-novice-inflammation-data.zip

-rw-r--r-- 1 alex staff 12 25 Jun 13:13 small-01.csv

-rw-r--r-- 1 alex staff 15 25 Jun 13:13 small-02.csv

-rw-r--r-- 1 alex staff 12 25 Jun 13:13 small-03.csv

As previously mentioned, each of the inflammation data files contains separate trial data for 60 patients over 40 days.

Exercise: Have a Peek at the Data

Which command(s) would you use to list the contents or a first few lines of the data/inflammation-01.csv file?

Solution

- To list the entire content of a file from the project root do: cat data/inflammation-01.csv

- To list the first 5 lines of a file from the project root do: head -n 5 data/inflammation-01.csv

0,0,1,3,2,3,6,4,5,7,2,4,11,11,3,8,8,16,5,13,16,5,8,8,6,9,10,10,9,3,3,5,3,5,4,5,3,3,0,1

0,1,1,2,2,5,1,7,4,2,5,5,4,6,6,4,16,11,14,16,14,14,8,17,4,14,13,7,6,3,7,7,5,6,3,4,2,2,1,1

0,1,1,1,4,1,6,4,6,3,6,5,6,4,14,13,13,9,12,19,9,10,15,10,9,10,10,7,5,6,8,6,6,4,3,5,2,1,1,1

0,0,0,1,4,5,6,3,8,7,9,10,8,6,5,12,15,5,10,5,8,13,18,17,14,9,13,4,10,11,10,8,8,6,5,5,2,0,2,0

0,0,1,0,3,2,5,4,8,2,9,3,3,10,12,9,14,11,13,8,6,18,11,9,13,11,8,5,5,2,8,5,3,5,4,1,3,1,1,0

Directory tests contains several tests that have been implemented already. We will be adding more tests during the course as our code grows.

An important thing to note here is that the structure of the project is not arbitrary. One of the big differences between novice and intermediate software development is planning the structure of your code. This structure includes software components and behavioural interactions between them (including how these components are laid out in a directory and file structure). A novice will often make up the structure of their code as they go along. However, for more advanced software development, we need to plan this structure - called a software architecture - beforehand.

Let’s have a more detailed look into what a software architecture is and which architecture is used by our software project before we start adding more code to it.

Software Architecture

A software architecture is the fundamental structure of a software system that is decided at the beginning of project development based on its requirements and cannot be changed that easily once implemented. It refers to a “bigger picture” of a software system that describes high-level components (modules) of the system and how they interact.

In software design and development, large systems or programs are often decomposed into a set of smaller modules, each with a subset of functionality. Typical examples of modules in programming are software libraries; some software libraries, such as numpy and matplotlib in Python, are bigger modules that contain several smaller sub-modules. Classes in object-oriented programming languages are another example of modules.

Programming Modules and Interfaces

Although modules are self-contained and independent elements to a large extent (they can depend on other modules), there are well-defined ways of how they interact with one another. These rules of interaction are called programming interfaces - they define how other modules (clients) can use a particular module. Typically, an interface to a module includes rules on how a module can take input from and how it gives output back to its clients. A client can be a human, in which case we also call these user interfaces. Even smaller functional units such as functions/methods have clearly defined interfaces - a function/method’s definition (also known as a signature) states what parameters it can take as input and what it returns as an output.
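The idea of a function signature as an interface can be sketched in a few lines. The function below is a hypothetical stand-in that only borrows the name daily_mean for illustration; the version in the project may well look different.

```python
import inspect


def daily_mean(data: list[list[float]]) -> list[float]:
    """Return the mean reading for each day (column) of a 2D data table.

    Interface: takes a list of rows (one per patient) and returns one
    number per day. Callers need to know nothing else about the body.
    """
    return [sum(day) / len(day) for day in zip(*data)]


# The signature is the function's interface, and Python can display it.
signature = inspect.signature(daily_mean)
print(signature)

# A client relies only on the interface, not on the implementation.
print(daily_mean([[1.0, 2.0], [3.0, 4.0]]))
```

As long as the signature and the documented behaviour stay the same, the body can be rewritten (for example to use numpy) without any client code having to change.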

There are various software architectures around defining different ways of dividing the code into smaller modules with well defined roles, for example:

- Model–View–Controller (MVC) architecture, which we will look into in detail and use for our software project,

- Service-oriented architecture (SOA), which separates code into distinct services, accessible over a network by consumers (users or other services) that communicate with each other by passing data in a well-defined, shared format (protocol),

- Client-server architecture, where clients request content or service from a server, initiating communication sessions with servers, which await incoming requests (e.g. email, network printing, the Internet),

- Multilayer architecture, in which presentation, application processing and data management functions are split into distinct layers and may even be physically separated to run on separate machines - some more detail on this later in the course.

Model-View-Controller (MVC) Architecture

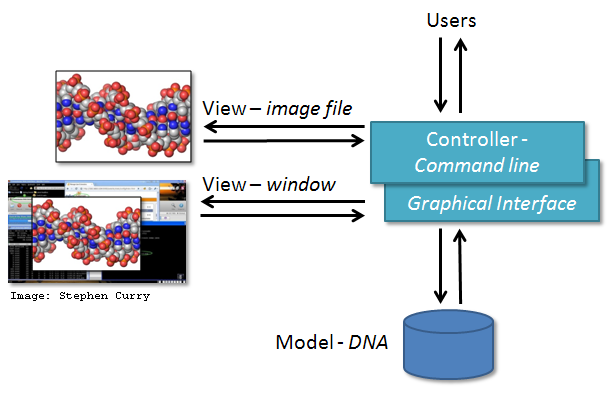

MVC architecture divides the related program logic into three interconnected modules:

- Model (data)

- View (client interface), and

- Controller (processes that handle input/output and manipulate the data).

Model represents the data used by a program and also contains operations/rules for manipulating and changing the data in the model. This may be a database, a file, a single data object or a series of objects - for example a table representing patients’ data.

View is the means of displaying data to users/clients within an application (i.e. provides visualisation of the state of the model). For example, displaying a window with input fields and buttons (Graphical User Interface, GUI) or textual options within a command line (Command Line Interface, CLI) are examples of Views. They include anything that the user can see from the application. While building GUIs is not the topic of this course, we will cover building CLIs in Python in later episodes.

Controller manipulates both the Model and the View. It accepts input from the View and performs the corresponding action on the Model (changing the state of the model) and then updates the View accordingly. For example, on user request, Controller updates a picture on a user’s GitHub profile and then modifies the View by displaying the updated profile back to the user.
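The three roles described above can be sketched in a few lines of Python. This is a toy illustration under assumed names (PatientModel, render_summary, handle_new_reading); it is not how the course project is implemented.

```python
# Model: holds the data and the rules for working with it.
class PatientModel:
    def __init__(self, readings):
        self.readings = list(readings)

    def add_reading(self, value):
        if value < 0:
            raise ValueError("inflammation reading cannot be negative")
        self.readings.append(value)

    def mean(self):
        return sum(self.readings) / len(self.readings)


# View: turns the state of the model into something a user can read.
def render_summary(model):
    return f"{len(model.readings)} readings, mean {model.mean():.2f}"


# Controller: accepts a request, updates the model, then refreshes the view.
def handle_new_reading(model, raw_input):
    model.add_reading(float(raw_input))
    return render_summary(model)


patient = PatientModel([1, 3, 2])
print(handle_new_reading(patient, "6"))
```

Note that the Model knows nothing about how it is displayed and the View never changes the data, so either could be swapped out without touching the other.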

MVC Examples

MVC architecture can be applied in scientific applications in the following manner. Model comprises those parts of the application that deal with some type of scientific processing or manipulation of the data, e.g. numerical algorithm, simulation, DNA. View is a visualisation, or format, of the output, e.g. graphical plot, diagram, chart, data table, file. Controller is the part that ties the scientific processing and output parts together, mediating input and passing it to the model or view, e.g. command line options, mouse clicks, input files. For example, the diagram below depicts the use of MVC architecture for the DNA Guide Graphical User Interface application.

Exercise: MVC Application Examples From your Work

Think of some other examples from your work or life where MVC architecture may be suitable or have a discussion with your fellow learners.

Solution

MVC architecture is a popular choice when designing web and mobile applications. Users interact with a web/mobile application by sending various requests to it. Forms to collect users’ inputs/requests together with the info returned and displayed to the user as a result represent the View. Requests are processed by the Controller, which interacts with the Model to retrieve or update the underlying data. For example, a user may request to view their profile. The Controller retrieves the account information for the user from the Model and passes it to the View for rendering. The user may further interact with the application by asking it to update their personal information. The Controller verifies the correctness of the information (e.g. the password satisfies certain criteria, postal address and phone number are in the correct format, etc.) and passes it to the Model for permanent storage. The View is then updated accordingly and the user sees their updated profile details.

Note that not everything fits into the MVC architecture but it is still good to think about how things could be split into smaller units. For a few more examples, have a look at this short article on MVC from Codecademy.

Separation of Concerns

Separation of concerns is important when designing software architectures in order to reduce the code’s complexity. Note, however, there are limits to everything - and MVC architecture is no exception. The Controller often spills over into the Model and View, and a clear separation is sometimes difficult to maintain. For example, the Command Line Interface provides both the View (what the user sees and how they interact with the command line) and the Controller (invoking of a command) aspects of a CLI application. In Web applications, the Controller often manipulates the data (received from the Model) before displaying it to the user or passing it from the user to the Model.

Our Project’s MVC Architecture

Our software project uses the MVC architecture. The file inflammation-analysis.py is the Controller module that performs basic statistical analysis over patient data and provides the main entry point into the application. The View and Model modules are contained in the files views.py and models.py, respectively, and are conveniently named. Data underlying the Model is contained within the directory data - as we have seen already it contains several files with patients’ daily inflammation information.

We will revisit the software architecture and MVC topics once again in later episodes when we talk in more detail about software’s business/user/solution requirements and software design. We now proceed to set up our virtual development environment and start working with the code using a more convenient graphical tool - IDE PyCharm.

Key Points

Programming interfaces define how individual modules within a software application interact among themselves or how the application itself interacts with its users.

MVC is a software design architecture which divides the application into three interconnected modules: Model (data), View (user interface), and Controller (input/output and data manipulation).

The software project we use throughout this course is an example of an MVC application that manipulates patients’ inflammation data and performs basic statistical analysis using Python.

Collaborative Software Development Using Git and Bitbucket

Overview

Teaching: 35 min

Exercises: 0 min

Questions

What are Git branches and why are they useful for code development?

What are some best practices when developing software collaboratively using Git?

Objectives

Commit changes in a software project to a local repository and publish them in a remote repository on Bitbucket

Create branches for managing different threads of code development

Learn to use feature branch workflow to effectively collaborate with a team on a software project

Introduction

Here we will learn how to use the feature branch workflow to effectively collaborate with a team on a software project.

Firstly, let’s remind ourselves how to work with Git from the command line.

Git Refresher

Git is a version control system for tracking changes in computer files and coordinating work on those files among multiple people. It is primarily used for source code management in software development but it can be used to track changes in files in general - it is particularly effective for tracking text-based files (e.g. source code files, CSV, Markdown, HTML, CSS, Tex, etc. files).

Git has several important characteristics:

- support for non-linear development allowing you and your colleagues to work on different parts of a project concurrently,

- support for distributed development allowing for multiple people to be working on the same project (even the same file) at the same time,

- every change recorded by Git remains part of the project history and can be retrieved at a later date, so even if you make a mistake you can revert to a point before it.

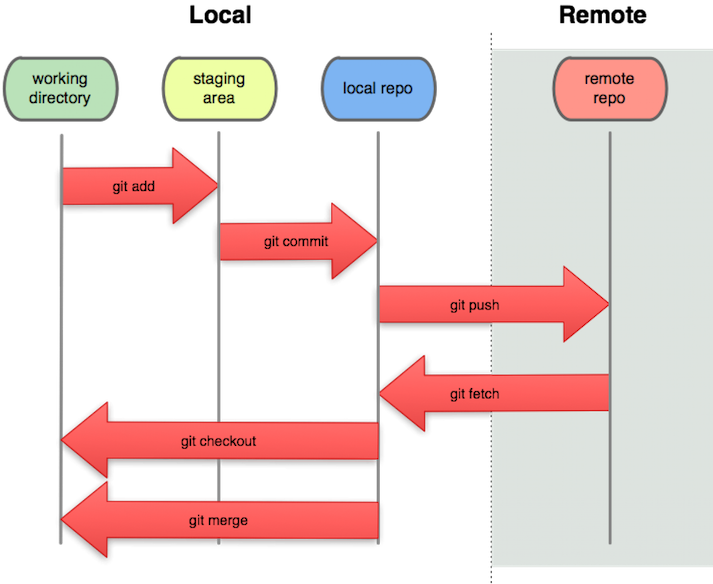

The diagram below shows a typical software development lifecycle with Git and the commonly used commands to interact with different parts of Git infrastructure, such as:

- working directory - a directory (including any subdirectories) where your project files live and where you are currently working. It is also known as the “untracked” area of Git. Any changes to files will be marked by Git in the working directory. If you make changes to the working directory and do not explicitly tell Git to save them - you will likely lose those changes. Using the git add filename command, you tell Git to start tracking changes to file filename within your working directory.

- staging area (index) - once you tell Git to start tracking changes to files (with the git add filename command), Git saves those changes in the staging area. Each subsequent change to the same file needs to be followed by another git add filename command to tell Git to update it in the staging area. To see what is in your working directory and staging area at any moment (i.e. what changes Git is tracking), run the command git status.

- local repository - stored within the .git directory of your project, this is where Git wraps together all your changes from the staging area and puts them using the git commit command. Each commit is a new, permanent snapshot (checkpoint, record) of your project in time, which you can share or revert back to.

- remote repository - this is a version of your project that is hosted somewhere on the Internet (e.g. on Bitbucket, GitHub, GitLab or somewhere else). While your project is nicely version-controlled in your local repository, and you have snapshots of its versions from the past, if your machine crashes - you still may lose all your work. Working with a remote repository involves pushing your changes and pulling other people’s changes to keep your local repository in sync in order to collaborate with others and to back up your work on a different machine.

Software development lifecycle with Git from PNGWing
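The relationship between the working directory, the staging area and the local repository can be mimicked with a deliberately simplified toy model. The class ToyRepository below is an illustrative assumption and not how Git is implemented (real Git's index, for instance, is not emptied after a commit); it only shows why a file changed after git add has to be added again before committing.

```python
class ToyRepository:
    """A toy model of Git's areas; real Git stores far more than this."""

    def __init__(self):
        self.working_directory = {}  # file name -> current content on disk
        self.staging_area = {}       # content captured by the last add
        self.commits = []            # list of (message, snapshot) tuples

    def edit(self, name, content):
        self.working_directory[name] = content

    def add(self, name):
        # Staging copies the content as it is right now.
        self.staging_area[name] = self.working_directory[name]

    def commit(self, message):
        # Start from the previous snapshot, then apply what was staged.
        snapshot = dict(self.commits[-1][1]) if self.commits else {}
        snapshot.update(self.staging_area)
        self.commits.append((message, snapshot))
        self.staging_area = {}


repo = ToyRepository()
repo.edit("README.md", "How to run the program")
repo.add("README.md")
repo.edit("README.md", "How to run and test the program")  # not re-added
repo.commit("Describe how to run the program")

committed = repo.commits[-1][1]["README.md"]
print(committed)
```

The commit contains the text as it was when add was called, while the later edit lives only in the working directory until it is staged and committed too.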

Checking-in Changes to Our Project

First make sure that you have some changes in your project.

Update the README.md file, for example add a small description of how users can run your program.

Check the status:

$ git status

On branch main

Your branch is up to date with 'origin/main'.

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: README.md

no changes added to commit (use "git add" and/or "git commit -a")

As expected, Git is telling us that we have some changes present in our working directory which we have not staged nor committed to our local repository yet.

To commit the changes in README.md to the local repository, we first have to add this file to the staging area to prepare it for committing. We can do that with:

$ git add README.md

Now we can commit them to the local repository with:

$ git commit -m "Initial commit of README.md."

Remember to use meaningful messages for your commits.

So far we have been working in isolation - all the changes we have done are still only stored locally on our individual machines. In order to share our work with others, we should push our changes to the remote repository on Bitbucket.

Before we push our changes however, we should first do a git pull. This is considered best practice, since any changes made to the repository - notably by other people - may impact the changes we are about to push. This could occur, for example, by two collaborators making different changes to the same lines in a file. By pulling first, we are made aware of any changes made by others, in particular if there are any conflicts between their changes and ours.

$ git pull

Now that we have ensured our repository is synchronised with the remote one, we can push our changes:

$ git push origin main

Authentication Errors

If you get a warning that HTTPS access is deprecated, or a token is required, then you accidentally cloned the repository using HTTPS and not SSH. You can fix this from the command line by resetting the remote repository URL setting on your local repo:

$ git remote set-url origin <YOUR_PROJECT_SSH_URL>

In the git push origin main command above, origin is an alias for the remote repository you used when cloning the project locally (it is called that by convention and set up automatically by Git when you run the git clone remote_url command to replicate a remote repository locally); main is the name of our main (and currently only) development branch.

Git Remotes

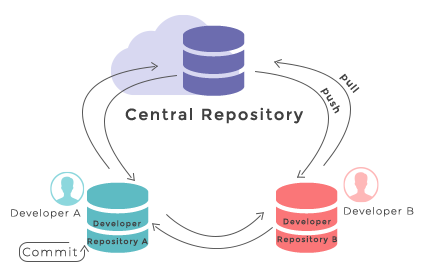

Note that systems like Git allow us to synchronise work between any two or more copies of the same repository - the ones that are not located on your machine are “Git remotes” for you. In practice, though, it is easiest to agree with your collaborators to use one copy as a central hub (such as Bitbucket), where everyone pushes their changes to. This also avoids risks associated with keeping the “central copy” on someone’s laptop. You can have more than one remote configured for your local repository, each of which generally is either read-only or read/write for you. Collaborating with others involves managing these remote repositories and pushing and pulling information to and from them when you need to share work.

Git - distributed version control system

From W3Docs (freely available)

Git Branches

When we do git status, Git also tells us that we are currently on the main branch of the project. A branch is one version of your project (the files in your repository) that can contain its own set of commits. We can create a new branch, make changes to the code which we then commit to the branch, and, once we are happy with those changes, merge them back to the main branch. To see what other branches are available, do:

$ git branch

* main

At the moment, there’s only one branch (main) and hence only one version of the code available. When you create a Git repository for the first time, by default you only get one version (i.e. branch) - main. Let’s have a look at why having different branches might be useful.

Feature Branch Software Development Workflow

While it is technically OK to commit your changes directly to main branch, and you may often find yourself doing so for some minor changes, the best practice is to use a new branch for each separate and self-contained unit/piece of work you want to add to the project. This unit of work is also often called a feature and the branch where you develop it is called a feature branch. Each feature branch should have its own meaningful name - indicating its purpose (e.g. “issue23-fix”). If we keep making changes and pushing them directly to main branch on Bitbucket, then anyone who downloads our software from there will get all of our work in progress - whether or not it’s ready to use! So, working on a separate branch for each feature you are adding is good for several reasons:

- it enables the main branch to remain stable while you and the team explore and test the new code on a feature branch,

- it enables you to keep the untested and not-yet-functional feature branch code under version control and backed up,

- you and other team members may work on several features at the same time independently from one another,

- if you decide that the feature is not working or is no longer needed - you can easily and safely discard that branch without affecting the rest of the code.

Branches are commonly used as part of a feature-branch workflow, shown in the diagram below.

Git feature branches

Adapted from Git Tutorial by sillevl (Creative Commons Attribution 4.0 International License)

In the software development workflow, we typically have a main branch which is the version of the code that is tested, stable and reliable. Then, we normally have a development branch (called develop or dev by convention) that we use for work-in-progress code. As we work on adding new features to the code, we create new feature branches that first get merged into develop after a thorough testing process. After even more testing - develop branch will get merged into main.

The points when feature branches are merged to develop, and develop to main depend entirely on the practice/strategy established in the team. For example, for smaller projects (e.g. if you are working alone on a project or in a very small team), feature branches sometimes get directly merged into main upon testing, skipping the develop branch step. In other projects, the merge into main happens only at the point of making a new software release. Whichever is the case for you, a good rule of thumb is - nothing that is broken should be in main.

Creating Branches

Let’s create a develop branch to work on:

$ git branch develop

This command does not give any output, but if we run git branch again, without giving it a new branch name, we can see the list of branches we have - including the new one we have just made.

$ git branch

develop

* main

The * indicates the currently active branch. So how do we switch to our new branch? We use the git checkout command with the name of the branch:

$ git checkout develop

Switched to branch 'develop'

Create and Switch to Branch Shortcut

A shortcut to create a new branch and immediately switch to it:

$ git checkout -b develop

Updating Branches

If we start updating and committing files now, the commits will happen on the develop branch and will not affect the version of the code in main. We add and commit things to develop branch in the same way as we do to main.

Let’s make a small modification to inflammation/models.py, and, say, change the spelling of “2d” to “2D” in docstrings for functions daily_mean(), daily_max() and daily_min().

If we do:

$ git status

On branch develop

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git checkout -- <file>..." to discard changes in working directory)

modified: inflammation/models.py

no changes added to commit (use "git add" and/or "git commit -a")

Git is telling us that we are on branch develop and which tracked files have been modified in our working directory.

We can now add and commit the changes in the usual way.

$ git add inflammation/models.py

$ git commit -m "Spelling fix"

Currently Active Branch

Remember, add and commit commands always act on the currently active branch. You have to be careful and aware of which branch you are working with at any given moment. git status can help with that, and you will find yourself invoking it very often.

Pushing New Branch Remotely

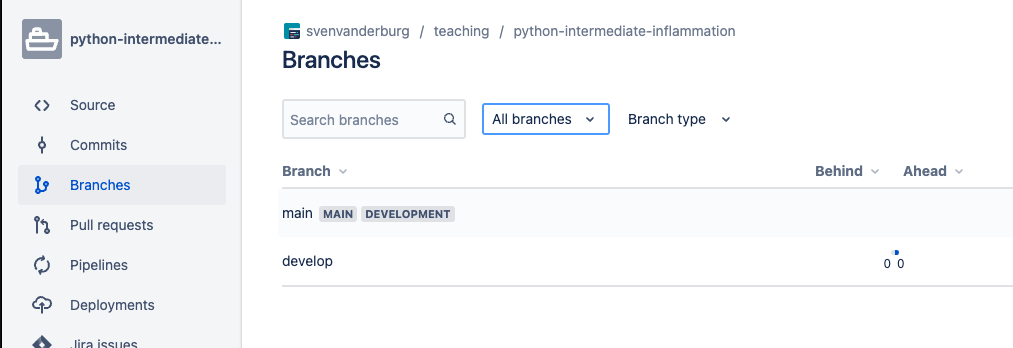

We push the contents of the develop branch to Bitbucket in the same way as we pushed the main branch. However, as we have

just created this branch locally, it still does not exist in our remote repository.

To push a new local branch remotely for the first time, you could use the -u switch and the name of the branch you

are creating and pushing to:

$ git push -u origin develop

Git Push With -u Switch

Using the -u switch with the git push command is a handy shortcut for: (1) creating the new remote branch and (2) setting your local branch to automatically track the remote one at the same time. You need to use the -u switch only once to set up that association between your branch and the remote one explicitly. After that you could simply use git push without specifying the remote repository, if you so wished. We still prefer to explicitly state this information in commands.

Let’s confirm that the new branch develop now exists remotely on Bitbucket too. Click ‘Branches’ in the left navigation bar to see it.

Now the others can check out the develop branch too and continue to develop code on it.

After the initial push of the new branch, every subsequent push to it is done in the usual manner (i.e. without the -u switch):

$ git push origin develop

What is the Relationship Between Originating and New Branches?

It’s natural to think that new branches have a parent/child relationship with their originating branch, but in actual Git terms, branches themselves do not have parents but single commits do. Any commit can have zero parents (a root, or initial, commit), one parent (a regular commit), or multiple parents (a merge commit), and using this structure, we can build a ‘view’ of branches from a set of commits and their relationships. A common way to look at it is that Git branches are really only lightweight, movable pointers to commits. So as a new commit is added to a branch, the branch pointer is moved to the new commit.

What this means is that when you accomplish a merge between two branches, Git is able to determine the common ‘commit ancestor’ through the commits in a ‘branch’, and use that common ancestor to determine which commits need to be merged onto the destination branch. It also means that, in theory, you could merge any branch with any other at any time… although it may not make sense to do so!
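To make the idea of branches as pointers more concrete, here is a minimal sketch in Python. It is a toy model rather than how Git is implemented: the commit names (c1, c2, ...) and the helper functions ancestors() and merge_bases() are made up for illustration, and the search for a common ancestor is deliberately simplified.

```python
# Toy history: each commit name maps to the list of its parent commits.
# All commit and branch names here are made up for illustration.
parents = {
    "c1": [],        # root commit: zero parents
    "c2": ["c1"],    # regular commit: one parent
    "c3": ["c2"],    # committed on 'develop'
    "c4": ["c2"],    # committed on 'main'
}

# A branch is just a named, movable pointer to one commit.
branches = {"main": "c4", "develop": "c3"}


def ancestors(commit):
    """Return the commit itself plus every commit reachable via parents."""
    seen = set()
    stack = [commit]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(parents[current])
    return seen


def merge_bases(branch_a, branch_b):
    """Return the most recent commits shared by both branches."""
    shared = ancestors(branches[branch_a]) & ancestors(branches[branch_b])
    # Drop shared commits that are ancestors of another shared commit.
    return sorted(
        commit for commit in shared
        if not any(
            commit != other and commit in ancestors(other)
            for other in shared
        )
    )


print(merge_bases("main", "develop"))  # ['c2']

# Merging develop into main creates a commit with two parents
# and moves only the 'main' pointer.
parents["c5"] = [branches["main"], branches["develop"]]
branches["main"] = "c5"
print(branches)  # {'main': 'c5', 'develop': 'c3'}
```

Note that nothing in the model records which branch a commit "belongs" to: the branch view is derived entirely from the pointers and the parent links.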

Merging Into Main Branch

Once you have tested your changes on the develop branch, you will want to merge them onto the main branch.

To do so, make sure you have all your changes committed and switch to main:

$ git checkout main

Switched to branch 'main'

Your branch is up to date with 'origin/main'.

To merge the develop branch on top of main do:

$ git merge develop

Updating 05e1ffb..be60389

Fast-forward

inflammation/models.py | 6 +++---

1 files changed, 3 insertions(+), 3 deletions(-)

If there are no conflicts, Git will merge the branches without complaining. In the output above Git reports a Fast-forward: main had no new commits of its own, so Git simply moved the main branch pointer forward to the last commit on develop. If there are merge conflicts (e.g. a team collaborator modified the same portion of the same file you are working on and checked in their changes before you), the particular files with conflicts will be marked and you will need to resolve those conflicts and commit the changes to complete the merge.
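The ‘Fast-forward’ line in the merge output can be illustrated with a small Python sketch. It is a simplified model, not Git itself: it reuses the two abbreviated commit identifiers from the output above, assumes develop is exactly one commit ahead of main, and the functions is_ancestor() and merge() are hypothetical helpers.

```python
# Toy linear history based on the merge output above: commit -> parent.
# Assumption: develop is exactly one commit ahead of main.
parent = {"05e1ffb": None, "be60389": "05e1ffb"}
branches = {"main": "05e1ffb", "develop": "be60389"}


def is_ancestor(older, newer):
    """Return True if 'older' is reachable by walking back from 'newer'."""
    current = newer
    while current is not None:
        if current == older:
            return True
        current = parent[current]
    return False


def merge(target, source):
    """Fast-forward 'target' to 'source' when no merge commit is needed."""
    if is_ancestor(branches[target], branches[source]):
        branches[target] = branches[source]  # only the pointer moves
        return "Fast-forward"
    return "Merge commit needed"


result = merge("main", "develop")
print(result, branches["main"])
```

If main had gained commits of its own in the meantime, its tip would no longer be an ancestor of develop and a real merge, with a new merge commit, would be required.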

Since we have no conflicts, we can now push the main branch to the remote repository:

$ git push origin main

All Branches Are Equal

In Git, all branches are equal - there is nothing special about the main branch. It is called that by convention and is created by default, but it can also be called something else. A good example is the gh-pages branch, which is the main branch for website projects hosted on GitHub (rather than main, which can be safely deleted for such projects).

Keeping Main Branch Stable

Good software development practice is to keep the main branch stable while you and the team develop and test new functionalities on feature branches (which can be done in parallel and independently by different team members). The next step is to merge feature branches onto the develop branch, where more testing can occur to verify that the new features work well with the rest of the code (and not just in isolation). We talk more about different types of code testing in one of the following episodes.

Key Points

A branch is one version of your project that can contain its own set of commits.

Feature branches enable us to develop / explore / test new code features without affecting the stable main code.

Python Code Style Conventions

Overview

Teaching: 20 min

Exercises: 15 min

Questions

Why should you follow software code style conventions?

Who is setting code style conventions?

What code style conventions exist for Python?

Objectives

Understand the benefits of following community coding conventions

Introduction

We now have all the tools we need for software development and are raring to go. But before you dive into writing some more code and sharing it with others, ask yourself what kind of code should you be writing and publishing? It may be worth spending some time learning a bit about Python coding style conventions to make sure that your code is consistently formatted and readable by yourself and others.

“Any fool can write code that a computer can understand. Good programmers write code that humans can understand.” - Martin Fowler, British software engineer, author and international speaker on software development

Python Coding Style Guide

One of the most important things we can do to make sure our code is readable by others (and ourselves a few months down the line) is to make sure that it is cleanly and consistently formatted and uses sensible, descriptive names for variables, functions and modules. In order to help us format our code, we generally follow guidelines known as a style guide. A style guide is a set of conventions that we agree upon with our colleagues or community, to ensure that everyone contributing to the same project is producing code which looks similar in style. While a group of developers may choose to write and agree upon a new style guide unique to each project, in practice many programming languages have a single style guide which is adopted almost universally by communities around the world. In Python, although we do have a choice of style guides available, the PEP 8 style guide is most commonly used. PEP here stands for Python Enhancement Proposals; PEPs are design documents for the Python community, typically specifications or conventions for how to do something in Python, a description of a new feature in Python, etc.

Style consistency

One of the key insights from Guido van Rossum, one of the PEP 8 authors, is that code is read much more often than it is written. Style guidelines are intended to improve the readability of code and make it consistent across the wide spectrum of Python code. Consistency with the style guide is important. Consistency within a project is more important. Consistency within one module or function is the most important. However, know when to be inconsistent – sometimes style guide recommendations are just not applicable. When in doubt, use your best judgment. Look at other examples and decide what looks best. And don’t hesitate to ask!

Editors or integrated development environments (IDEs) usually highlight the language constructs

(reserved words) and syntax errors to help us with coding. If they do not have that functionality out-of-the-box,

you can often install helper programs, so-called “linters”, like pylint,

or run them independently from the command line. They can also give us recommendations for formatting the

code - these recommendations are mostly taken from the PEP 8 style guide. We will discuss linting tools

like pylint in more detail later.

A full list of style guidelines for this style is available from the PEP 8 website; here we highlight a few.

Indentation

Python is a kind of language that uses indentation as a way of grouping statements that belong to a particular block of code. Spaces are the recommended indentation method in Python code. The guideline is to use 4 spaces per indentation level - so 4 spaces on level one, 8 spaces on level two and so on. Many people prefer the use of tabs to spaces to indent the code for many reasons (e.g. additional typing, easy to introduce an error by missing a single space character, accessibility for individuals using screen readers, etc.) and do not follow this guideline. Whether you decide to follow this guideline or not, be consistent and follow the style already used in the project.

Indentation in Python 2 vs Python 3

Python 2 allowed code indented with a mixture of tabs and spaces. Python 3 disallows mixing the use of tabs and spaces for indentation. Whichever you choose, be consistent throughout the project.

Some editors have built-in support for converting tab indentation to spaces “under the hood” for Python code in order to conform to PEP8. So, you can type a tab character and they will automatically convert it to 4 spaces. You can control the amount of spaces used to replace one tab character or you can decide to keep the tab character altogether and prevent automatic conversion.

You can also tell the editor to show non-printable characters if you are ever unsure what character exactly is being used.
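The following sketch shows what mixing tabs and spaces looks like to Python 3. The source string is a made-up two-line block whose lines would appear aligned in an editor with 8-column tab stops; compiling it with the built-in compile() function is only a convenient way to trigger the check without writing a file.

```python
# Two indented lines that look aligned with 8-column tab stops:
# the first uses eight spaces, the second a single tab character.
source = "if True:\n        x = 1\n\ty = 2\n"

try:
    compile(source, "<example>", "exec")
    outcome = "compiled without errors"
except TabError as error:
    # TabError is a subclass of IndentationError (and of SyntaxError).
    outcome = f"TabError: {error.msg}"

print(outcome)
```

Because the two lines only line up for one particular tab width, Python 3 refuses to guess and rejects the code.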

There are more complex rules on indenting single units of code that continue over several lines, e.g. function,

list or dictionary definitions can all take more than one line. The preferred way of wrapping such long lines is by

using Python’s implied line continuation inside delimiters such as parentheses (()), brackets ([]) and braces

({}), or a hanging indent.

# Add an extra level of indentation (extra 4 spaces) to distinguish arguments from the rest of the code that follows
def long_function_name(
        var_one, var_two, var_three,
        var_four):
    print(var_one)


# Aligned with opening delimiter
foo = long_function_name(var_one, var_two,
                         var_three, var_four)

# Use hanging indents to add an indentation level like paragraphs of text where all the lines in a paragraph are
# indented except the first one
foo = long_function_name(
    var_one, var_two,
    var_three, var_four)

# Using hanging indent again, but closing bracket aligned with the first non-blank character of the previous line
a_long_list = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[0.33, 0.66, 1], [0.66, 0.83, 1], [0.77, 0.88, 1]]
    ]

# Using hanging indent again, but closing bracket aligned with the start of the multiline construct
a_long_list2 = [
    1,
    2,
    3,
    # ...
    79
]

More details on good and bad practices for continuation lines can be found in PEP 8 guideline on indentation.

Maximum Line Length

All lines should be at most 79 characters long; for lines containing comments or docstrings (to be covered later) the line length limit should be 72 - see this discussion for reasoning behind these numbers. Some teams strongly prefer a longer line length, and seem to have settled on the length of 100. Long lines of code can be broken over multiple lines by wrapping expressions in delimiters, as mentioned above (preferred method), or using a backslash (\) at the end of the line to indicate line continuation (slightly less preferred method).

# Using delimiters ( ) to wrap a multi-line expression
if (a == True and
        b == False):
    ...  # body of the if statement

# Using a backslash (\) for line continuation
if a == True and \
        b == False:
    ...  # body of the if statement
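A line length limit is easy to check mechanically. The sketch below is a hypothetical helper, find_long_lines(), not part of any tool used in this course; its default limit of 79 is the maximum line length PEP 8 gives for code, and can be changed to whatever your team has agreed on.

```python
def find_long_lines(text, limit=79):
    """Return (line_number, length) pairs for lines longer than limit."""
    return [
        (number, len(line))
        for number, line in enumerate(text.splitlines(), start=1)
        if len(line) > limit
    ]


# A made-up two-line sample: the second line is 93 characters long.
sample = "short = 1\n" + "long_name = " + "1 + " * 20 + "1\n"

print(find_long_lines(sample))             # [(2, 93)]
print(find_long_lines(sample, limit=100))  # []
```

In practice an editor or a linter reports this for you, but the check itself is no more complicated than this.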

Should a Line Break Before or After a Binary Operator?

Lines should break before binary operators so that the operators do not get scattered across different columns on the screen. In the example below, the eye does not have to do the extra work to tell which items are added and which are subtracted:

# PEP 8 compliant - easy to match operators with operands

income = (gross_wages
          + taxable_interest
          + (dividends - qualified_dividends)
          - ira_deduction
          - student_loan_interest)

Blank Lines

Top-level function and class definitions should be surrounded with two blank lines. Method definitions inside a class should be surrounded by a single blank line. You can use blank lines in functions, sparingly, to indicate logical sections.
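As an illustration of these blank line rules, here is a small made-up module. The names circle_area, Rectangle and describe are hypothetical and exist only to show where the blank lines go.

```python
"""Hypothetical module used only to illustrate blank line conventions."""
import math


def circle_area(radius):
    """Return the area of a circle."""
    return math.pi * radius ** 2


class Rectangle:
    """A rectangle defined by its width and height."""

    def __init__(self, width, height):
        self.width = width
        self.height = height

    def area(self):
        """Return the area of the rectangle."""
        return self.width * self.height


def describe(shape_area):
    """Return a short, formatted description of an area."""
    rounded = round(shape_area, 2)

    # A single blank line separates the calculation from the formatting.
    return f"area = {rounded}"
```

Two blank lines surround each top-level definition, one blank line separates the methods inside the class, and a single blank line inside describe() marks a logical section.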

Whitespace in Expressions and Statements

Avoid extraneous whitespace in the following situations:

- Immediately inside parentheses, brackets or braces

# PEP 8 compliant:
my_function(colour[1], {id: 2})

# Not PEP 8 compliant:
my_function( colour[ 1 ], { id: 2 } )

- Immediately before a comma, semicolon, or colon (unless doing slicing where the colon acts like a binary operator, in which case it should have equal amounts of whitespace on either side)

# PEP 8 compliant:
if x == 4: print(x, y); x, y = y, x

# Not PEP 8 compliant:
if x == 4 : print(x , y); x , y = y, x

- Immediately before the open parenthesis that starts the argument list of a function call

# PEP 8 compliant:
my_function(1)

# Not PEP 8 compliant:
my_function (1)

- Immediately before the open parenthesis that starts an indexing or slicing

# PEP 8 compliant:
my_dct['key'] = my_lst[id]
first_char = my_str[:1]

# Not PEP 8 compliant:
my_dct ['key'] = my_lst [id]
first_char = my_str [:1]

- More than one space around an assignment (or other) operator to align it with another

# PEP 8 compliant:
x = 1
y = 2
student_loan_interest = 3

# Not PEP 8 compliant:
x                     = 1
y                     = 2
student_loan_interest = 3

- Avoid trailing whitespace anywhere - it is not necessary and can cause errors. For example, if you use backslash (\) for continuation lines and have a space after it, the continuation line will not be interpreted correctly.

- Surround these binary operators with a single space on either side: assignment (=), augmented assignment (+=, -= etc.), comparisons (==, <, >, !=, <>, <=, >=, in, not in, is, is not), booleans (and, or, not).

- Don’t use spaces around the = sign when used to indicate a keyword argument assignment or to indicate a default value for an unannotated function parameter

# PEP 8 compliant use of spaces around = for variable assignment
axis = 'x'
angle = 90
size = 450
name = 'my_graph'

# PEP 8 compliant use of no spaces around = for keyword argument assignment in a function call
my_function(
    1,
    2,
    axis=axis,
    angle=angle,
    size=size,
    name=name)

String Quotes

In Python, single-quoted strings and double-quoted strings are the same. PEP8 does not make a recommendation for this apart from picking one rule and consistently sticking to it. When a string contains single or double quote characters, use the other one to avoid backslashes in the string as it improves readability. You have no choice but to escape quotes if your string uses both, though.

# Escaping quotes makes this harder to read:

cocktail = 'Planter\'s Punch'

mlk_quote = "\"I have a dream\", he said."

# This is easier to read:

cocktail = "Planter's Punch"

mlk_quote = '"I have a dream", he said.'

# For mixed characters, you need to escape one of quote types.

mixed = 'It\'s a so-called "cat".'

Naming Conventions

There are a lot of different naming styles in use, including:

- b (single lowercase letter)

- B (single uppercase letter)

- lowercase

- lower_case_with_underscores

- UPPERCASE

- UPPER_CASE_WITH_UNDERSCORES

- CapitalisedWords (or PascalCase) (note: when using acronyms in CapitalisedWords, capitalise all the letters of the acronym, e.g. HTTPServerError)

- camelCase (differs from CapitalisedWords/PascalCase by the initial lowercase character)

- Capitalised_Words_With_Underscores

As with other style guide recommendations - consistency is key. Pick one and stick to it, or follow the one already established if joining a project mid-way. Some things to be wary of when naming things in the code:

- Avoid using the characters ‘l’ (lowercase letter L), ‘O’ (uppercase letter o), or ‘I’ (uppercase letter i) as single character variable names. In some fonts, these characters are indistinguishable from the numerals one and zero. When tempted to use ‘l’, use ‘L’ instead.

- Avoid using non-ASCII (e.g. UNICODE) characters for identifiers

- If your audience is international and English is the common language, try to use English words for identifiers and comments whenever possible but try to avoid abbreviations/local slang as they may not be understood by everyone. Also consider sticking with either ‘American’ or ‘British’ English spellings and try not to mix the two.

Function, Variable, Class, Module, Package Naming

- Function and variable names should be lowercase, with words separated by underscores as necessary to improve readability.

- Class names should normally use the CapitalisedWords convention.

- Modules should have short, all-lowercase names. Underscores can be used in the module name if it improves readability.

- Packages should also have short, all-lowercase names, although the use of underscores is discouraged.

A more detailed guide on naming functions, modules, classes and variables is available from PEP8.
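The short sketch below applies these naming conventions side by side. All the names in it (MAX_PATIENTS, PatientRecord, load_records and so on) are invented for illustration; the upper case constant follows the PEP 8 recommendation for constants, which is not covered in the list above.

```python
MAX_PATIENTS = 60  # constant: UPPER_CASE_WITH_UNDERSCORES


class PatientRecord:  # class: CapitalisedWords
    """Daily inflammation readings for a single patient."""

    def __init__(self, patient_id, daily_readings):
        # Instance variables: lowercase with underscores.
        self.patient_id = patient_id
        self.daily_readings = daily_readings

    def mean_reading(self):  # method: lowercase with underscores
        """Return the mean of the patient's daily readings."""
        return sum(self.daily_readings) / len(self.daily_readings)


def load_records(raw_rows):  # function: lowercase with underscores
    """Build one PatientRecord per row, up to MAX_PATIENTS rows."""
    return [
        PatientRecord(index, row)
        for index, row in enumerate(raw_rows[:MAX_PATIENTS])
    ]


records = load_records([[1, 2, 3], [4, 4, 4]])
print(records[0].mean_reading())
```

A file holding this code would itself get a short, all-lowercase module name, for example patients.py.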

Comments

Comments allow us to provide the reader with additional information on what the code does - reading and understanding source code is slow, laborious and can lead to misinterpretation, plus it is always a good idea to keep others in mind when writing code. A good rule of thumb is to assume that someone will always read your code at a later date, and this includes a future version of yourself. It can be easy to forget why you did something a particular way in six months’ time. Write comments as complete sentences and in English unless you are 100% sure the code will never be read by people who don’t speak your language.

The Good, the Bad, and the Ugly Comments

As a side reading, check out the ‘Putting comments in code: the good, the bad, and the ugly’ blog post. Remember - a comment should answer the “why” question. Occasionally the “what” question. The “how” question should be answered by the code itself.

Block comments generally apply to some (or all) code that follows them, and are indented to the same level as that

code. Each line of a block comment starts with a # and a single space (unless it is indented text inside the comment).

def fahr_to_cels(fahr):
    # Block comment example: convert temperature in Fahrenheit to Celsius
    cels = (fahr - 32) * (5 / 9)
    return cels

An inline comment is a comment on the same line as a statement. Inline comments should be separated by at least two

spaces from the statement. They should start with a # and a single space and should be used sparingly.

def fahr_to_cels(fahr):
    cels = (fahr - 32) * (5 / 9)  # Inline comment example: convert temperature in Fahrenheit to Celsius
    return cels

Python doesn’t have any multi-line comments, like you may have seen in other languages like C++ or Java. However, there are ways to do it using docstrings as we’ll see in a moment.

The reader should be able to understand a single function or method from its code and its comments, and should not have to look elsewhere in the code for clarification. The kind of things that need to be commented are:

- Why certain design or implementation decisions were adopted, especially in cases where the decision may seem counter-intuitive

- The names of any algorithms or design patterns that have been implemented

- The expected format of input files or database schemas

However, there are some restrictions. Comments that simply restate what the code does are redundant, and comments must be accurate and updated with the code, because an incorrect comment causes more confusion than no comment at all.
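To contrast a redundant comment with a useful one, consider the two hypothetical functions below. They do exactly the same thing; only the comments differ. The reason given in the second comment (a sensor calibration reading) is an invented scenario.

```python
def skip_first_redundant(readings):
    # Return the list without its first element.
    return readings[1:]


def skip_first_explained(readings):
    # The first reading is taken while the sensor is still calibrating
    # (an assumption made for this example), so it is excluded from
    # any later analysis.
    return readings[1:]


print(skip_first_explained([99, 3, 4, 5]))  # [3, 4, 5]
```

The first comment only repeats what the slice already says, while the second records a decision that cannot be recovered from the code alone.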

Exercise: Improve Code Style of Our Project

Let’s look at improving the coding style of our project. First create a new feature branch called style-fixes off our develop branch and switch to it (from the project root):

$ git checkout develop
$ git checkout -b style-fixes

Next look at the inflammation-analysis.py file and identify where the above guidelines have not been followed. Tip: most IDEs can be configured to automatically highlight most code style violations! Fix the discovered inconsistencies and commit them to the feature branch.

Solution

Ideally, modify inflammation-analysis.py with an editor that helpfully marks inconsistencies with coding guidelines by underlining them. You can also use pylint from the command line. There are a few things to fix in inflammation-analysis.py, for example:

Line 24 in inflammation-analysis.py is too long and not very readable. A better style would be to use multiple lines and hanging indent, with the closing brace ‘}’ aligned either with the first non-whitespace character of the last line of the list or the first character of the line that starts the multiline construct, or simply moved to the end of the previous line. All three acceptable modifications are shown below.

# Using hanging indent, with the closing '}' aligned with the first non-blank character of the previous line
view_data = {
    'average': models.daily_mean(inflammation_data),
    'max': models.daily_max(inflammation_data),
    'min': models.daily_min(inflammation_data)
    }

# Using hanging indent, with the closing '}' aligned with the start of the multiline construct
view_data = {
    'average': models.daily_mean(inflammation_data),
    'max': models.daily_max(inflammation_data),
    'min': models.daily_min(inflammation_data)
}

# Using hanging indent where all the lines of the multiline construct are indented except the first one
view_data = {
    'average': models.daily_mean(inflammation_data),
    'max': models.daily_max(inflammation_data),
    'min': models.daily_min(inflammation_data)}

Variable ‘InFiles’ in inflammation-analysis.py uses CapitalisedWords naming convention which is recommended for class names but not variable names. By convention, variable names should be in lowercase with optional underscores so you should rename the variable ‘InFiles’ to, e.g., ‘infiles’ or ‘in_files’.

There is an extra blank line on line 20 in inflammation-analysis.py. Normally, you should not use blank lines in the middle of the code unless you want to separate logical units - in which case only one blank line is used.

Only one blank line separates the end of the definition of function main from the rest of the code on line 30 in inflammation-analysis.py - there should be two blank lines. Your editor might have pointed this out to you.

Finally, let’s add and commit our changes to the feature branch. We will check the status of our working directory first.

$ git status

On branch style-fixes
Changes not staged for commit:
  (use "git add <file>..." to update what will be committed)
  (use "git restore <file>..." to discard changes in working directory)
        modified:   inflammation-analysis.py

no changes added to commit (use "git add" and/or "git commit -a")

Git tells us we are on branch style-fixes and that we have unstaged and uncommitted changes to inflammation-analysis.py. Let’s commit them to the local repository.

$ git add inflammation-analysis.py
$ git commit -m "Code style fixes."

Optional Exercise: Improve Code Style of Your Other Python Projects

If you have another Python project, check to which extent it conforms to PEP8 coding style. Or if you have projects in another programming language: see how they conform to the coding style guide of that language.

Documentation Strings aka Docstrings

If the first thing in a function is a string that is not assigned to a variable, that string is attached to the function as its documentation. Consider the following code implementing function for calculating the nth Fibonacci number:

def fibonacci(n):
    """Calculate the nth Fibonacci number.

    A recursive implementation of Fibonacci array elements.

    :param n: integer
    :raises ValueError: raised if n is less than zero
    :returns: Fibonacci number
    """
    if n < 0:
        raise ValueError('Fibonacci is not defined for N < 0')
    if n == 0:
        return 0
    if n == 1:
        return 1

    return fibonacci(n - 1) + fibonacci(n - 2)

Note here we are explicitly documenting our input variables, what is returned by the function, and also when the

ValueError exception is raised. Along with a helpful description of what the function does, this information can

act as a contract for readers to understand what to expect in terms of behaviour when using the function,

as well as how to use it.

A special comment string like this is called a docstring. We do not need to use triple quotes when writing one, but

if we do, we can break the text across multiple lines. Docstrings can also be used at the start of a Python module (a file

containing a number of Python functions) or at the start of a Python class (containing a number of methods) to list

their contents as a reference. You should not confuse docstrings with comments though - docstrings are context-dependent and should only

be used in specific locations (e.g. at the top of a module and immediately after class and def keywords as mentioned).

Using triple quoted strings in locations where they will not be interpreted as docstrings or

using triple quotes as a way to ‘quickly’ comment out an entire block of code is considered bad practice.

In our example case, we used the Sphinx/ReadTheDocs docstring style formatting for the param, raises and returns - other docstring formats exist as well.
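As an example of one of those other formats, here is a sketch of a docstring written in the NumPy documentation style. The function itself is an illustrative variant of the temperature conversion shown earlier, with an added range check that is an assumption made for this example so that the docstring has something to document under ‘Raises’.

```python
def fahr_to_cels(fahr):
    """Convert a temperature from Fahrenheit to Celsius.

    Parameters
    ----------
    fahr : float
        Temperature in degrees Fahrenheit.

    Returns
    -------
    float
        Temperature in degrees Celsius.

    Raises
    ------
    ValueError
        If the temperature is below absolute zero (-459.67 °F).
    """
    if fahr < -459.67:
        raise ValueError('Temperature is below absolute zero')
    return (fahr - 32) * 5 / 9


print(fahr_to_cels(212))  # 100.0
```

The information is the same as in the Sphinx style (parameters, return value, exceptions); only the layout differs. Whichever format you choose, use it consistently across the project.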

Python PEP 257 - Recommendations for Docstrings

PEP 257 is another one of Python Enhancement Proposals and this one deals with docstring conventions to standardise how they are used. For example, on the subject of module-level docstrings, PEP 257 says:

The docstring for a module should generally list the classes, exceptions and functions (and any other objects) that are exported by the module, with a one-line summary of each. (These summaries generally give less detail than the summary line in the object's docstring.) The docstring for a package (i.e., the docstring of the package's `__init__.py` module) should also list the modules and subpackages exported by the package.

Note that the __init__.py file used to be a required part of a package (pre Python 3.3) where a package was typically implemented as a directory containing an __init__.py file which got implicitly executed when a package was imported.

So, at the beginning of a module file we can just add a docstring explaining the nature of a module. For example, if

fibonacci() was included in a module with other functions, our module could have at the start of it:

"""A module for generating numerical sequences of numbers that occur in nature.

Functions:

fibonacci - returns the Fibonacci number for a given integer

golden_ratio - returns the golden ratio number to a given Fibonacci iteration

...

"""

...

The docstring for a function or a module is returned when

calling the help function and passing its name - for example from the interactive Python console/terminal available

from the command line or when rendering code documentation online

(e.g. see Python documentation).

Some editors also display the docstring for a function/module in a little help popup window when using tab-completion.

help(fibonacci)
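Besides help(), a docstring can also be read programmatically. The sketch below uses a made-up function, daily_range(), to show two standard ways of doing so: the __doc__ attribute, which holds the raw string, and inspect.getdoc() from the standard library, which also removes the indentation.

```python
import inspect


def daily_range(values):
    """Return the difference between the largest and smallest value.

    :param values: a non-empty sequence of numbers
    :returns: max(values) - min(values)
    """
    return max(values) - min(values)


raw = daily_range.__doc__            # the string exactly as written
clean = inspect.getdoc(daily_range)  # same text with indentation removed

print(clean)
```

This is what tools such as documentation generators and editor pop-ups rely on, which is why a docstring has to be the first statement in the function body.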

Exercise: Fix the Docstrings

Look into models.py and improve docstrings for functions daily_mean, daily_min, daily_max. Commit those changes to feature branch style-fixes.

Solution

For example, the improved docstrings for the above functions would contain explanations for parameters and return values.

def daily_mean(data):
    """Calculate the daily mean of a 2D inflammation data array for each day.

    :param data: A 2D data array with inflammation data
        (each row contains measurements for a single patient across all days).
    :returns: An array of mean values of measurements for each day.
    """
    return np.mean(data, axis=0)


def daily_max(data):
    """Calculate the daily maximum of a 2D inflammation data array for each day.

    :param data: A 2D data array with inflammation data
        (each row contains measurements for a single patient across all days).
    :returns: An array of max values of measurements for each day.
    """
    return np.max(data, axis=0)


def daily_min(data):
    """Calculate the daily minimum of a 2D inflammation data array for each day.

    :param data: A 2D data array with inflammation data
        (each row contains measurements for a single patient across all days).
    :returns: An array of minimum values of measurements for each day.
    """
    return np.min(data, axis=0)

Once we are happy with the modifications, as usual before staging and committing our changes, we check the status of our working directory:

$ git status

On branch style-fixes
Changes not staged for commit:
  (use "git add <file>..." to update what will be committed)
  (use "git restore <file>..." to discard changes in working directory)
        modified:   inflammation/models.py

no changes added to commit (use "git add" and/or "git commit -a")

As expected, Git tells us we are on branch style-fixes and that we have unstaged and uncommitted changes to inflammation/models.py. Let’s commit them to the local repository.

$ git add inflammation/models.py
$ git commit -m "Docstring improvements."

In the previous exercises, we made some code improvements on feature branch style-fixes. We have committed our

changes locally but have not pushed this branch remotely for others to have a look at our code before we merge it

onto the develop branch. Let’s do that now, namely:

- push style-fixes to Bitbucket

- merge style-fixes into develop (once we are happy with the changes)

- push updates to develop branch to Bitbucket (to keep it up to date with the latest developments)

- finally, merge develop branch into the stable main branch

Here is a set of commands that will achieve the above set of actions (remember to use git status often in between other Git commands to double check which branch you are on and its status):

$ git push -u origin style-fixes

$ git checkout develop

$ git merge style-fixes

$ git push origin develop

$ git checkout main

$ git merge develop

$ git push origin main

Typical Code Development Cycle

What you’ve done in the exercises in this episode mimics a typical software development workflow - you work locally on code on a feature branch, test it to make sure it works correctly and as expected, then record your changes using version control and share your work with others via a centrally backed-up repository. Other team members work on their feature branches in parallel and similarly share their work with colleagues for discussions. Different feature branches from around the team get merged onto the development branch, often in small and quick development cycles. After further testing and verifying that no code has been broken by the new features - the development branch gets merged onto the stable main branch, where new features finally resurface to end-users in bigger “software release” cycles.

Key Points

Always assume that someone else will read your code at a later date, including yourself.

Community coding conventions help you create more readable software projects that are easier to contribute to.

Python Enhancement Proposals (or PEPs) describe a recommended convention or specification for how to do something in Python.

Style checking to ensure code conforms to coding conventions is often part of IDEs.

Consistency with the style guide is important - whichever style you choose.

Verifying Code Style Using Linters

Overview

Teaching: 15 min

Exercises: 10 min

Questions

What tools can help with maintaining a consistent code style?

How can we automate code style checking?

Objectives

Use code linting tools to verify a program’s adherence to a Python coding style convention.

Verifying Code Style Using Linters

Editors or integrated development environments (IDEs) can help us format our Python code in a consistent style.

This aids reusability, since consistent-looking code is easier to read and understand, and therefore easier to modify.

We can also use tools, called code linters, to identify consistency issues and present them in a report-style format.

Linters analyse source code to identify and report on stylistic and even programming errors. Let’s look at a very well

used one of these called pylint.

First, let’s ensure we are on the style-fixes branch once again.

$ git checkout style-fixes

Pylint is just a Python package so we can install it in our virtual environment using:

$ pip3 install pylint

$ pylint --version

We should see the version of Pylint, something like:

pylint 2.13.3

...

Don’t forget to record this new package in the list of packages for your virtual Python environment (e.g. requirements.txt), if you use one.

Pylint is a command-line tool that can help our code in many ways:

- Check PEP8 compliance: whilst in-IDE context-sensitive highlighting helps us stay consistent with PEP8 as we write code, this tool provides a full report

- Perform basic error detection: Pylint can look for certain Python type errors

- Check variable naming conventions: Pylint often goes beyond PEP8 to include other common conventions, such as naming variables outside of functions in upper case

- Customisation: you can specify which errors and conventions you wish to check for, and those you wish to ignore
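As a small illustration of the customisation point, a check can also be silenced for a single line from within the source file. The sketch below is hypothetical (the module and the function `describe_platform` are not part of our project) and assumes Pylint's `# pylint: disable=...` comment directive, here used to ignore one `unused-import` message while leaving the check active everywhere else.

```python
"""Hypothetical module, used only to illustrate an in-line Pylint directive."""
import os  # pylint: disable=unused-import
import sys


def describe_platform():
    """Return a short description of the platform the interpreter runs on."""
    return f"Running on {sys.platform}"


print(describe_platform())
```

Silencing a message is best kept for cases where the flagged code is intentional; otherwise it is usually better to fix the underlying issue.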

Pylint can also identify code smells.

How Does Code Smell?

There are many ways that code can exhibit bad design whilst not breaking any rules and working correctly. A code smell is a characteristic that indicates that there is an underlying problem with source code, e.g. large classes or methods, methods with too many parameters, duplicated statements in both if and else blocks of conditionals, etc. They aren’t functional errors in the code, but rather are certain structures that violate principles of good design and impact design quality. They can also indicate that code is in need of maintenance and refactoring.

The phrase has its origins in Chapter 3 “Bad smells in code” by Kent Beck and Martin Fowler in Fowler, Martin (1999). Refactoring. Improving the Design of Existing Code. Addison-Wesley. ISBN 0-201-48567-2.
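To make one of the smells mentioned above concrete, here is a minimal sketch of duplicated statements in both the if and else blocks of a conditional, followed by one possible refactoring. The function names and the threshold are made up for illustration, and this is not a claim about which smells Pylint itself reports.

```python
def report_smelly(value, threshold):
    """Smelly version: the same statements are repeated in both branches."""
    if value > threshold:
        label = "high"
        message = f"Reading {value} is {label}"
        return message.upper()
    else:
        label = "normal"
        message = f"Reading {value} is {label}"
        return message.upper()


def report_refactored(value, threshold):
    """Refactored version: only the part that differs stays in the conditional."""
    label = "high" if value > threshold else "normal"
    message = f"Reading {value} is {label}"
    return message.upper()


# Both versions behave identically; only the design differs.
for reading in (3, 12):
    assert report_smelly(reading, 10) == report_refactored(reading, 10)

print(report_refactored(12, 10))  # READING 12 IS HIGH
```

Both functions work correctly, which is exactly why this counts as a smell rather than an error: the problem only shows up later, when a change has to be made in two places instead of one.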

Pylint recommendations are given as warnings or errors, and Pylint also scores the code with an overall mark.

We can look at a specific file (e.g. inflammation-analysis.py), or a module

(e.g. inflammation). Let’s look at our inflammation module and code inside it (namely models.py and views.py).

From the project root do:

$ pylint inflammation

You should see an output similar to the following:

************* Module inflammation.models

inflammation/models.py:5:82: C0303: Trailing whitespace (trailing-whitespace)

inflammation/models.py:6:66: C0303: Trailing whitespace (trailing-whitespace)

inflammation/models.py:34:0: C0305: Trailing newlines (trailing-newlines)

************* Module inflammation.views

inflammation/views.py:4:0: W0611: Unused numpy imported as np (unused-import)

------------------------------------------------------------------

Your code has been rated at 8.00/10 (previous run: 8.00/10, +0.00)

Your own outputs of the above commands may vary depending on how you have implemented and fixed the code in previous exercises and the coding style you have used.

The five-character codes, such as C0303, are unique identifiers for warnings, with the first character indicating the type of warning. There are five different types of warnings that Pylint looks for, and you can get a summary of them by doing:

$ pylint --long-help

Near the end you’ll see:

Output:

Using the default text output, the message format is :

MESSAGE_TYPE: LINE_NUM:[OBJECT:] MESSAGE

There are 5 kind of message types :

* (C) convention, for programming standard violation

* (R) refactor, for bad code smell

* (W) warning, for python specific problems

* (E) error, for probable bugs in the code

* (F) fatal, if an error occurred which prevented pylint from doing

further processing.

So for an example of a Pylint Python-specific warning, see the “W0611: Unused numpy imported as np (unused-import)” warning.
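The way the first character of each code maps onto a message type can be sketched in a few lines of Python. This is only an illustration: the helper `count_message_types` is hypothetical, and it assumes report lines of the form `path:line:column: CODE: message`, as in the sample output shown earlier.

```python
from collections import Counter

# Message categories as listed in the Pylint help text above.
MESSAGE_TYPES = {
    "C": "convention",
    "R": "refactor",
    "W": "warning",
    "E": "error",
    "F": "fatal",
}

# Sample lines copied from the Pylint report shown earlier.
SAMPLE_REPORT = """\
************* Module inflammation.models
inflammation/models.py:5:82: C0303: Trailing whitespace (trailing-whitespace)
inflammation/models.py:6:66: C0303: Trailing whitespace (trailing-whitespace)
inflammation/models.py:34:0: C0305: Trailing newlines (trailing-newlines)
************* Module inflammation.views
inflammation/views.py:4:0: W0611: Unused numpy imported as np (unused-import)
"""


def count_message_types(report):
    """Count messages per category, assuming 'path:line:column: CODE: text' lines."""
    counts = Counter()
    for line in report.splitlines():
        parts = line.split(": ", 2)
        if len(parts) < 3:
            continue  # skip module headers, separators and the score line
        category = MESSAGE_TYPES.get(parts[1][:1])
        if category is not None:
            counts[category] += 1
    return counts


print(dict(count_message_types(SAMPLE_REPORT)))  # {'convention': 3, 'warning': 1}
```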

It is important to note that while tools such as Pylint are great at giving you a starting point to consider how to improve your code, they won’t find everything that may be wrong with it.

How Does Pylint Calculate the Score?

The Python formula used is (with the variables representing numbers of each type of infraction and statement indicating the total number of statements):

10.0 - ((float(5 * error + warning + refactor + convention) / statement) * 10)

For example, with a total of 31 statements of models.py and views.py, with a count of the errors shown above, we get a score of 8.00. Note whilst there is a maximum score of 10, given the formula, there is no minimum score - it’s quite possible to get a negative score!
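The formula quoted above can be tried out directly in Python. The sketch below wraps it in a hypothetical helper, `pylint_score`, and the message counts are made up purely for illustration; they are not taken from our project.

```python
def pylint_score(error, warning, refactor, convention, statement):
    """Apply the scoring formula quoted above to the given message counts."""
    return 10.0 - ((float(5 * error + warning + refactor + convention) / statement) * 10)


# Made-up counts: 2 warnings and 3 convention messages over 25 statements.
print(pylint_score(error=0, warning=2, refactor=0, convention=3, statement=25))  # 8.0

# Made-up counts: errors weigh five times as much, so the score can go negative.
print(pylint_score(error=3, warning=0, refactor=0, convention=0, statement=10))  # -5.0
```

The second call shows why there is no minimum score: three errors in a ten-statement file are already enough to push the result below zero.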

Exercise: Further Improve Code Style of Our Project

Select and fix a few of the issues with our code that Pylint detected. Make sure you do not break the rest of the code in the process and that the code still runs. After making any changes, run Pylint again to verify you’ve resolved these issues.

Make sure you commit and push your updated list of packages, along with any files containing further code style improvements, and then merge these changes onto your develop and main branches.

$ git add requirements.txt # Depending on your virtual Python environment this file could have a different name

$ git commit -m "Added Pylint library"

$ git push origin style-fixes

$ git checkout develop

$ git merge style-fixes

$ git push origin develop

$ git checkout main

$ git merge develop

$ git push origin main

Optional Exercise: Improve Code Style of Your Other Projects

If you have a project you are working on or you worked on in the past, run it past Pylint to see what issues with your code are detected, if any.

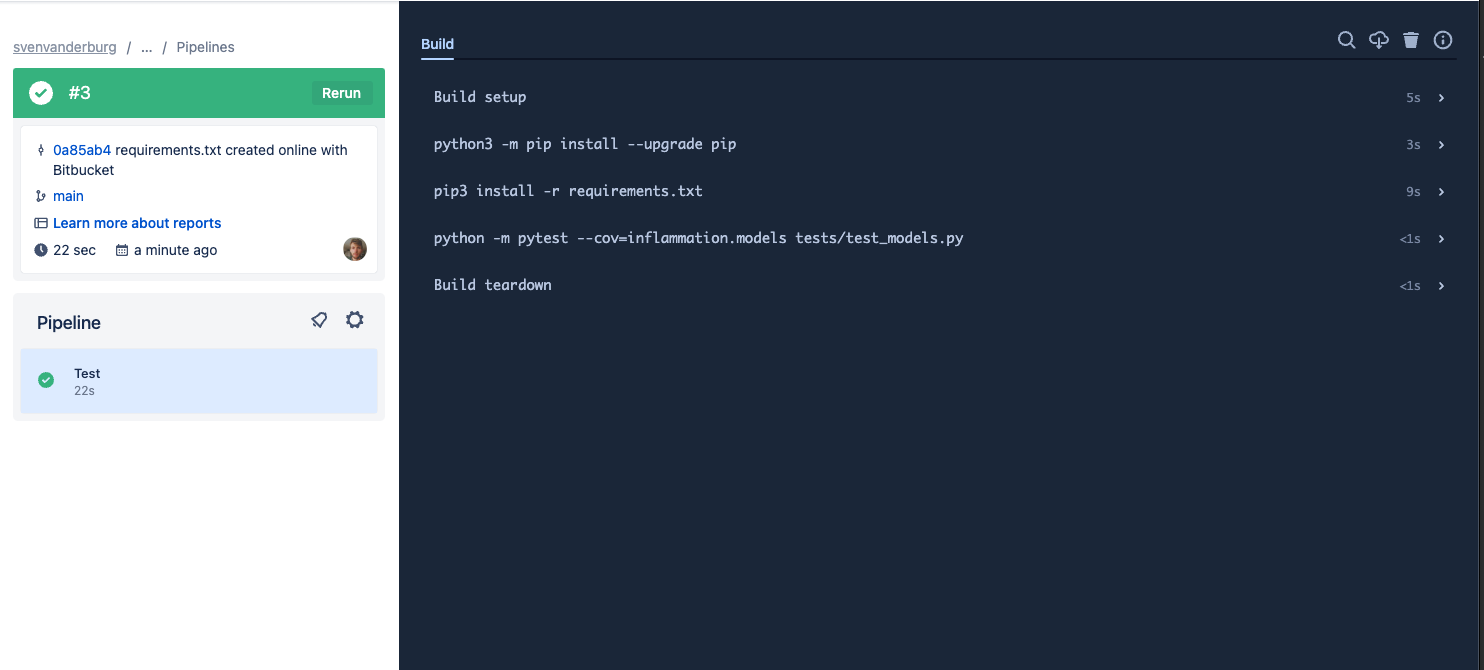

It is possible to automate these kinds of code checks with Bitbucket’s Continuous Integration service, Bitbucket Pipelines. We will get to that in the next section.

Key Points

Use linting tools on the command line (or via continuous integration) to automatically check your code style.

Section 2: Ensuring Correctness of Software at Scale

Overview

Teaching: 5 min

Exercises: 0 min

Questions

What should we do to ensure our code is correct?

Objectives

Introduce the testing tools, techniques, and infrastructure that will be used in this section.

We’ve just set up a suitable environment for the development of our software project and are ready to start coding. However, we want to make sure that the new code we contribute to the project is actually correct and is not breaking any of the existing code. So, in this section, we’ll look at testing approaches that can help us ensure that the software we write is behaving as intended, and how we can diagnose and fix issues once faults are found. Using such approaches requires us to change our practice of development. This can take time, but potentially saves us considerable time in the medium to long term by allowing us to more comprehensively and rapidly find such faults, as well as giving us greater confidence in the correctness of our code - so we should try and employ such practices early on. We will also make use of techniques and infrastructure that allow us to do this in a scalable, automated and more performant way as our codebase grows.

In this section we will:

- Make use of a test framework called Pytest, a free and open source Python library to help us structure and run automated tests.

- Design, write and run unit tests using Pytest to verify the correct behaviour of code and identify faults, making use of test parameterisation to increase the number of different test cases we can run.

- Automatically run a set of unit tests using Bitbucket Pipelines - a Continuous Integration infrastructure that allows us to automate tasks when things happen to our code, such as running those tests when a new commit is made to a code repository.

Key Points

Using testing requires us to change our practice of code development, but saves time in the long run by allowing us to more comprehensively and rapidly find faults in code, as well as giving us greater confidence in the correctness of our code.

The use of test techniques and infrastructures such as parameterisation and Continuous Integration can help scale and further automate our testing process.

Automatically Testing Software

Overview

Teaching: 30 min

Exercises: 20 min

Questions

Does the code we develop work the way it should do?

Can we (and others) verify these assertions for ourselves?

To what extent are we confident of the accuracy of results that appear in publications?

Objectives

Explain the reasons why testing is important

Describe the three main types of tests and what each is used for

Implement and run unit tests to verify the correct behaviour of program functions

Introduction

Being able to demonstrate that a process generates the right results is important in any field of research, whether it’s software generating those results or not. So when writing software we need to ask ourselves some key questions:

- Does the code we develop work the way it should do?

- Can we (and others) verify these assertions for ourselves?

- Perhaps most importantly, to what extent are we confident of the accuracy of results that software produces?

If we are unable to demonstrate that our software fulfills these criteria, why would anyone use it? Having well-defined tests for our software is useful for this, but manually testing software can prove an expensive process.

Automation can help, and automation where possible is a good thing - it enables us to define a potentially complex process in a repeatable way that is far less prone to error than manual approaches. Once defined, automation can also save us a lot of effort, particularly in the long run. In this episode we’ll look into techniques of automated testing to improve the predictability of a software change, make development more productive, and help us produce code that works as expected and produces desired results.

What Is Software Testing?

For the sake of argument, if each line we write has a 99% chance of being right, then a 70-line program will be wrong more than half the time. We need to do better than that, which means we need to test our software to catch these mistakes.
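The arithmetic behind this claim can be checked in a couple of lines. This is a back-of-the-envelope sketch that assumes each line is right or wrong independently of the others, which real code does not strictly satisfy.

```python
# Chance that a single line is right, as assumed in the text.
p_line_correct = 0.99
lines = 70

# Assuming lines are independent, the program is right only if every line is right.
p_program_correct = p_line_correct ** lines
p_program_wrong = 1 - p_program_correct

print(f"P(all {lines} lines right) = {p_program_correct:.3f}")   # 0.495
print(f"P(at least one line wrong) = {p_program_wrong:.3f}")     # 0.505
```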

We can and should extensively test our software manually, and manual testing is well-suited to testing aspects such as graphical user interfaces and reconciling visual outputs against inputs. However, even with a good test plan, manual testing is very time consuming and prone to error. Another style of testing is automated testing, where we write code that tests the functions of our software. Since computers are very good and efficient at automating repetitive tasks, we should take advantage of this wherever possible.

There are three main types of automated tests:

- Unit tests are tests for fairly small and specific units of functionality, e.g. determining that a particular function returns output as expected given specific inputs.

- Functional or integration tests work at a higher level, and test functional paths through your code, e.g. given some specific inputs, a set of interconnected functions across a number of modules (or the entire code) produce the expected result. These are particularly useful for exposing faults in how functional units interact.

- Regression tests make sure that your program’s output hasn’t changed, for example after making changes to your code to add new functionality or fix a bug.

For the purposes of this course, we’ll focus on unit tests. But the principles and practices we’ll talk about can be built on and applied to the other types of tests too.
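To give a first impression of what a unit test looks like, here is a minimal sketch. The function `column_means` is a hypothetical stand-in for a small unit of functionality (it is not part of our project), and each test checks that it returns the expected output for one specific input.

```python
def column_means(rows):
    """Hypothetical function under test: mean of each column in a list of rows."""
    n_rows = len(rows)
    return [sum(column) / n_rows for column in zip(*rows)]


def test_column_means_zeros():
    """All-zero input should give all-zero means."""
    assert column_means([[0, 0], [0, 0], [0, 0]]) == [0, 0]


def test_column_means_integers():
    """Known input with a result we can work out by hand."""
    assert column_means([[1, 2], [3, 4], [5, 6]]) == [3, 4]


# A test framework would discover and run the test_ functions for us;
# here we simply call them directly.
test_column_means_zeros()
test_column_means_integers()
print("all tests passed")
```

Each test covers one small, specific case, so a failure points directly at the behaviour that broke.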

Set Up a New Feature Branch for Writing Tests

We’re going to look at how to run some existing tests and also write some new ones, so let’s first ensure we’re on the develop branch we created earlier. Then we’ll create a new feature branch off the develop branch for our test writing work. We’ll call it test-suite, a common term used to refer to a set of tests:

$ git checkout develop

$ git branch test-suite

$ git checkout test-suite

Good practice is to write our tests around the same time we write our code on a feature branch. But since the code already exists, we’re creating a feature branch for just these extra tests. Git branches are designed to be lightweight, and where necessary, transient, and use of branches for even small bits of work is encouraged.

Later on, once we’ve finished writing these tests and are convinced they work properly, we’ll merge our test-suite branch back into develop.

Inflammation Data Analysis