Content from Introduction to Raster Data

Last updated on 2025-02-25

Overview

Questions

- What format should I use to represent my data?

- What are the main data types used for representing geospatial data?

- What are the main attributes of raster data?

Objectives

- Describe the difference between raster and vector data.

- Describe the strengths and weaknesses of storing data in raster format.

- Distinguish between continuous and categorical raster data and identify types of datasets that would be stored in each format.

Introduction

This episode introduces the two primary types of data models that are used to digitally represent the Earth's surface: raster and vector. After briefly introducing these data models, this episode focuses on the raster representation, describing some major features and types of raster data. This workshop covers how to work with both raster and vector datasets, so it is essential that we understand the basic structures of these types of data and the types of phenomena that they can represent.

Data Structures: Raster and Vector

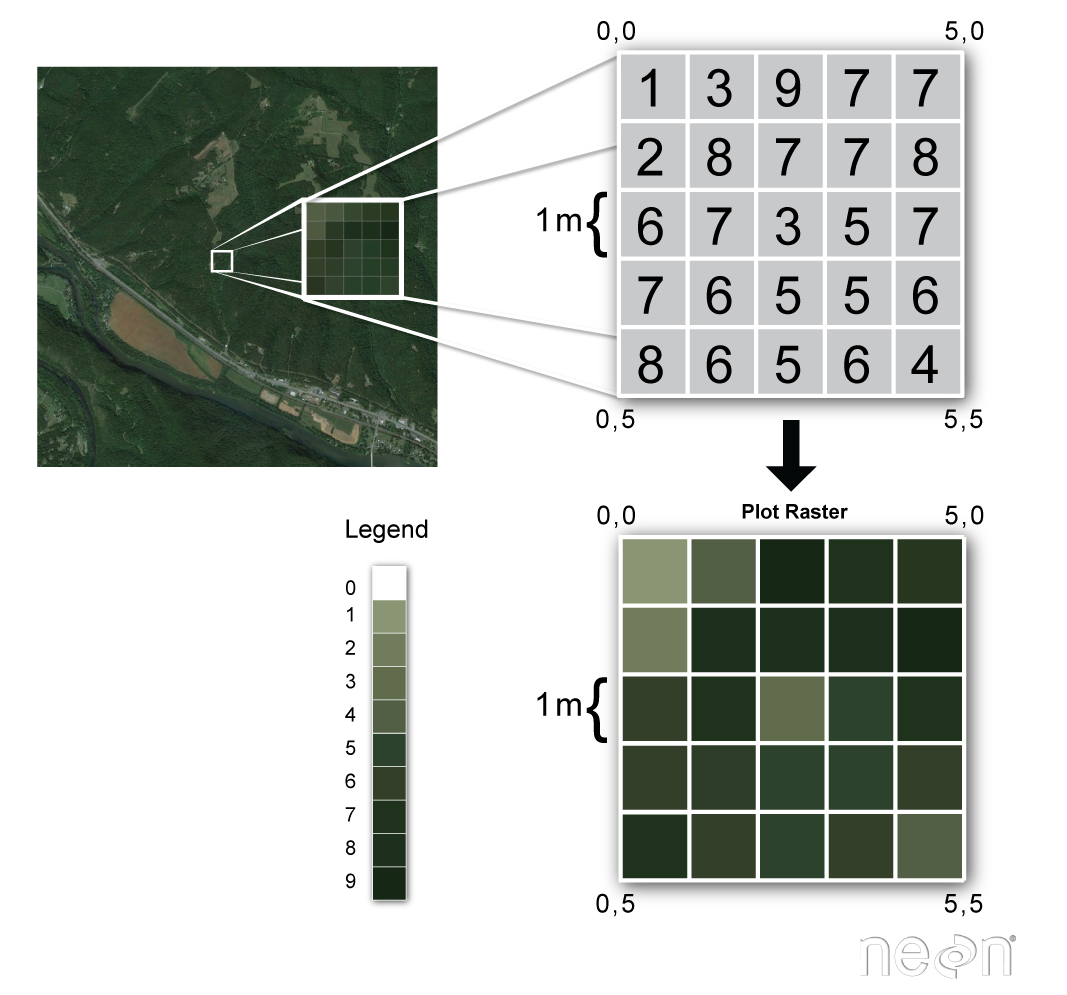

The two primary data models that are used to represent the Earth's surface digitally are raster and vector. Raster data is stored as a grid of values which are rendered on a map as pixels (also known as cells), where each pixel (or cell) represents a value for a portion of the Earth's surface. Examples of raster data are satellite images and aerial photographs. Data stored according to the vector data model are represented by points, lines, or polygons. Examples of vector data are points of interest, buildings (often represented as building footprints), and roads.

Representing phenomena as vector data allows you to attach attribute information to them. For instance, a polygon of a house can have multiple attributes describing its address, such as the street name, house number, zip code, and city. Vector data will be discussed in more detail in the next episode.

When working with spatial information, you will find that many phenomena can be represented as either vector or raster data. A house, for instance, can be represented by a set of raster cells that all have the same value, or by a vector polygon containing attribute information (figure 1). Which data model is used depends on the purpose for which the data is collected and intended to be used. As a rule of thumb, however, discrete phenomena such as buildings, roads, trees, and signs are represented as vector data, whereas continuous phenomena such as temperature, wind speed, and elevation are represented as raster data. Still, one of the things a spatial data analyst often has to do is convert data from vector to raster or the other way around. Keep in mind that such conversions can degrade data quality.
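To make the vector-to-raster conversion concrete, here is a minimal pure-Python sketch that rasterizes a polygon. A cell receives the value 1 if its center falls inside the polygon (tested with the ray-casting rule) and 0 otherwise. The house footprint and grid parameters are hypothetical, and coordinates are assumed to be in meters. In practice, a library such as rasterio provides functions for this.

```python
def point_in_polygon(x, y, polygon):
    """Return True if (x, y) lies inside the polygon (a list of (x, y) vertices)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the ring
        # Does a horizontal ray starting at (x, y) and pointing right cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(polygon, xmin, ymax, cell_size, n_rows, n_cols, value=1):
    """Burn `value` into every cell whose center lies inside the polygon; 0 elsewhere."""
    grid = []
    for row in range(n_rows):
        y_center = ymax - (row + 0.5) * cell_size  # row 0 is the top (northern) edge
        grid_row = []
        for col in range(n_cols):
            x_center = xmin + (col + 0.5) * cell_size
            grid_row.append(value if point_in_polygon(x_center, y_center, polygon) else 0)
        grid.append(grid_row)
    return grid


# Hypothetical house footprint: a 7 m x 5 m rectangle.
house = [(1.5, 1.5), (8.5, 1.5), (8.5, 6.5), (1.5, 6.5)]
grid = rasterize(house, xmin=0.0, ymax=10.0, cell_size=2.0, n_rows=5, n_cols=5)
for grid_row in grid:
    print(grid_row)

n_burned = sum(map(sum, grid))
print("vector area:", 7.0 * 5.0, "raster area:", n_burned * 2.0 ** 2)
```

With these made-up numbers, the 35 m² footprint is burned into 6 cells of 4 m² each, so the raster version covers only 24 m². A smaller cell size would reduce this error at the cost of more cells.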

Raster Data

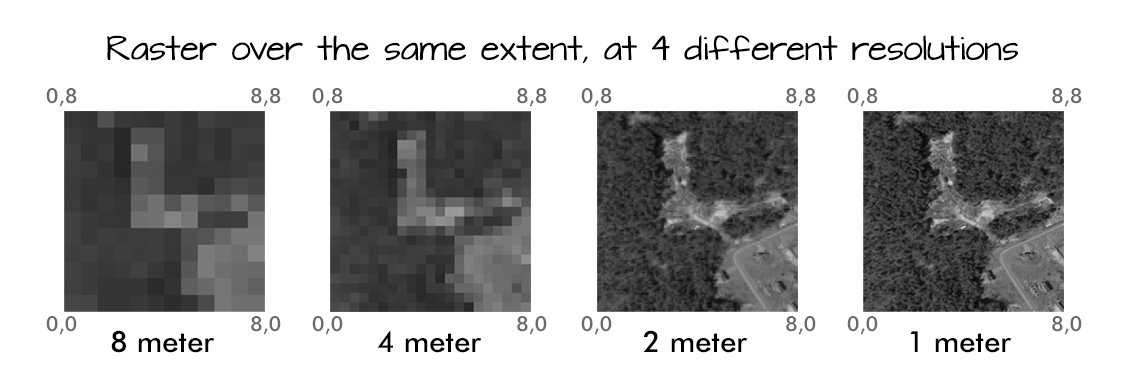

Raster data is any pixelated (or gridded) data where each pixel has a value and is associated with a specific geographic location. The value of a pixel can be continuous (e.g., elevation, temperature) or categorical (e.g., land-use type). If this sounds familiar, it is because this data structure is very common: it’s how we represent any digital image. A geospatial raster differs from a digital photo only in that it is accompanied by spatial information that connects the data to a particular location. This includes the raster’s extent and cell size, the number of rows and columns, and its Coordinate Reference System (CRS), which will be explained in episode 3 of this workshop.
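The sketch below illustrates, with hypothetical numbers, how the extent, cell size, and number of rows and columns turn a plain grid of values into a geospatial raster: together they fix the real-world position of every cell. It assumes square cells, no rotation, and a projected CRS measured in meters. Libraries such as rasterio store the same information as an affine transform.

```python
# Hypothetical 2 x 3 grid of elevation values, in meters.
elevation = [
    [310.0, 315.0, 320.0],
    [305.0, 312.0, 318.0],
]

# Spatial information that ties the grid to the ground (hypothetical values,
# in a projected CRS with meter units).
xmin, ymax = 732000.0, 4713000.0  # upper-left corner of the raster
cell_size = 1.0                   # width and height of one square cell

n_rows, n_cols = len(elevation), len(elevation[0])

# Extent as (xmin, ymin, xmax, ymax), derived from the corner, cell size and grid shape.
raster_extent = (xmin, ymax - n_rows * cell_size, xmin + n_cols * cell_size, ymax)


def cell_center(row, col):
    """Return the x, y coordinates of the center of cell (row, col)."""
    x = xmin + (col + 0.5) * cell_size
    y = ymax - (row + 0.5) * cell_size  # rows are counted downwards from the top edge
    return x, y


print(raster_extent)
print(cell_center(1, 2), elevation[1][2])
```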

Some examples of continuous rasters include:

- Precipitation maps.

- Elevation maps.

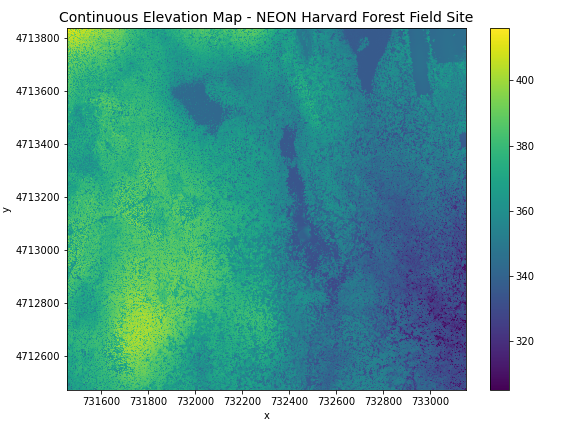

The map shown here is an elevation map of Harvard Forest derived from the NEON AOP LiDAR sensor. Elevation is represented as a continuous numeric variable in this map. The legend shows the continuous range of values in the data, from around 300 to 420 meters.

Some rasters contain categorical data where each pixel represents a discrete class such as a landcover type (e.g., “forest” or “grassland”) rather than a continuous value such as elevation or temperature. Some examples of classified maps include:

- Landcover / land-use maps.

- Elevation maps classified as low, medium, and high elevation.

The map above shows the contiguous United States with landcover as categorical data. Each color is a different landcover category. (Source: Homer, C.G., et al., 2015, Completion of the 2011 National Land Cover Database for the conterminous United States-Representing a decade of land cover change information. Photogrammetric Engineering and Remote Sensing, v. 81, no. 5, p. 345-354)
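An elevation map classified as low, medium, and high (one of the categorical examples listed above) can be derived from a continuous raster by assigning each cell to a class. In this minimal sketch, the elevation values are hypothetical (chosen within the roughly 300 to 420 m range of the Harvard Forest map), and the class breaks at 335 m and 375 m are arbitrary.

```python
from bisect import bisect_right

# Hypothetical continuous elevation values, in meters.
elevation = [
    [305.0, 340.0, 395.0],
    [330.0, 360.0, 415.0],
]

breaks = [335.0, 375.0]             # arbitrary class boundaries, in meters
labels = ["low", "medium", "high"]  # one more label than there are breaks


def classify(value):
    """Map a continuous value to a class label; a value equal to a break goes to the upper class."""
    return labels[bisect_right(breaks, value)]


classes = [[classify(v) for v in row] for row in elevation]
print(classes)
```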

Advantages and Disadvantages

With your neighbor, brainstorm potential advantages and disadvantages of storing data in raster format. Add your ideas to the Etherpad. The instructor will discuss and add any points that weren’t brought up in the small group discussions.

Raster data has some important advantages:

- representation of continuous surfaces

- potentially very high levels of detail

- data is ‘unweighted’ across its extent - the geometry doesn’t implicitly highlight features

- cell-by-cell calculations can be very fast and efficient

The downsides of raster data are:

- very large file sizes as cell size gets smaller

- currently popular formats don’t embed metadata well (more on this later!)

- can be difficult to represent complex information
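The first downside follows from simple arithmetic: dividing the cell size by a factor k multiplies the number of cells by k². This back-of-the-envelope sketch assumes a hypothetical 10 km × 10 km single-band raster that stores one 4-byte value per cell without compression.

```python
# Hypothetical 10 km x 10 km study area stored as a single band of
# 4-byte (32-bit) values with no compression.
area_width_m = 10_000
area_height_m = 10_000
bytes_per_cell = 4

for cell_size_m in (30, 10, 1):
    # Whole cells that fit along each side of the area.
    n_cells = (area_width_m // cell_size_m) * (area_height_m // cell_size_m)
    size_mb = n_cells * bytes_per_cell / 1_000_000
    print(f"{cell_size_m:>2} m cells: {n_cells:>11,} cells, about {size_mb:,.1f} MB")
```

Going from 10 m to 1 m cells turns about 4 MB into about 400 MB. Real files are often compressed, so actual sizes will differ.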

Important Attributes of Raster Data

Extent

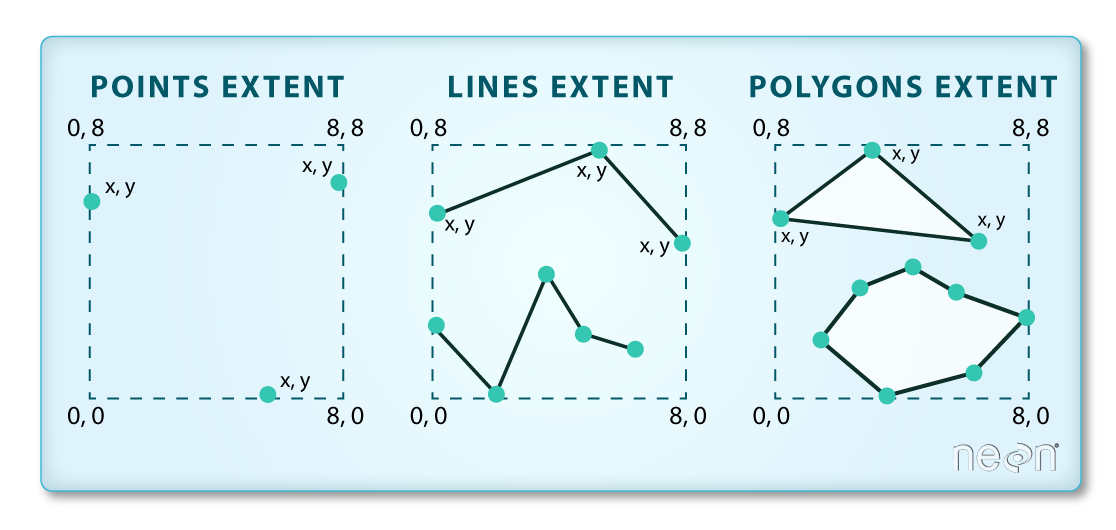

The spatial extent is the geographic area that the raster data covers. The spatial extent of an object represents the geographic edge or location that is the furthest north, south, east and west. In other words, extent represents the overall geographic coverage of the spatial object.
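In code, the extent of a set of features is simply the minimum and maximum x and y over all of their vertices. A minimal sketch with hypothetical coordinates:

```python
def bounding_box(coords):
    """Return the extent (xmin, ymin, xmax, ymax) of an iterable of (x, y) vertices."""
    xs, ys = zip(*coords)
    return min(xs), min(ys), max(xs), max(ys)


# Hypothetical features.
points = [(1, 2), (4, 7), (9, 3)]
line = [(0, 0), (5, 8), (10, 1)]

print(bounding_box(points))  # (1, 2, 9, 7)
print(bounding_box(line))    # (0, 0, 10, 8)
```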

Extent Challenge

In the image above, the dashed boxes around each set of objects seem to imply that the three objects have the same extent. Is this accurate? If not, which object(s) have a different extent?

The lines and polygon objects have the same extent. The extent for the points object is smaller in the vertical direction than the other two because there are no points on the line at y = 8.

Raster Data Format for this Workshop

Raster data can come in many different formats. For this workshop, we will use one of the most common formats for raster data, i.e. the GeoTIFF format, which has the extension .tif. A .tif file stores metadata or attributes about the file as embedded tif tags. For instance, your camera might store a tag that describes the make and model of the camera or the date the photo was taken when it saves a .tif. A GeoTIFF is a standard .tif image format with additional spatial (georeferencing) information embedded in the file as tags. These tags include the following raster metadata:

- Extent

- Resolution

- Coordinate Reference System (CRS) - we will introduce this concept in a later episode

- Values that represent missing data (NoDataValue) - we will introduce this concept in a later episode.

We will discuss these attributes in more detail in a later episode. In that episode, we will also learn how to use Python to extract raster attributes from a GeoTIFF file.
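As a small preview of why the NoDataValue matters, the sketch below computes a mean elevation with and without masking missing cells. The grid is hypothetical and the sentinel -9999 is just an example; each GeoTIFF records its own NoData value in its tags.

```python
NODATA = -9999.0  # example sentinel; real files declare their own value

# Hypothetical elevation grid (meters) with two missing cells.
elevation = [
    [310.0, 315.0, NODATA],
    [305.0, NODATA, 318.0],
]

values = [v for row in elevation for v in row]
valid = [v for v in values if v != NODATA]  # drop cells flagged as missing

naive_mean = sum(values) / len(values)  # treats -9999 as a real elevation
masked_mean = sum(valid) / len(valid)   # ignores missing cells
print(naive_mean, masked_mean)
```

Because the unmasked mean treats -9999 as a real elevation, it is badly wrong (-3125.0 m instead of 312.0 m).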

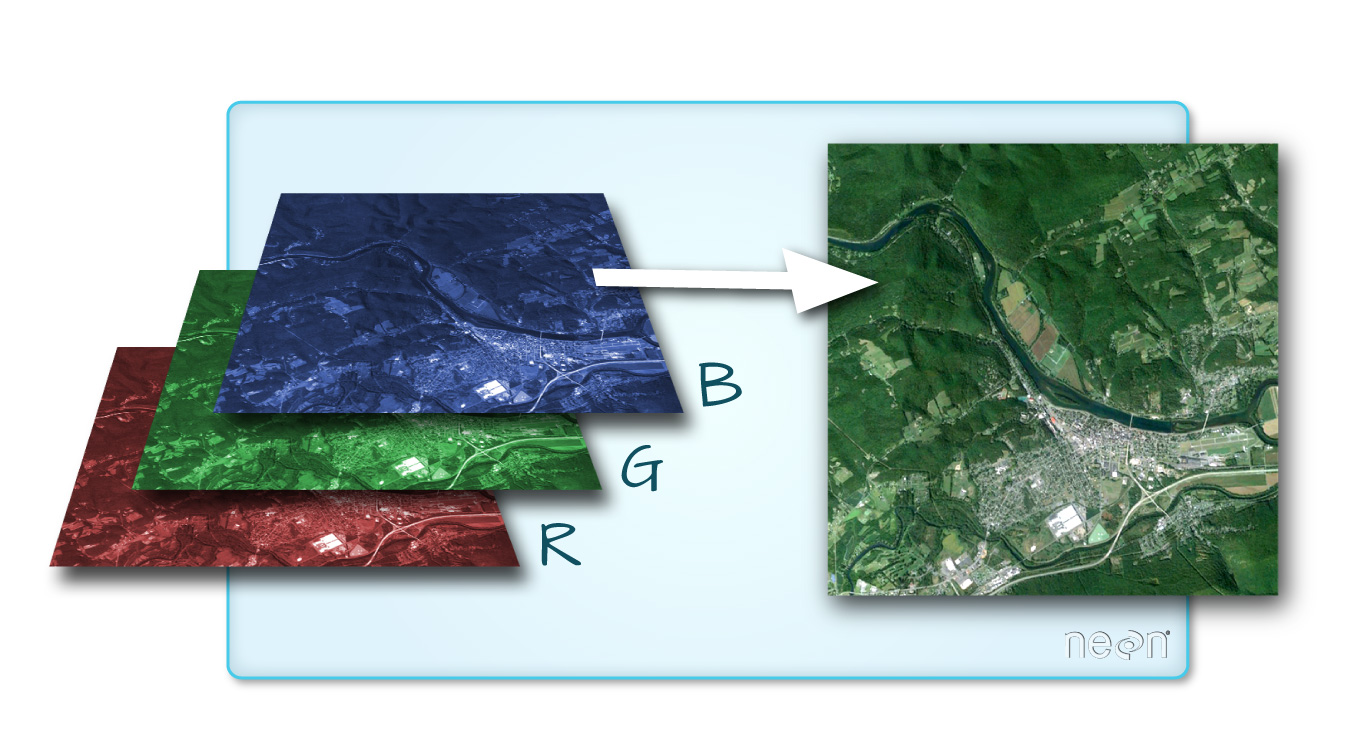

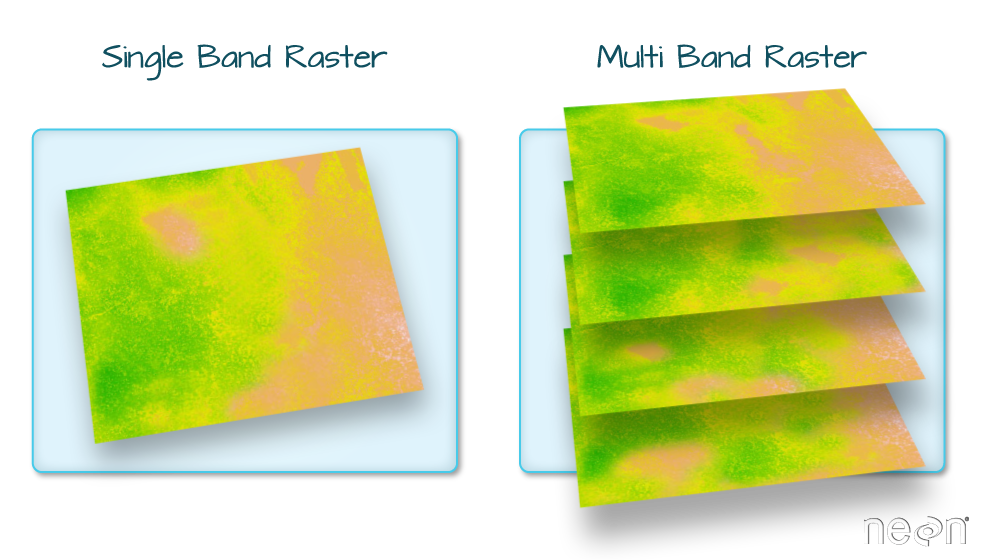

Multi-band Raster Data

A raster can contain one or more bands. One type of multi-band raster dataset that is familiar to many of us is a color image. A basic color image often consists of three bands: red, green, and blue (RGB). Each band represents light reflected from the red, green or blue portions of the electromagnetic spectrum. The pixel brightness for each band, when composited, creates the colors that we see in an image.

We can plot each band of a multi-band image individually.

Or we can composite all three bands together to make a color image.

In a multi-band dataset, the rasters will always have the same extent, resolution, and CRS.
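A minimal sketch of this idea, using hypothetical 8-bit brightness values: each band is a grid of the same shape, and compositing pairs up the values of all bands at each pixel. Plain nested lists are used here for clarity; in this workshop, raster bands will be handled by libraries such as rioxarray instead.

```python
# Hypothetical 2 x 2 image: one grid of 8-bit brightness values (0-255) per band.
red = [[255, 0], [30, 200]]
green = [[0, 255], [30, 200]]
blue = [[0, 0], [30, 50]]
bands = [red, green, blue]

# Bands of one dataset share extent, resolution and CRS, so their grids
# must have identical dimensions.
shapes = {(len(band), len(band[0])) for band in bands}
if len(shapes) != 1:
    raise ValueError("all bands must have the same number of rows and columns")
n_rows, n_cols = shapes.pop()

# Plotting one band on its own would show a single grid, e.g. red.
# Compositing instead combines the bands: each pixel becomes an (R, G, B) tuple.
composite = [[tuple(band[r][c] for band in bands) for c in range(n_cols)]
             for r in range(n_rows)]
print(composite[0][0])  # (255, 0, 0): a pure red pixel
print(composite[1][1])  # (200, 200, 50): a yellowish pixel
```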

Other Types of Multi-band Raster Data

Multi-band raster data might also contain:

1. Time series: the same variable, over the same area, over time.

2. Multi- or hyperspectral imagery: image rasters that have 4 or more (multispectral) or more than 10-15 (hyperspectral) bands. We won’t be working with this type of data in this workshop, but you can check out the NEON Data Skills Imaging Spectroscopy HDF5 in R tutorial if you’re interested in working with hyperspectral data cubes.

- Raster data is pixelated data where each pixel is associated with a specific location.

- Raster data always has an extent and a resolution.

- The extent is the geographical area covered by a raster.

- The resolution is the area covered by each pixel of a raster.

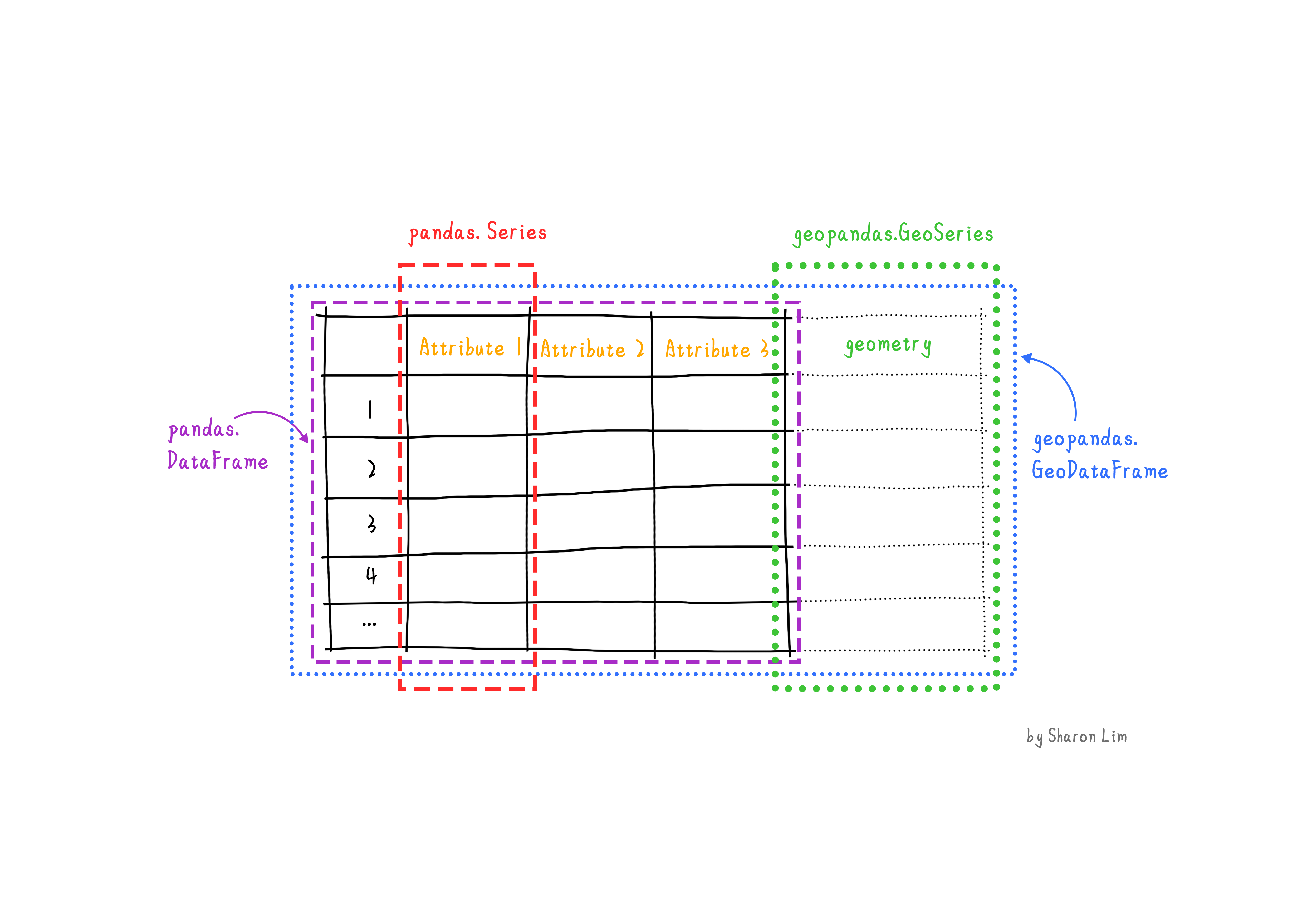

Content from Introduction to Vector Data


Overview

Questions

- What are the main attributes of vector data?

Objectives

- Describe the strengths and weaknesses of storing data in vector format.

- Describe the three types of vectors and identify types of data that would be stored in each.

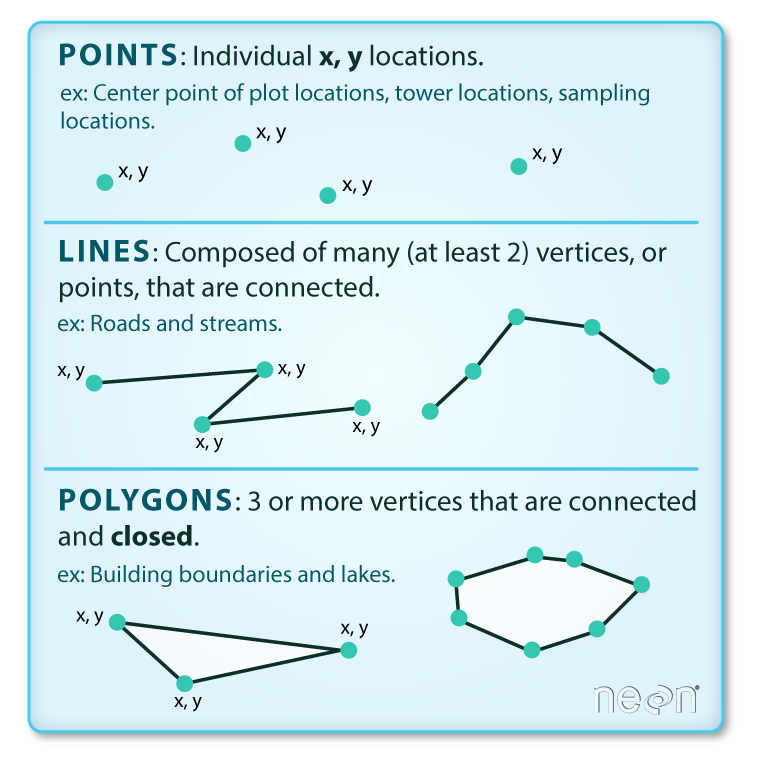

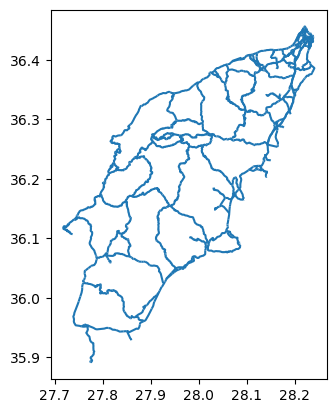

About Vector Data

Vector data structures represent specific features on the Earth’s surface, and assign attributes to those features. Vectors are composed of discrete geometric locations (x, y values) known as vertices that define the shape of the spatial object. The organization of the vertices determines the type of vector that we are working with: point, line or polygon.

Points: Each point is defined by a single x, y coordinate. There can be many points in a vector point file. Examples of point data include: sampling locations, the location of individual trees, or the location of survey plots.

Lines: Lines are composed of many (at least 2) points that are connected. For instance, a road or a stream may be represented by a line. This line is composed of a series of segments; each “bend” in the road or stream represents a vertex that has a defined x, y location.

Polygons: A polygon consists of 3 or more vertices that are connected and closed. The outlines of survey plot boundaries, lakes, oceans, and states or countries are often represented by polygons. Note that polygons can also contain one or multiple holes, for instance a plot boundary with a lake in it. These polygons are considered complex or donut polygons.
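The three vector types can be sketched as plain lists of (x, y) vertices. The minimal example below uses hypothetical coordinates, assumed to be in meters in a projected CRS. It computes the length of a line by summing its segments and the area of a polygon with the shoelace formula, which implicitly closes the ring by joining the last vertex back to the first.

```python
import math

tree = (3.0, 4.0)                                           # point: a single vertex
stream = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]               # line: 2 or more connected vertices
plot = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)]   # polygon: 3 or more vertices, closed


def line_length(vertices):
    """Sum of the straight segments between consecutive vertices."""
    return sum(math.dist(a, b) for a, b in zip(vertices, vertices[1:]))


def polygon_area(vertices):
    """Shoelace formula; index (i + 1) % n joins the last vertex back to the first."""
    n = len(vertices)
    twice_area = sum(vertices[i][0] * vertices[(i + 1) % n][1]
                     - vertices[(i + 1) % n][0] * vertices[i][1]
                     for i in range(n))
    return abs(twice_area) / 2


print(line_length(stream), polygon_area(plot))
```

For a donut polygon, the area would be the area of the outer ring minus the areas of its holes. Libraries such as shapely compute these measures for you.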

Data Tip

Sometimes, boundary layers such as states and countries are stored as lines rather than polygons. However, these boundaries, when represented as a line, will not create a closed object with a defined area that can be filled.

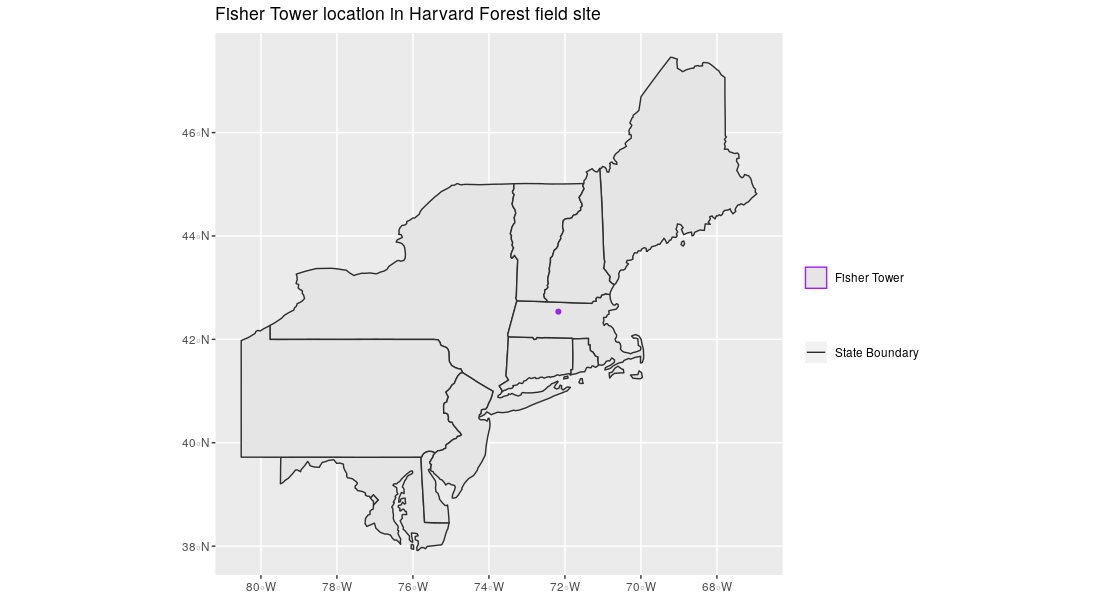

Identify Vector Types

The plot below includes examples of two of the three types of vector objects. Use the definitions above to identify which features are represented by which vector type.

State boundaries are shown as polygons. The Fisher Tower location is represented by a purple point. There are no line features shown. Note that at a different scale the Fisher Tower could also have been represented as a polygon. Keep in mind that the purpose for which a dataset is created and intended to be used determines which vector type it uses.

Vector data has some important advantages:

- The geometry itself contains information about what the dataset creator thought was important

- The geometry structures hold information in themselves - why choose point over polygon, for instance?

- Each geometry feature can carry multiple attributes instead of just one, e.g. a database of cities can have attributes for name, country, population, etc.

- Data storage can, depending on the scale, be very efficient compared to rasters

- When working with network analysis, for instance to calculate the shortest route between A and B, topologically correct lines are essential. Raster data cannot represent this kind of network topology explicitly.
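Network analysis relies on line features that connect at shared junctions. Once a road layer has been turned into a graph of junctions and segments, the shortest route can be found with Dijkstra's algorithm, as in this minimal sketch. The network and segment lengths are hypothetical and written by hand rather than derived from a real dataset.

```python
import heapq

# Hypothetical road network: junctions as nodes, road segments as edges (length in meters).
roads = {
    "A": {"B": 400, "C": 250},
    "B": {"A": 400, "D": 300},
    "C": {"A": 250, "D": 600, "E": 200},
    "D": {"B": 300, "C": 600, "E": 150},
    "E": {"C": 200, "D": 150},
}


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total length, list of junctions) from start to goal."""
    queue = [(0, start, [start])]  # (distance so far, junction, path taken)
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)  # always expand the closest junction first
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, length in graph[node].items():
            if neighbor not in visited:
                heapq.heappush(queue, (dist + length, neighbor, path + [neighbor]))
    return float("inf"), []  # goal not reachable


print(shortest_route(roads, "A", "D"))
```

Here the route A, B, D (700 m) loses to A, C, E, D (600 m), even though it passes through fewer junctions.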

The downsides of vector data include:

- Potential bias in datasets - what didn’t get recorded? Often vector data are interpreted datasets like topographical maps and have been collected by someone else, for another purpose.

- Calculations involving multiple vector layers need to do math on the geometry as well as the attributes, which potentially can be slow compared to raster calculations.

Vector datasets are in use in many industries besides geospatial fields. For instance, computer graphics are largely vector-based, although the data structures in use tend to join points using arcs and complex curves rather than straight lines. Computer-aided design (CAD) is also vector-based. The difference is that geospatial datasets are accompanied by information tying their features to real-world locations.

Vector Data Format for this Workshop

Like raster data, vector data can also come in many different formats. For this workshop, we will use the GeoPackage format. GeoPackage is developed by the Open Geospatial Consortium and is an open, standards-based, platform-independent, portable, self-describing, compact format for transferring geospatial information (source: https://www.geopackage.org/). A GeoPackage file, with extension .gpkg, is a single file that contains the geometries of features, their attributes, and information about the coordinate reference system (CRS) used.
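Because a GeoPackage is built on an SQLite database, Python's built-in sqlite3 module can peek inside one. This minimal sketch lists the layers recorded in the gpkg_contents table that the GeoPackage specification requires; the file name in the usage comment is hypothetical. For real analysis in this workshop, you would read the data with geopandas instead.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def list_layers(gpkg_path):
    """Return (table_name, data_type, srs_id) for each entry in gpkg_contents."""
    # sqlite3.connect() silently creates an empty database if the file is missing.
    if not Path(gpkg_path).is_file():
        raise FileNotFoundError(gpkg_path)
    # closing() makes sure the connection is closed when we are done.
    with closing(sqlite3.connect(gpkg_path)) as conn:
        return conn.execute(
            "SELECT table_name, data_type, srs_id FROM gpkg_contents ORDER BY table_name"
        ).fetchall()


# Usage, with a hypothetical file name:
# for name, data_type, srs_id in list_layers("study_area.gpkg"):
#     print(name, data_type, srs_id)
```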

Another vector format that you will probably come across quite often is the Shapefile. Although we will not be using this format in this lesson, we do believe it is useful to understand how the Shapefile format works. The Shapefile is a multi-file format: each shapefile consists of multiple files in the same directory, of which the .shp, .shx, and .dbf files are mandatory. Other non-mandatory but very important files are the .prj and .shp.xml files.

- The .shp file stores the feature geometry itself.

- The .shx file is a positional index of the feature geometry that allows quickly searching forwards and backwards through the geographic coordinates of each vertex in the vector.

- The .dbf file contains the tabular attributes for each shape.

- The .prj file indicates the coordinate reference system (CRS).

- The .shp.xml file contains the Shapefile metadata.
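Since a shapefile is really a group of files sharing one base name, it is easy to end up with an incomplete copy, for example when only the .shp file is shared. This minimal sketch checks which of the component files listed above exist next to a given .shp file; the path in the usage comment is hypothetical.

```python
from pathlib import Path

# Component files described above, grouped by whether they are mandatory.
MANDATORY = (".shp", ".shx", ".dbf")
RECOMMENDED = (".prj", ".shp.xml")


def shapefile_components(shp_path):
    """Return (missing mandatory, missing recommended) extensions for a .shp file."""
    base = Path(shp_path).with_suffix("")  # "data/roads.shp" -> "data/roads"

    def missing(extensions):
        return [ext for ext in extensions
                if not base.with_name(base.name + ext).exists()]

    return missing(MANDATORY), missing(RECOMMENDED)


# Usage, with a hypothetical path:
# missing_required, missing_optional = shapefile_components("data/roads.shp")
```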

Together, the Shapefile includes the following information:

- Extent - the spatial extent of the shapefile (i.e. geographic area that the shapefile covers). The spatial extent for a shapefile represents the combined extent for all spatial objects in the shapefile.

- Object type - whether the shapefile includes points, lines, or polygons.

- Coordinate reference system (CRS)

- Other attributes - for example, a line shapefile that contains the locations of streams might contain the name of each stream.

Because the structure of points, lines, and polygons is different, each individual shapefile can only contain one vector type (all points, all lines, or all polygons). You will not find a mixture of point, line and polygon objects in a single shapefile.

More Resources on Shapefiles

More about shapefiles can be found on Wikipedia. Shapefiles are often publicly available from government services, such as this page containing all administrative boundaries for countries in the world or topographical vector data from OpenStreetMap.

Why not both?

Very few formats can contain both raster and vector data - in fact, most are even more restrictive than that. Vector datasets are usually locked to one geometry type, e.g. points only. Raster datasets can usually only encode one data type, for example you can’t have a multiband GeoTIFF where one layer is integer data and another is floating-point. There are sound reasons for this - format standards are easier to define and maintain, and so is metadata. The effects of particular data manipulations are more predictable if you are confident that all of your input data has the same characteristics.

- Vector data structures represent specific features on the Earth’s surface along with attributes of those features.

- Vector data is often interpreted data and collected for a different purpose than you would want to use it for.

- Vector objects are either points, lines, or polygons.

Content from Coordinate Reference Systems


Overview

Questions

- What is a coordinate reference system and how do I interpret one?

Objectives

- Name some common schemes for describing coordinate reference systems.

- Interpret a PROJ4 coordinate reference system description.

Coordinate Reference Systems

A data structure cannot be considered geospatial unless it is accompanied by coordinate reference system (CRS) information, in a format that geospatial applications can use to display and manipulate the data correctly. CRS information connects data to the Earth’s surface using a mathematical model.

CRS vs SRS

CRS (coordinate reference system) and SRS (spatial reference system) are synonyms and are commonly interchanged. We will use only CRS throughout this workshop.

The CRS associated with a dataset tells your mapping software where the raster is located in geographic space. It also tells the mapping software what method should be used to flatten or project the raster in geographic space.

The image below (figure 3.1) shows maps of the United States in different projections. Notice the differences in shape associated with each projection. These differences are a direct result of the calculations used to flatten the data onto a 2-dimensional map.

There are lots of great resources that describe coordinate reference systems and projections in greater detail. For the purposes of this workshop, what is important to understand is that data from the same location but saved in different projections will not line up. Thus, it is important when working with spatial data to identify the coordinate reference system applied to the data and retain it throughout data processing and analysis.

Components of a CRS

CRS information has three components:

Datum: A model of the shape of the earth. It has angular units (i.e. degrees) and defines the starting point (i.e. where is [0,0]?) so the angles reference a meaningful spot on the earth. Common global datums are WGS84 and NAD83. Datums can also be local - fit to a particular area of the globe, but ill-fitting outside the area of intended use. For instance, local cadastre, land registry, and mapping agencies require high accuracy for their datasets, which can be achieved using a local datum. In this lesson, we will use the WGS84 datum. The datum is often also referred to as the Geographic Coordinate System.

Projection: A mathematical transformation of the angular measurements on a round earth to a flat surface (i.e. paper or a computer screen). The units associated with a given projection are usually linear (feet, meters, etc.). In this workshop, we will see data in two different projections. Note that the projection is also often referred to as the Projected Coordinate System.

Additional Parameters: Additional parameters are often necessary to create the full coordinate reference system. One common additional parameter is a definition of the center of the map. The number of required additional parameters depends on what is needed by each specific projection.

Orange Peel Analogy

A common analogy employed to teach projections is the orange peel analogy. If you imagine that the Earth is an orange, how you peel it and then flatten the peel is similar to how projections get made.

- A datum is the choice of fruit to use. Is the Earth an orange, a lemon, a lime, a grapefruit?

- A projection is how you peel your orange and then flatten the peel.

- An additional parameter could include a definition of the location of the stem of the fruit. What other parameters could be included in this analogy?

Which projection should I use?

A well-known projection is the Mercator projection, introduced by the Flemish cartographer Gerardus Mercator in the 16th century. This is a so-called cylindrical projection, meaning that a virtual cylinder is placed around the globe to flatten it. Projections of this type are relatively accurate near the equator, but they increasingly inflate sizes towards the poles (more info on cylindrical projections here). The main advantage of the Mercator projection is that it is very suitable for navigation: it preserves angles, so a route of constant compass bearing appears as a straight line on the map. In the 17th century this projection was essential for sailors to navigate the oceans.
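The growth of distortion towards the poles can be quantified. This minimal sketch uses the spherical form of the Mercator projection, a simplification that treats the Earth as a sphere (as Web Mercator does) with R = 6,378,137 m. At latitude φ, lengths are stretched by a factor 1/cos φ and areas by its square.

```python
import math

R = 6_378_137.0  # sphere radius in meters (WGS84 semi-major axis, as used by Web Mercator)


def mercator(lon_deg, lat_deg):
    """Project longitude/latitude (degrees) to spherical Mercator x, y (meters)."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    return R * lon, R * math.log(math.tan(math.pi / 4 + lat / 2))


def scale_factor(lat_deg):
    """Local stretching of lengths at a given latitude: 1 / cos(latitude)."""
    return 1 / math.cos(math.radians(lat_deg))


for lat in (0, 30, 60, 80):
    k = scale_factor(lat)
    print(f"latitude {lat:>2}: lengths x{k:.2f}, areas x{k * k:.1f}")
```

At 60° latitude, for instance, 1/cos 60° = 2, so lengths appear twice as long and areas four times as large as at the equator.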

To decide if a projection is right for your data, answer these questions:

- What is the area of minimal distortion?

- What aspect of the data does it preserve?

Peter Dana from the University of Colorado at Boulder and the Department of Geo-Information Processing have a good discussion of these aspects of projections. Online tools like Projection Wizard can also help you discover projections that might be a good fit for your data.

Data Tip

Take the time to identify a projection that is suited for your project. You don’t have to stick to the ones that are popular.

Describing Coordinate Reference Systems

There are several common systems in use for storing and transmitting CRS information, as well as translating among different CRSs. These systems generally comply with ISO 19111. Common systems for describing CRSs include EPSG, OGC WKT, and PROJ strings.

EPSG

The EPSG system is a database of CRS information maintained by the International Association of Oil and Gas Producers. The dataset contains both CRS definitions and information on how to safely convert data from one CRS to another. Using EPSG is easy as every CRS has an integer identifier, e.g. WGS84 is EPSG:4326. The downside is that you can only use the CRSs defined by EPSG and cannot customise them (some datasets do not have EPSG codes). epsg.io is an excellent website for finding suitable projections by location or for finding information about a particular EPSG code.

Well-Known Text

The Open Geospatial Consortium WKT standard is used by a number of important geospatial apps and software libraries. WKT is a nested list of geodetic parameters. The structure of the information is defined on their website. WKT is valuable in that the CRS information is more transparent than in EPSG, but it can be more difficult to read and compare than PROJ, since it is designed to represent more complex CRS information. Additionally, the WKT standard is implemented inconsistently across various software platforms, and the spec itself has some known issues.

PROJ

PROJ is an open-source library for storing, representing and transforming CRS information. PROJ strings continue to be used, but the format is deprecated by the PROJ C maintainers due to inaccuracies when converting to the WKT format. The data and Python libraries we will be working with in this workshop use different underlying representations of CRSs for reprojecting. CRS information can still be represented with EPSG, WKT, or PROJ strings without consequence, but it is best to use PROJ strings only as a format for viewing CRS information, not for reprojecting data.

PROJ represents CRS information as a text string of key-value pairs, which makes it easy to read and interpret.

A PROJ4 string includes the following information:

- proj: the projection of the data

- zone: the zone of the data (this is specific to the UTM projection)

- datum: the datum used

- units: the units for the coordinates of the data

- ellps: the ellipsoid (how the earth’s roundness is calculated) for the data

Note that the zone is unique to the UTM projection. Not all CRSs will have a zone.

Reading a PROJ4 String

Here is a PROJ4 string for one of the datasets we will use in this workshop:

+proj=utm +zone=18 +datum=WGS84 +units=m +no_defs +ellps=WGS84 +towgs84=0,0,0

- What projection, zone, datum, and ellipsoid are used for this data?

- What are the units of the data?

- Using the map above, what part of the United States was this data collected from?

- Projection is UTM, zone 18, datum is WGS84, ellipsoid is WGS84.

- The data is in meters.

- The data comes from the eastern US seaboard.
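For viewing purposes, a PROJ string can be split into its key-value pairs with a few lines of code. This minimal sketch parses the string from the challenge above and computes the longitude range of its UTM zone, assuming the standard 6-degree zones numbered eastwards from 180°W (the special zones around Norway and Svalbard are ignored). For reprojection, rely on a library such as pyproj rather than hand-parsed strings.

```python
def parse_proj(proj_string):
    """Split "+key=value" tokens into a dict; bare flags such as +no_defs become True."""
    params = {}
    for token in proj_string.split():
        key, sep, value = token.lstrip("+").partition("=")
        params[key] = value if sep else True
    return params


def utm_zone_longitudes(zone):
    """Return the (west, east) longitude bounds in degrees of a standard UTM zone.

    UTM zones are 6 degrees wide and numbered 1-60 eastwards from 180 degrees West.
    """
    west = (zone - 1) * 6 - 180
    return west, west + 6


crs = parse_proj("+proj=utm +zone=18 +datum=WGS84 +units=m +no_defs +ellps=WGS84 +towgs84=0,0,0")
print(crs["proj"], crs["zone"], crs["datum"], crs["ellps"], crs["units"])
print(utm_zone_longitudes(int(crs["zone"])))
```

Zone 18 spans 78°W to 72°W, which is consistent with data from the eastern US seaboard.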

Format interoperability

Many existing file formats were invented by GIS software developers, often in a closed-source environment. This led to the large number of formats on offer today, and considerable problems transferring data between software environments. The Geospatial Data Abstraction Library (GDAL) is an open-source answer to this issue.

GDAL is a set of software tools that translate between almost any geospatial format in common use today (and some not so common ones). GDAL also contains tools for editing and manipulating both raster and vector files, including reprojecting data to different CRSs. GDAL can be used as a standalone command-line tool, or built into other GIS software. Several open-source GIS programs use GDAL for all file import/export operations.

Metadata

Spatial data is useless without metadata. Essential metadata includes the CRS information, but proper spatial metadata encompasses more than that. History and provenance of a dataset (how it was made), who is in charge of maintaining it, and appropriate (and inappropriate!) use cases should also be documented in metadata. This information should accompany a spatial dataset wherever it goes. In practice this can be difficult, as many spatial data formats don’t have a built-in place to hold this kind of information. Metadata often has to be stored in a companion file, and generated and maintained manually.

More Resources on CRS

- spatialreference.org - A comprehensive online library of CRS information.

- QGIS Documentation - CRS Overview.

- Choosing the Right Map Projection.

- Video highlighting how map projections can make continents seem proportionally larger or smaller than they actually are.

- The True Size: an intuitive web map that allows you to compare the actual size of countries by dragging them to another place in the Web Mercator projection.

- All geospatial datasets (raster and vector) are associated with a specific coordinate reference system.

- A coordinate reference system includes datum, projection, and additional parameters specific to the dataset.

- All maps are distorted because of the projection.

Content from The Geospatial Landscape


Overview

Questions

- What programs and applications are available for working with geospatial data?

Objectives

- Describe the difference between various approaches to geospatial computing, and their relative strengths and weaknesses.

- Name some commonly used GIS applications.

- Name some commonly used Python packages that can access and process spatial data.

- Describe pros and cons for working with geospatial data using a command-line versus a graphical user interface.

Standalone Software Packages

Most traditional GIS work is carried out in standalone applications that aim to provide end-to-end geospatial solutions. These applications are available under a wide range of licenses and price points. Some of the most common are listed below.

Open-source software

The Open Source Geospatial Foundation (OSGEO) supports several actively managed GIS platforms:

- QGIS is a professional GIS application that is built on top of and proud to be itself Free and Open Source Software (FOSS). QGIS is written in Python and C++, has a Python console interface, allows you to develop plugins, and has several interfaces written in R, including RQGIS.

- GRASS GIS, commonly referred to as GRASS (Geographic Resources Analysis Support System), is a FOSS-GIS software suite used for geospatial data management and analysis, image processing, graphics and maps production, spatial modeling, and visualization. GRASS GIS is currently used in academic and commercial settings around the world, as well as by many governmental agencies and environmental consulting companies. It is a founding member of the Open Source Geospatial Foundation (OSGeo). GRASS GIS can be installed along with and made accessible within QGIS 3.

- GDAL is a multiplatform set of tools for translating between geospatial data formats. It can also handle reprojection and a variety of geoprocessing tasks. GDAL is built in to many applications both FOSS and commercial, including GRASS and QGIS.

- SAGA-GIS, or System for Automated Geoscientific Analyses, is a FOSS-GIS application developed by a small team of researchers from the Dept. of Physical Geography, Göttingen, and the Dept. of Physical Geography, Hamburg. SAGA has been designed for an easy and effective implementation of spatial algorithms, offers a comprehensive, growing set of geoscientific methods, provides an easily approachable user interface with many visualisation options, and runs under Windows and Linux operating systems. Like GRASS GIS, it can also be installed and made accessible in QGIS 3.

- PostGIS is a geospatial extension to the PostgreSQL relational database and is especially suited to work with large vector datasets.

- GeoDMS is a powerful open-source GIS application that allows for fast calculations, including on large datasets. Furthermore, it allows for complex scenario analyses.

Commercial software

- ESRI (Environmental Systems Research Institute) is an international supplier of geographic information system (GIS) software, web GIS and geodatabase management applications. ESRI provides several licensed platforms for performing GIS, including ArcGIS, ArcGIS Online, and Portal for ArcGIS, a standalone version of ArcGIS Online which you host locally. ESRI welcomes development on their platforms through their DevLabs. ArcGIS software can be installed using Chef Cookbooks from GitHub. In addition, ESRI offers the ArcPy Python library as part of an ArcGIS Pro licence, allowing you to translate operations in the ArcGIS Pro GUI to Python scripts.

- Pitney Bowes produce MapInfo Professional, which was one of the earliest desktop GIS programs on the market.

- Hexagon Geospatial Power Portfolio includes many geospatial tools including ERDAS Imagine, powerful software for remote sensing.

- Manifold is a desktop GIS that emphasizes speed through the use of parallel and GPU processing.

Online + Cloud computing

- PANGEO is a community organization dedicated to open and reproducible data science with Python. They focus on the Pangeo software ecosystem for working with big data in the geosciences.

- Google developed Google Earth Engine which combines a multi-petabyte catalog of satellite imagery and geospatial datasets with planetary-scale analysis capabilities and makes it available for scientists, researchers, and developers to detect changes, map trends, and quantify differences on the Earth’s surface. Earth Engine API runs in both Python and JavaScript.

- ArcGIS Online provides access to thousands of maps and base layers.

Private companies have released SDK platforms for large scale GIS analysis:

- Kepler.gl is Uber’s toolkit for handling large datasets (i.e. Uber’s data archive).

- Boundless Geospatial is built upon OSGEO software for enterprise solutions.

Publicly funded open-source platforms for large scale GIS analysis:

- PANGEO for the Earth Sciences. This community organization also supports Python libraries like xarray, iris, dask, jupyter, and many other packages.

- Sepal.io by FAO Open Foris utilizing EOS satellite imagery and cloud resources for global forest monitoring.

GUI vs CLI

The earliest computer systems operated without a graphical user interface (GUI), relying only on the command-line interface (CLI). Since mapping and spatial analysis are strongly visual tasks, GIS applications benefited greatly from the emergence of GUIs and quickly came to rely heavily on them. Most modern GIS applications have very complex GUIs, with all common tools and procedures accessed via buttons and menus.

Benefits of using a GUI include:

- Tools are all laid out in front of you

- Complex commands are easy to build

- Don’t need to learn a coding language

- Cartography and visualisation are more intuitive and flexible

Downsides of using a GUI include:

- Low reproducibility - you can record your actions and replay, but this is limited to the functionalities of the software

- Batch-processing is possible, but limited to the functionalities of the software and hard to integrate with other workflows

- Limited ability to customise functions or write your own

- Intimidating interface for new users - so many buttons!

In scientific computing, the lack of reproducibility in point-and-click software has come to be viewed as a critical weakness. As such, scripted CLI-style workflows are becoming popular, which leads us to another approach to doing GIS: via a programming language. This is the approach we will be using throughout this workshop.

GIS in programming languages

A number of powerful geospatial processing libraries exist for general-purpose programming languages like Java and C++. However, the learning curve for these languages is steep and the effort required is excessive for users who only need a subset of their functionality.

Higher-level scripting languages like Python and R are considered easier to learn and use. Both now have their own packages that wrap up those geospatial processing libraries and make them easy to access and use safely. A key example is the Java Topology Suite (JTS), which is implemented in C++ as GEOS. GEOS is accessible in Python via the shapely package (and geopandas, which makes use of shapely) and in R via sf. R and Python also have interface packages for GDAL, and for specific GIS apps.

This last point is a huge advantage for GIS-by-programming; these interface packages give you the ability to access functions unique to particular programs, but have your entire workflow recorded in a central document - a document that can be re-run at will. Below are lists of some of the key spatial packages for Python, which we will be using in the remainder of this workshop.

- geopandas and geocube for working with vector data

- rasterio and rioxarray for working with raster data

These packages, along with the matplotlib package, are all we need for spatial data visualisation. Python also has many fundamental scientific packages that are relevant in the geospatial domain. Particularly fundamental ones are numpy, scipy, and scikit-image, which are all excellent options for working with rasters as arrays.

An overview of these and other Python spatial packages can be accessed here.
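The idea of working with rasters as arrays can be illustrated with numpy alone. The sketch below uses a small, made-up 4 x 4 single-band grid and assumes, for illustration only, that the value 0 marks missing data (no-data). It masks those pixels before computing statistics and shows that raster algebra is plain array arithmetic.

```python
import numpy as np

# A tiny made-up 4 x 4 single-band raster: each element is one pixel value.
raster = np.array(
    [
        [0, 120, 130, 140],
        [0, 110, 125, 135],
        [0, 100, 115, 128],
        [0, 0, 105, 118],
    ],
    dtype=np.uint16,
)

# Assumption for this example: 0 means "no measurement" (no-data).
nodata = 0
valid = raster != nodata  # boolean mask of pixels holding real values

# Statistics computed only over the valid pixels
mean_value = raster[valid].mean()
print(f"valid pixels: {valid.sum()} of {raster.size}")
print(f"mean of valid pixels: {mean_value:.2f}")

# Raster algebra is array arithmetic; the 1/10000 scaling is only illustrative.
# No-data pixels become NaN so they are ignored by nan-aware functions.
reflectance = np.where(valid, raster / 10000.0, np.nan)
print(np.nanmax(reflectance))
```

The same masking pattern applies unchanged to a full-size band loaded from disk, because a single-band raster is just a 2D array plus georeferencing metadata.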

As a programming language, Python can be a CLI tool. However, using Python together with an Integrated Development Environment (IDE) application allows some GUI features to become part of your workflow. IDEs allow the best of both worlds. They provide a place to visually examine data and other software objects, interact with your file system, and draw plots and maps, but your activities are still command-driven: recordable and reproducible. There are several IDEs available for Python. JupyterLab is well-developed and the most widely used option for data science in Python. VSCode and Spyder are other popular options for data science.

Traditional GIS apps are also moving back towards providing a scripting environment for users, further blurring the CLI/GUI divide. ESRI have adopted Python into their software by introducing ArcPy, and QGIS is both Python and R-friendly.

GIS File Types

There are a variety of file types that are used in GIS analysis. Depending on the program you choose to use, some file types can be read while others cannot. Below are brief tables describing some of the most common vector and raster file types.

Vector

| File Type | Extensions | Description |
|---|---|---|
| Esri Shapefile | .SHP .DBF .SHX | The most common geospatial file type, which has become the industry standard. The three required files are: SHP, the feature geometry; SHX, the shape index position; DBF, the attribute data. |
| GeoPackage | .gpkg | An open alternative to the Shapefile that is gaining ground; it consists of a single file containing all the necessary information. |
| Geographic JavaScript Object Notation (GeoJSON) | .GEOJSON .JSON | Used for web-based mapping; stores the coordinates as text using JavaScript Object Notation. |
| Google Keyhole Markup Language (KML) | .KML .KMZ | An XML-based GIS format, primarily used for Google Earth. |
| GPX or GPS Exchange Format | .gpx | An XML schema designed as a common GPS data format for software applications. This format is often used for tracking activities, e.g. hiking, cycling, running. |
| OpenStreetMap | .OSM | The native file format of OpenStreetMap, which has become the largest crowdsourced GIS data project in the world. These files are a collection of vector features from crowdsourced contributions from the open community. |

Raster

| File Type | Extensions | Description |
|---|---|---|
| ERDAS Imagine | .IMG | ERDAS Imagine IMG is a proprietary file format developed by Hexagon Geospatial. IMG files are commonly used to store single and multiple bands of satellite data. Each raster layer in an IMG file contains information about its data values, including projection, statistics, attributes, pyramids and whether it is a continuous or discrete raster. |
| GeoTIFF | .TIF .TIFF .OVR | The GeoTIFF has become an industry-standard image format for GIS and satellite remote sensing applications. GeoTIFFs may be accompanied by other files: TFW is a world file that can give your raster its geolocation; XML files optionally accompany GeoTIFFs and hold your metadata; AUX auxiliary files store projections and other information; OVR pyramid files improve performance for raster display. |
| Cloud Optimized GeoTIFF (COG) | .TIF .TIFF | Based on the GeoTIFF standard, COGs incorporate tiling and overviews to support HTTP range requests, so users can query and load subsets of the image without having to transfer the entire file. |

- Many software packages exist for working with geospatial data.

- Command-line programs allow you to automate and reproduce your work.

- JupyterLab provides a user-friendly interface for working with Python.

Content from Access satellite imagery using Python

Last updated on 2025-02-26

Overview

Questions

- Where can I find open-access satellite data?

- How do I search for satellite imagery with the STAC API?

- How do I fetch remote raster datasets using Python?

Objectives

- Search public STAC repositories of satellite imagery using Python.

- Inspect the metadata of search results.

- Download (a subset of) the assets available for a satellite scene.

- Open satellite imagery as raster data and save it to disk.

Considerations for the position of this episode in the workshop

When this workshop is taught to learners with limited prior knowledge of Python, it might be better to place this episode after episode 11 and before episode 12. This episode contains an introduction to working with APIs and dictionaries, which can be perceived as challenging by some learners. Another consideration for placing this episode later in the workshop is when it is taught to learners with prior GIS knowledge who want to perform GIS-like operations with data they have already collected or for learners interested in working with raster data but less interested in satellite images.

Introduction

A number of satellites take snapshots of the Earth’s surface from space. The images recorded by these remote sensors represent a very valuable data source for any activity that involves monitoring changes on Earth. Satellite imagery is typically provided in the form of geospatial raster data, with the measurements in each grid cell (“pixel”) being associated with accurate geographic coordinate information.

In this episode we will explore how to access open satellite data using Python. In particular, we will consider the Sentinel-2 data collection that is hosted on Amazon Web Services (AWS). This dataset consists of multi-band optical images acquired by the constellation of two satellites from the Sentinel-2 mission and it is continuously updated with new images.

Search for satellite imagery

The SpatioTemporal Asset Catalog (STAC) specification

Current sensor resolutions and satellite revisit periods are such that terabytes of data products are added daily to the corresponding collections. Such datasets cannot be made accessible to users via full-catalog download. Therefore, space agencies and other data providers often offer access to their data catalogs through interactive Graphical User Interfaces (GUIs), see for instance the Copernicus Browser for the Sentinel missions. Accessing data via a GUI is a nice way to explore a catalog and get familiar with its content, but it becomes tedious and error-prone when used systematically to retrieve data, and should be avoided for that purpose.

A service that offers programmatic access to the data enables users to reach the desired data in a more reliable, scalable and reproducible manner. An important element in the software interface exposed to the users, which is generally called the Application Programming Interface (API), is the use of standards. Standards, in fact, can significantly facilitate the reusability of tools and scripts across datasets and applications.

The SpatioTemporal Asset Catalog (STAC) specification is an emerging standard for describing geospatial data. By organizing metadata in a form that adheres to the STAC specifications, data providers make it possible for users to access data from different missions, instruments and collections using the same set of tools.

Search a STAC catalog

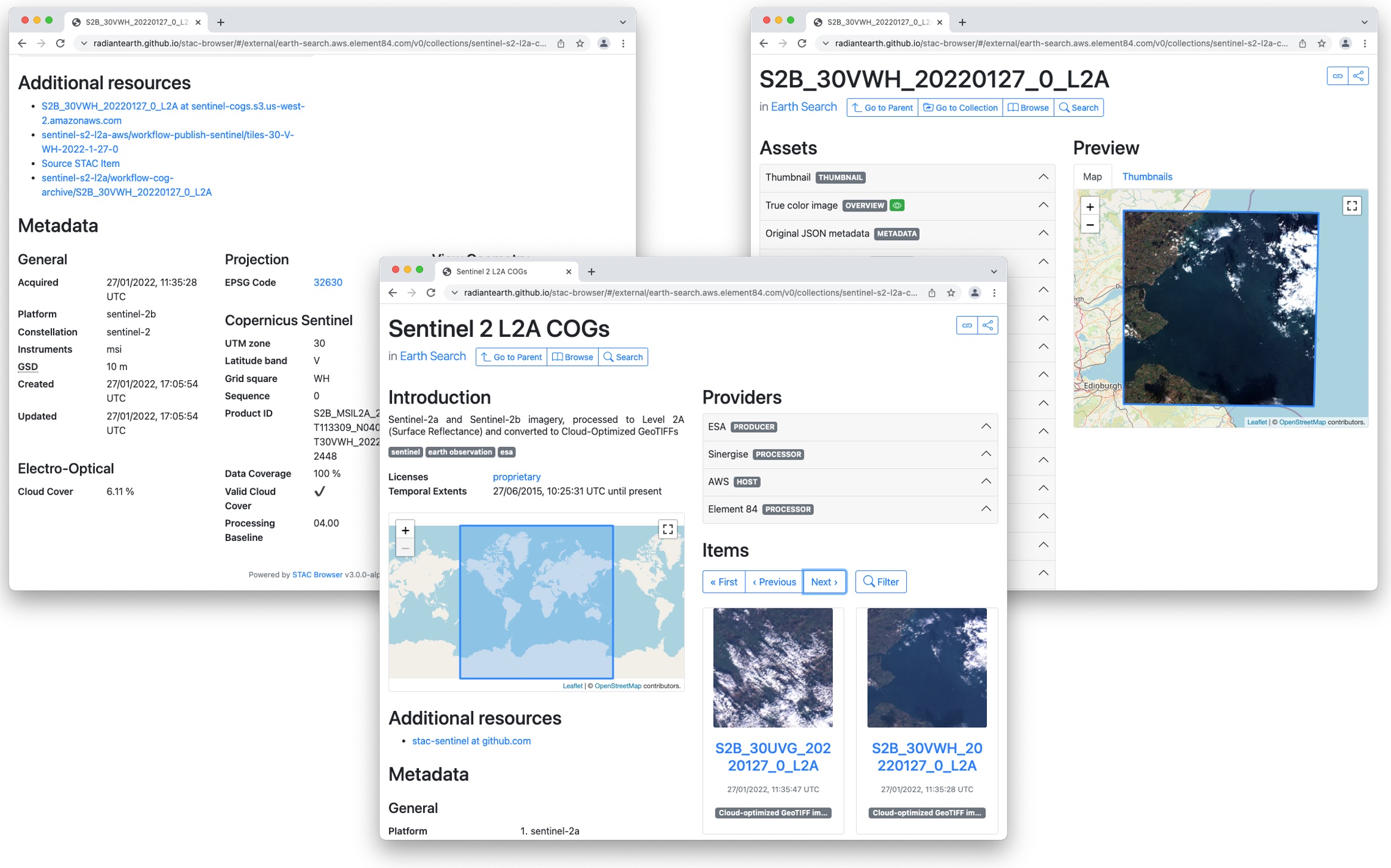

The STAC browser is a good starting point to discover available datasets, as it provides an up-to-date list of existing STAC catalogs. From the list, let’s click on the “Earth Search” catalog, i.e. the access point to search the archive of Sentinel-2 images hosted on AWS.

Exercise: Discover a STAC catalog

Let’s take a moment to explore the Earth Search STAC catalog, which is the catalog indexing the Sentinel-2 collection that is hosted on AWS. We can interactively browse this catalog using the STAC browser at this link.

- Open the link in your web browser. Which (sub-)catalogs are available?

- Open the Sentinel-2 Level 2A collection, and select one item from the list. Each item corresponds to a satellite “scene”, i.e. a portion of the footage recorded by the satellite at a given time. Have a look at the metadata fields and the list of assets. What kind of data do the assets represent?

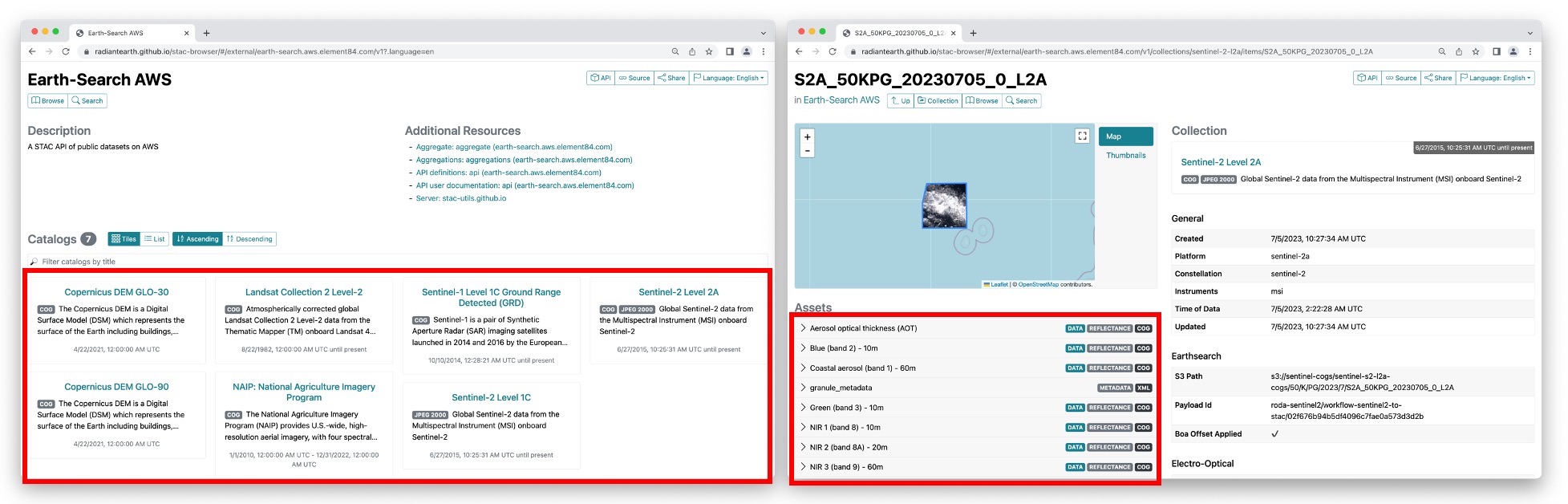

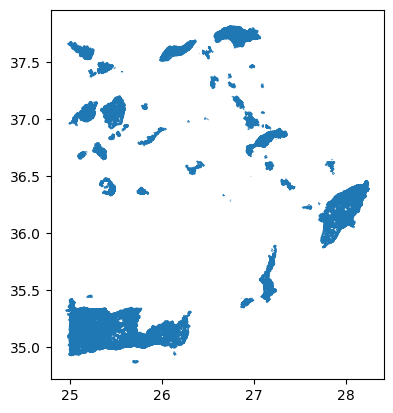

- 8 sub-catalogs are available. In the STAC nomenclature, these are actually “collections”, i.e. catalogs with additional information about the elements they list: spatial and temporal extents, license, providers, etc. Among the available collections, we have Landsat Collection 2, Level-2 and Sentinel-2 Level 2A (see left screenshot in the figure above).

- When you select the Sentinel-2 Level 2A collection, and randomly choose one of the items from the list, you should find yourself on a page similar to the right screenshot in the figure above. On the left side you will find a list of the available assets: overview images (thumbnail and true color images), metadata files and the “real” satellite images, one for each band captured by the Multispectral Instrument on board Sentinel-2.

When opening a catalog with the STAC browser, you can access the API URL by clicking on the “Source” button on the top right of the page. By using this URL, you have access to the catalog content and, if supported by the catalog, to the functionality of searching its items. For the Earth Search STAC catalog the API URL is:

You can query a STAC API endpoint from Python using the pystac_client library. To do so, we will first import Client from pystac_client and use the method open from the Client object:
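A minimal sketch of this step follows. It assumes the Earth Search v1 endpoint `https://earth-search.aws.element84.com/v1` (the "Source" button of the STAC browser shows the current URL). The wrapper name `open_catalog` is hypothetical; the call it wraps is pystac_client's `Client.open`.

```python
# Assumption: the Earth Search v1 STAC API (Element 84), which indexes the
# Sentinel-2 COGs hosted on AWS. Verify via the STAC browser's "Source" button.
api_url = "https://earth-search.aws.element84.com/v1"


def open_catalog(url: str = api_url):
    """Open a STAC API endpoint and return a pystac_client.Client.

    Needs the pystac-client package and network access. The import sits inside
    the function so that defining it has no side effects.
    """
    from pystac_client import Client

    return Client.open(url)


# Usage (sends an HTTP request to the endpoint):
# client = open_catalog()
```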

For this episode we will focus on scenes belonging to the sentinel-2-l2a collection. This dataset is useful for our case and includes Sentinel-2 data products pre-processed at level 2A (bottom-of-atmosphere reflectance).

To see which collections are available at the provided api_url, the get_collections method can be used on the Client object.

To print the collections, we can use a for loop:
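Such a loop can be sketched as a small function. The name `print_collections` is hypothetical. It takes an already opened `Client`, prints each `CollectionClient` returned by `get_collections()`, and also collects their `id` attributes so that they can be reused, for instance to check that `sentinel-2-l2a` is present.

```python
def print_collections(client) -> list:
    """Print every collection offered by an opened STAC client and return the ids.

    `client` is expected to be a pystac_client.Client (e.g. from Client.open);
    get_collections() yields CollectionClient objects that have an `id`.
    """
    collection_ids = []
    for collection in client.get_collections():
        print(collection)
        collection_ids.append(collection.id)
    return collection_ids


# Usage with the client opened above (network access required):
# ids = print_collections(client)
# print("sentinel-2-l2a" in ids)
```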

OUTPUT

<CollectionClient id=cop-dem-glo-30>

<CollectionClient id=naip>

<CollectionClient id=sentinel-2-l2a>

<CollectionClient id=sentinel-2-l1c>

<CollectionClient id=cop-dem-glo-90>

<CollectionClient id=landsat-c2-l2>

<CollectionClient id=sentinel-1-grd>

<CollectionClient id=sentinel-2-c1-l2a>

As mentioned, we want to focus on the sentinel-2-l2a collection. To do so, we store this collection in a variable:

The data in this collection is stored in the Cloud Optimized GeoTIFF (COG) format and as JPEG2000 images. In this episode we will focus on COGs, as these offer useful functionalities for our purpose.

Cloud Optimized GeoTIFFs

Cloud Optimized GeoTIFFs (COGs) are regular GeoTIFF files with some additional features that make them ideal for use in the context of cloud computing and other web-based services. This format builds on the widely-employed GeoTIFF format, already introduced in Episode 1: Introduction to Raster Data. In essence, COGs are regular GeoTIFF files with a special internal structure. One of the features of COGs is that data is organized in “blocks” that can be accessed remotely via independent HTTP requests. Data users can thus access only the blocks of a GeoTIFF that are relevant for their analysis, without having to download the full file. In addition, COGs typically include multiple lower-resolution versions of the original image, called “overviews”, which can also be accessed independently. By providing this “pyramidal” structure, users who are not interested in the details provided by a high-resolution raster can directly access the lower-resolution versions of the same image, significantly reducing download time. More information on the COG format can be found here.
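To make the blocks and overviews more concrete, here is a plain-arithmetic sketch (not a COG reader). It computes which internal tiles a reader would need to request for a given pixel window, and how large each level of a halving overview pyramid would be. The 512-pixel tile size and the factor-of-two overview levels are illustrative assumptions; actual files may use other values. The 10980 x 10980 image size matches the 10 m red band opened later in this episode.

```python
import math


def tiles_for_window(col_off, row_off, width, height, tile_size=512):
    """Return the (tile_row, tile_col) indices of the tiles a pixel window overlaps.

    Only the byte ranges of these tiles would have to be fetched via HTTP range
    requests. tile_size=512 is an assumption for illustration.
    """
    first_col = col_off // tile_size
    last_col = (col_off + width - 1) // tile_size
    first_row = row_off // tile_size
    last_row = (row_off + height - 1) // tile_size
    return [
        (row, col)
        for row in range(first_row, last_row + 1)
        for col in range(first_col, last_col + 1)
    ]


def overview_shapes(width, height, levels=4):
    """(width, height) of successive overviews, each assumed to halve the resolution."""
    shapes = []
    for level in range(1, levels + 1):
        factor = 2 ** level
        shapes.append((math.ceil(width / factor), math.ceil(height / factor)))
    return shapes


# A 1000 x 1000 pixel window inside a 10980 x 10980 image
needed = tiles_for_window(col_off=2000, row_off=3000, width=1000, height=1000)
total = math.ceil(10980 / 512) ** 2
print(f"{len(needed)} of {total} tiles need to be fetched")
print(overview_shapes(10980, 10980))
```

In this example only 9 of the 484 tiles overlap the window. This is why reading a small area of a large COG costs far less than downloading the whole file.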

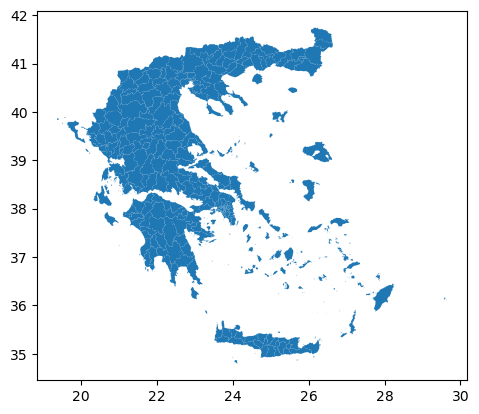

In order to get data for a specific location you can add longitude

latitude coordinates (World Geodetic System 1984 EPSG:4326) in your

request. In order to do so we are using the shapely library

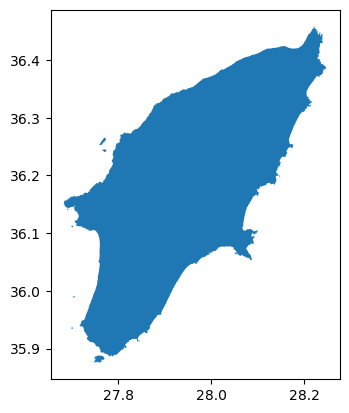

to define a geometrical point. Below we have included a point on the

island of Rhodes, which is the location of interest for our case study

(i.e. Longitude: 27.95 | Latitude 36.20).

PYTHON

from shapely.geometry import Point

point = Point(27.95, 36.20)  # Coordinates of a point on Rhodes

Note: at this stage, we are only dealing with metadata, so no image is going to be downloaded yet. But even metadata can be quite bulky if a large number of scenes match our search! For this reason, we limit the search to scenes that intersect the point (by setting the parameter intersects) and to the chosen collection (by setting the parameter collections). More information about the possible parameters can be found in the pystac_client documentation for the Client’s search method.

We now set up our search of satellite images in the following way:

Now we submit the query to find out how many scenes match our search criteria with the parameters assigned above (note that your result may differ from the output below, as more data has been added to the catalog since this episode was created):
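A minimal sketch of the search setup and the count follows, assuming the `client` opened earlier. In this sketch the point is written as a GeoJSON dictionary, which pystac_client accepts for `intersects` in the same way as the shapely `Point` above. `build_search_kwargs` and `count_matches` are hypothetical helper names; `Client.search` and `ItemSearch.matched` are the pystac_client methods doing the work.

```python
def build_search_kwargs(lon, lat, collection="sentinel-2-l2a"):
    """Keyword arguments for Client.search: one collection, items intersecting a point.

    The point is given as GeoJSON (lon/lat in EPSG:4326); a shapely Point such as
    Point(27.95, 36.20) could be passed to `intersects` instead.
    """
    return {
        "collections": [collection],
        "intersects": {"type": "Point", "coordinates": [lon, lat]},
    }


def count_matches(client, **search_kwargs):
    """Submit the search and return the number of matching items reported by the API."""
    search = client.search(**search_kwargs)
    return search.matched()


kwargs = build_search_kwargs(27.95, 36.20)
print(kwargs)
# With an opened client (network access required):
# print(count_matches(client, **kwargs))
```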

OUTPUT

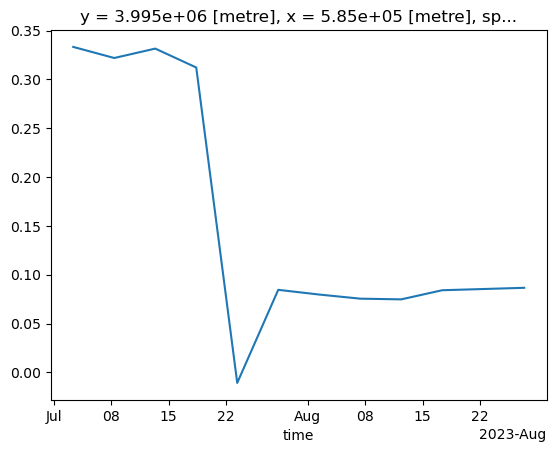

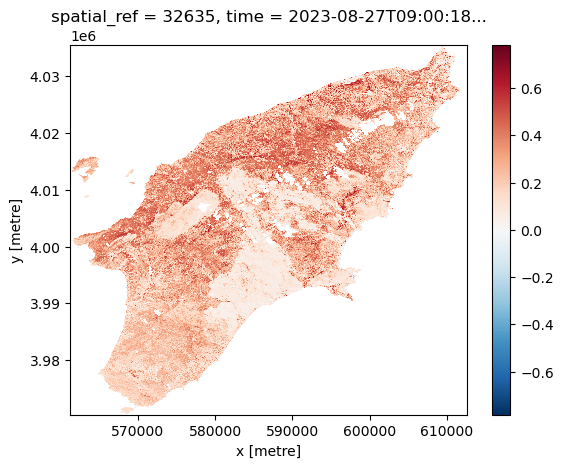

611

You will notice that more than 500 scenes match our search criteria. We are, however, interested in the period right before and after the wildfire on Rhodes. In the following exercise you will therefore add a time filter to our search criteria to narrow down our search to images of that period.

Exercise: Search satellite scenes with a time filter

Search for all the available Sentinel-2 scenes in the sentinel-2-l2a collection that were recorded between 1st July 2023 and 31st August 2023 (a few weeks before and after the time in which the wildfire took place).

Hint: You can find the input argument and the required syntax in the documentation of client.search (which you can access from Python or online).

How many scenes are available?
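One possible way to express the time filter uses the `datetime` argument of `client.search`, which accepts an interval written as `"start/end"`. The helper `datetime_interval` is hypothetical. The commented search call assumes the `client` and `point` variables defined above.

```python
from datetime import date


def datetime_interval(start: date, end: date) -> str:
    """Format a date range as the 'start/end' string accepted by Client.search(datetime=...)."""
    if end < start:
        raise ValueError("end date must not precede start date")
    return f"{start.isoformat()}/{end.isoformat()}"


interval = datetime_interval(date(2023, 7, 1), date(2023, 8, 31))
print(interval)  # 2023-07-01/2023-08-31

# One possible solution to the exercise (network access required):
# search = client.search(
#     collections=["sentinel-2-l2a"],
#     intersects=point,
#     datetime=interval,
# )
# print(search.matched())
```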

Now that we have added a time filter, we retrieve the metadata of the search results by calling the method item_collection:

The variable items is an ItemCollection object. More information can be found in the pystac documentation.

Now let us check the size using len:

OUTPUT

12

This is consistent with the number of scenes matching our search results as found with search.matched(). We can iterate over the returned items and print them to show their IDs:

OUTPUT

<Item id=S2A_35SNA_20230827_0_L2A>

<Item id=S2B_35SNA_20230822_0_L2A>

<Item id=S2A_35SNA_20230817_0_L2A>

<Item id=S2B_35SNA_20230812_0_L2A>

<Item id=S2A_35SNA_20230807_0_L2A>

<Item id=S2B_35SNA_20230802_0_L2A>

<Item id=S2A_35SNA_20230728_0_L2A>

<Item id=S2B_35SNA_20230723_0_L2A>

<Item id=S2A_35SNA_20230718_0_L2A>

<Item id=S2B_35SNA_20230713_0_L2A>

<Item id=S2A_35SNA_20230708_0_L2A>

<Item id=S2B_35SNA_20230703_0_L2A>

Each of the items contains information about the scene geometry, its acquisition time, and other metadata that can be accessed as a dictionary from the properties attribute. To see which information Item objects can contain, you can have a look at the pystac documentation.
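The item IDs printed above already encode part of this metadata. The sketch below splits an ID into its fields. The field meanings are inferred from the properties of the first item shown later in this episode (platform `sentinel-2a`, `grid:code` `MGRS-35SNA`, the acquisition date, `s2:sequence` `'0'`, level 2A). This naming is an assumption that holds for the items of this collection; other collections (e.g. sentinel-2-c1-l2a) may name their items differently. `parse_item_id` is a hypothetical helper.

```python
from datetime import datetime


def parse_item_id(item_id: str) -> dict:
    """Split an ID such as 'S2A_35SNA_20230827_0_L2A' into its assumed fields."""
    platform, tile, day, sequence, level = item_id.split("_")
    return {
        "platform": platform,  # S2A or S2B
        "mgrs_tile": tile,  # MGRS grid square of the scene
        "date": datetime.strptime(day, "%Y%m%d").date(),
        "sequence": sequence,
        "level": level,  # processing level
    }


for item_id in ["S2A_35SNA_20230827_0_L2A", "S2B_35SNA_20230703_0_L2A"]:
    print(parse_item_id(item_id))

# With the search results: [parse_item_id(item.id) for item in items]
```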

Let us inspect the metadata associated with the first item of the search results, starting with its acquisition date:

OUTPUT

2023-08-27 09:00:21.327000+00:00

Let us now look at the geometry and other properties as well.

OUTPUT

{'type': 'Polygon', 'coordinates': [[[27.290401625602243, 37.04621863329741], [27.23303872472207, 36.83882218126937], [27.011145718480538, 36.05673246264742], [28.21878905911668, 36.05053734221328], [28.234426643135546, 37.04015200857309], [27.290401625602243, 37.04621863329741]]]}

{'created': '2023-08-27T18:15:43.106Z', 'platform': 'sentinel-2a', 'constellation': 'sentinel-2', 'instruments': ['msi'], 'eo:cloud_cover': 0.955362, 'proj:epsg': 32635, 'mgrs:utm_zone': 35, 'mgrs:latitude_band': 'S', 'mgrs:grid_square': 'NA', 'grid:code': 'MGRS-35SNA', 'view:sun_azimuth': 144.36354987218, 'view:sun_elevation': 59.06665363921, 's2:degraded_msi_data_percentage': 0.0126, 's2:nodata_pixel_percentage': 12.146327, 's2:saturated_defective_pixel_percentage': 0, 's2:dark_features_percentage': 0.249403, 's2:cloud_shadow_percentage': 0.237454, 's2:vegetation_percentage': 6.073786, 's2:not_vegetated_percentage': 18.026696, 's2:water_percentage': 74.259061, 's2:unclassified_percentage': 0.198216, 's2:medium_proba_clouds_percentage': 0.613614, 's2:high_proba_clouds_percentage': 0.341423, 's2:thin_cirrus_percentage': 0.000325, 's2:snow_ice_percentage': 2.3e-05, 's2:product_type': 'S2MSI2A', 's2:processing_baseline': '05.09', 's2:product_uri': 'S2A_MSIL2A_20230827T084601_N0509_R107_T35SNA_20230827T115803.SAFE', 's2:generation_time': '2023-08-27T11:58:03.000000Z', 's2:datatake_id': 'GS2A_20230827T084601_042718_N05.09', 's2:datatake_type': 'INS-NOBS', 's2:datastrip_id': 'S2A_OPER_MSI_L2A_DS_2APS_20230827T115803_S20230827T085947_N05.09', 's2:granule_id': 'S2A_OPER_MSI_L2A_TL_2APS_20230827T115803_A042718_T35SNA_N05.09', 's2:reflectance_conversion_factor': 0.978189079756816, 'datetime': '2023-08-27T09:00:21.327000Z', 's2:sequence': '0', 'earthsearch:s3_path': 's3://sentinel-cogs/sentinel-s2-l2a-cogs/35/S/NA/2023/8/S2A_35SNA_20230827_0_L2A', 'earthsearch:payload_id': 'roda-sentinel2/workflow-sentinel2-to-stac/af0287974aaa3fbb037c6a7632f72742', 'earthsearch:boa_offset_applied': True, 'processing:software': {'sentinel2-to-stac': '0.1.1'}, 'updated': '2023-08-27T18:15:43.106Z'}

You can access items from the properties dictionary as usual in Python. For instance, for the EPSG code of the projected coordinate system:
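This lookup, together with a few other frequently used fields, can be sketched with plain dictionaries. The sample values below are excerpts copied from the geometry and properties printed above for `S2A_35SNA_20230827_0_L2A`. The helper names `summarize_properties` and `polygon_bbox` are hypothetical. With real search results you would pass `items[0].properties` and `items[0].geometry` instead.

```python
def summarize_properties(properties: dict) -> dict:
    """Pick a few frequently used fields out of an Item's properties dictionary."""
    return {
        "datetime": properties["datetime"],
        # e.g. 32635 (WGS 84 / UTM zone 35N) for this tile
        "epsg": properties["proj:epsg"],
        "cloud_cover": properties["eo:cloud_cover"],
        # .get() returns None instead of raising KeyError if a field is absent
        "water_percentage": properties.get("s2:water_percentage"),
    }


def polygon_bbox(geometry: dict) -> tuple:
    """(min_lon, min_lat, max_lon, max_lat) of a GeoJSON Polygon's outer ring."""
    ring = geometry["coordinates"][0]
    lons = [lon for lon, _ in ring]
    lats = [lat for _, lat in ring]
    return min(lons), min(lats), max(lons), max(lats)


# Excerpts of the metadata printed above
properties = {
    "datetime": "2023-08-27T09:00:21.327000Z",
    "proj:epsg": 32635,
    "eo:cloud_cover": 0.955362,
    "s2:water_percentage": 74.259061,
}
geometry = {
    "type": "Polygon",
    "coordinates": [[
        [27.290401625602243, 37.04621863329741],
        [27.23303872472207, 36.83882218126937],
        [27.011145718480538, 36.05673246264742],
        [28.21878905911668, 36.05053734221328],
        [28.234426643135546, 37.04015200857309],
        [27.290401625602243, 37.04621863329741],
    ]],
}

print(summarize_properties(properties))
min_lon, min_lat, max_lon, max_lat = polygon_bbox(geometry)
print((min_lon, min_lat, max_lon, max_lat))
# Bounding-box check only: is the Rhodes point inside the scene's extent?
print(min_lon <= 27.95 <= max_lon and min_lat <= 36.20 <= max_lat)
```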

Exercise: Search satellite scenes using metadata filters

Let’s add a filter on the cloud cover to select only the scenes with less than 1% cloud coverage. How many scenes now match our search?

Hint: generic metadata filters can be implemented via the query input argument of client.search, which requires the following syntax (see docs): query=['<property><operator><value>'].
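Following the syntax from the hint, the cloud-cover condition can be built as a one-element list. The sketch below also includes a client-side alternative that filters already retrieved items on their `eo:cloud_cover` property, which can help when an API does not support server-side filtering. Both helper names are hypothetical, and the commented search call assumes the `client` and `point` defined above.

```python
def cloud_cover_query(max_percent: float) -> list:
    """`query` argument for Client.search keeping scenes with eo:cloud_cover below max_percent."""
    return [f"eo:cloud_cover<{max_percent}"]


def filter_by_cloud_cover(items, max_percent: float) -> list:
    """Client-side alternative: keep items whose eo:cloud_cover is below max_percent.

    Items that have no eo:cloud_cover property are dropped.
    """
    return [
        item
        for item in items
        if item.properties.get("eo:cloud_cover") is not None
        and item.properties["eo:cloud_cover"] < max_percent
    ]


print(cloud_cover_query(1))  # ['eo:cloud_cover<1']

# One possible server-side solution (network access required):
# search = client.search(
#     collections=["sentinel-2-l2a"],
#     intersects=point,
#     datetime="2023-07-01/2023-08-31",
#     query=cloud_cover_query(1),
# )
# print(search.matched())
```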

Once we are happy with our search, we save the search results in a file:

This creates a file in GeoJSON format, which we can reuse here and in the next episodes. Note that this file contains only the metadata of the scenes that meet our criteria, not the data itself.

To load the saved search results as an ItemCollection, we can use pystac.ItemCollection.from_file(). Through this, we are instructing Python to use the from_file method of the ItemCollection class from the pystac library to load data from the specified GeoJSON file:
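Because the saved file is a GeoJSON FeatureCollection, its content can also be checked with the standard `json` module, without pystac. The sketch below assumes the results were saved with pystac's `ItemCollection.save_object` under the hypothetical name `search.json`. `read_saved_search` is a hypothetical helper as well.

```python
import json
from pathlib import Path


def read_saved_search(path) -> list:
    """Return (id, datetime, cloud cover) for every item stored in a saved search file.

    A saved ItemCollection is a GeoJSON FeatureCollection, so the json module is
    enough for a quick look at its content.
    """
    collection = json.loads(Path(path).read_text())
    if collection.get("type") != "FeatureCollection":
        raise ValueError(f"{path} is not a GeoJSON FeatureCollection")
    return [
        (
            feature["id"],
            feature["properties"].get("datetime"),
            feature["properties"].get("eo:cloud_cover"),
        )
        for feature in collection["features"]
    ]


# Usage, assuming the hypothetical file name "search.json":
# items.save_object("search.json")
# for row in read_saved_search("search.json"):
#     print(row)
```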

The loaded item collection (items_loaded) is equivalent to the one returned earlier by search.item_collection() (items). You can thus perform the same actions on it: you can check the number of items (len(items_loaded)), you can loop over items (for item in items_loaded: ...), and you can access individual elements using their index (items_loaded[0]).

Access the assets

So far we have only discussed metadata - but how can one get to the actual images of a satellite scene (the “assets” in the STAC nomenclature)? These can be reached via links that are made available through the item’s attribute assets. Let’s focus on the last item in the collection: this is the oldest in time, and it thus corresponds to an image taken before the wildfires.

OUTPUT

dict_keys(['aot', 'blue', 'coastal', 'granule_metadata', 'green', 'nir', 'nir08', 'nir09', 'red', 'rededge1', 'rededge2', 'rededge3', 'scl', 'swir16', 'swir22', 'thumbnail', 'tileinfo_metadata', 'visual', 'wvp', 'aot-jp2', 'blue-jp2', 'coastal-jp2', 'green-jp2', 'nir-jp2', 'nir08-jp2', 'nir09-jp2', 'red-jp2', 'rededge1-jp2', 'rededge2-jp2', 'rededge3-jp2', 'scl-jp2', 'swir16-jp2', 'swir22-jp2', 'visual-jp2', 'wvp-jp2'])

We can print a minimal description of the available assets:

OUTPUT

aot: Aerosol optical thickness (AOT)

blue: Blue (band 2) - 10m

coastal: Coastal aerosol (band 1) - 60m

granule_metadata: None

green: Green (band 3) - 10m

nir: NIR 1 (band 8) - 10m

nir08: NIR 2 (band 8A) - 20m

nir09: NIR 3 (band 9) - 60m

red: Red (band 4) - 10m

rededge1: Red edge 1 (band 5) - 20m

rededge2: Red edge 2 (band 6) - 20m

rededge3: Red edge 3 (band 7) - 20m

scl: Scene classification map (SCL)

swir16: SWIR 1 (band 11) - 20m

swir22: SWIR 2 (band 12) - 20m

thumbnail: Thumbnail image

tileinfo_metadata: None

visual: True color image

wvp: Water vapour (WVP)

aot-jp2: Aerosol optical thickness (AOT)

blue-jp2: Blue (band 2) - 10m

coastal-jp2: Coastal aerosol (band 1) - 60m

green-jp2: Green (band 3) - 10m

nir-jp2: NIR 1 (band 8) - 10m

nir08-jp2: NIR 2 (band 8A) - 20m

nir09-jp2: NIR 3 (band 9) - 60m

red-jp2: Red (band 4) - 10m

rededge1-jp2: Red edge 1 (band 5) - 20m

rededge2-jp2: Red edge 2 (band 6) - 20m

rededge3-jp2: Red edge 3 (band 7) - 20m

scl-jp2: Scene classification map (SCL)

swir16-jp2: SWIR 1 (band 11) - 20m

swir22-jp2: SWIR 2 (band 12) - 20m

visual-jp2: True color image

wvp-jp2: Water vapour (WVP)

Among other data files, the assets include multiple raster data files (one per optical band, as acquired by the multi-spectral instrument), a thumbnail, a true-color image (“visual”), instrument metadata and scene-classification information (“SCL”). Let’s get the URL of the thumbnail, which gives us a glimpse of the Sentinel-2 scene:

OUTPUT

https://sentinel-cogs.s3.us-west-2.amazonaws.com/sentinel-s2-l2a-cogs/35/S/NA/2023/7/S2A_35SNA_20230708_0_L2A/thumbnail.jpg

This can be used to download the corresponding file:
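A minimal sketch of such a download using only the standard library (`urllib.request.urlretrieve`) follows. The helper name `download` and the output file name are hypothetical, and `assets` is assumed to be the asset dictionary of the item selected above.

```python
import urllib.request
from pathlib import Path


def download(url: str, destination: str) -> Path:
    """Save the resource at `url` to `destination` and return the local path.

    Parent folders are created if needed. Any URL scheme supported by urllib
    (https://, file://, ...) can be used.
    """
    dest = Path(destination)
    dest.parent.mkdir(parents=True, exist_ok=True)
    urllib.request.urlretrieve(url, dest)
    return dest


# Usage with the thumbnail of the oldest scene (network access required):
# thumbnail_url = assets["thumbnail"].href
# download(thumbnail_url, "thumbnail.jpg")
```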

For comparison, we can check out the thumbnail of the most recent scene of the sequence considered (i.e. the first item in the item collection), which has been taken after the wildfires:

OUTPUT

https://sentinel-cogs.s3.us-west-2.amazonaws.com/sentinel-s2-l2a-cogs/35/S/NA/2023/8/S2A_35SNA_20230827_0_L2A/thumbnail.jpg

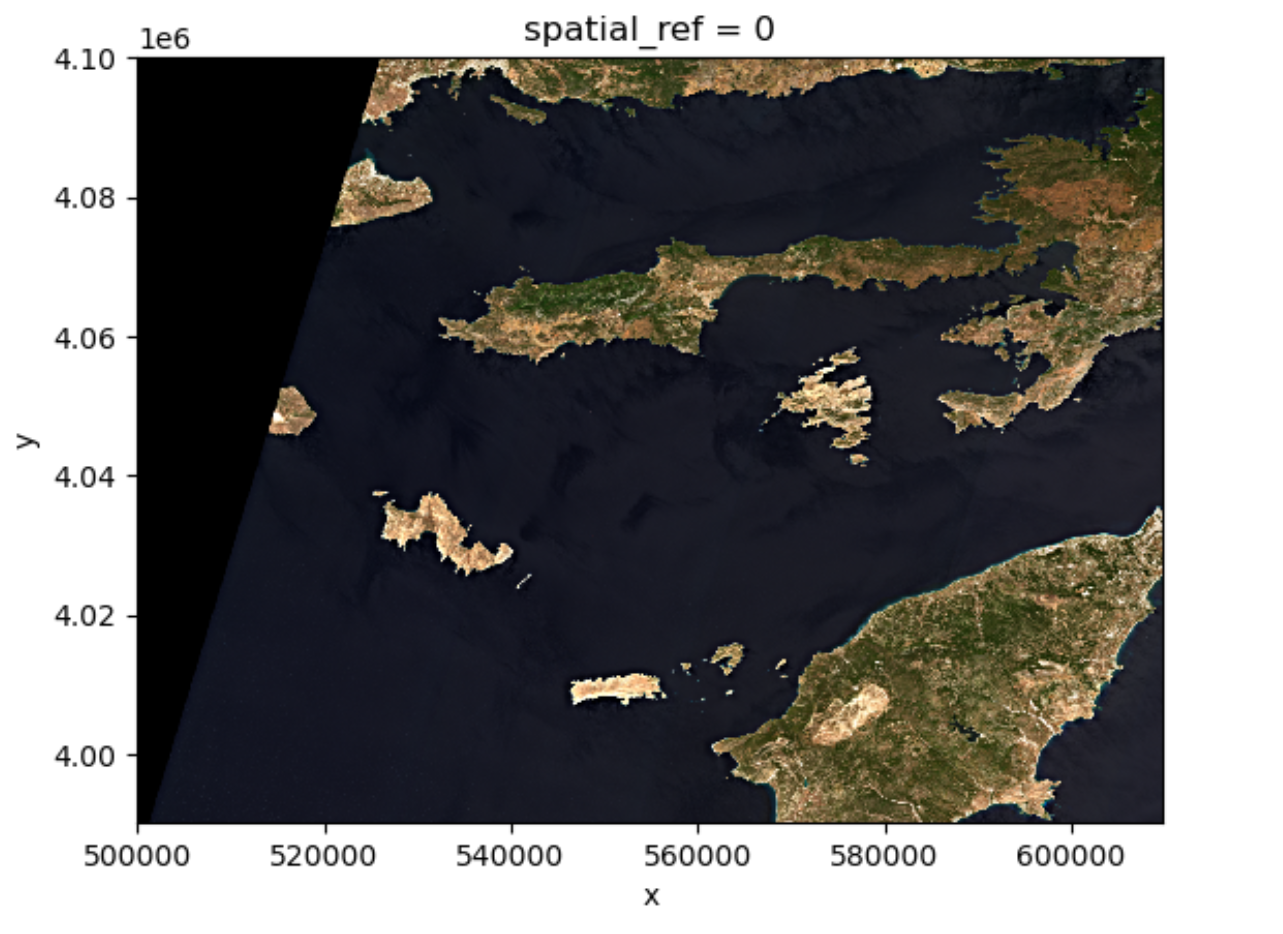

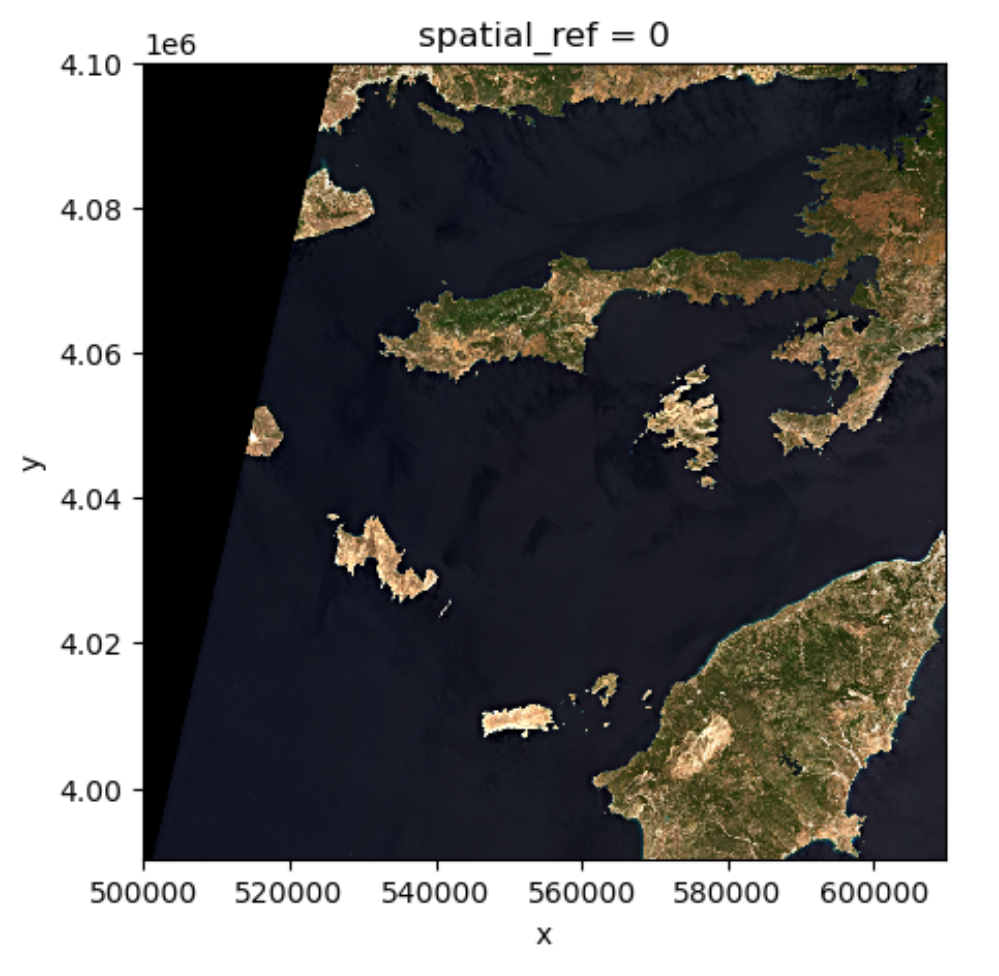

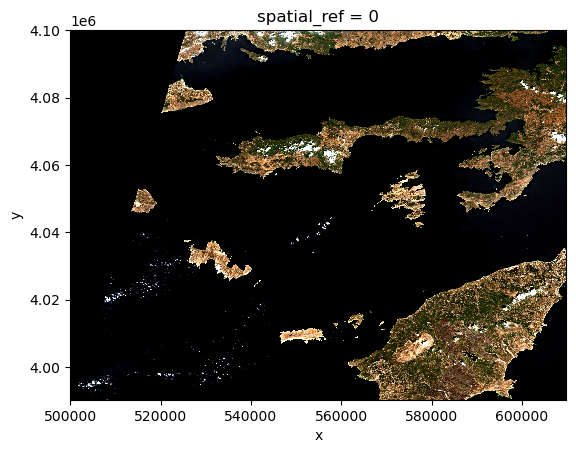

From the thumbnails alone we can already observe some dark spots on the island of Rhodes at the bottom right of the image!

In order to open the high-resolution satellite images and investigate the scenes in more detail, we will be using the rioxarray library. Note that this library can work with both local and remote raster data. For now, we will only take a quick look at the functionality of this library; we will learn more about it in the next episode.

Now let us focus on the red band by accessing the item red from the assets dictionary and getting its Hypertext Reference (also known as URL) attribute using .href.

For now we are using rioxarray to open the raster file.

PYTHON

import rioxarray

red_href = assets["red"].href

red = rioxarray.open_rasterio(red_href)

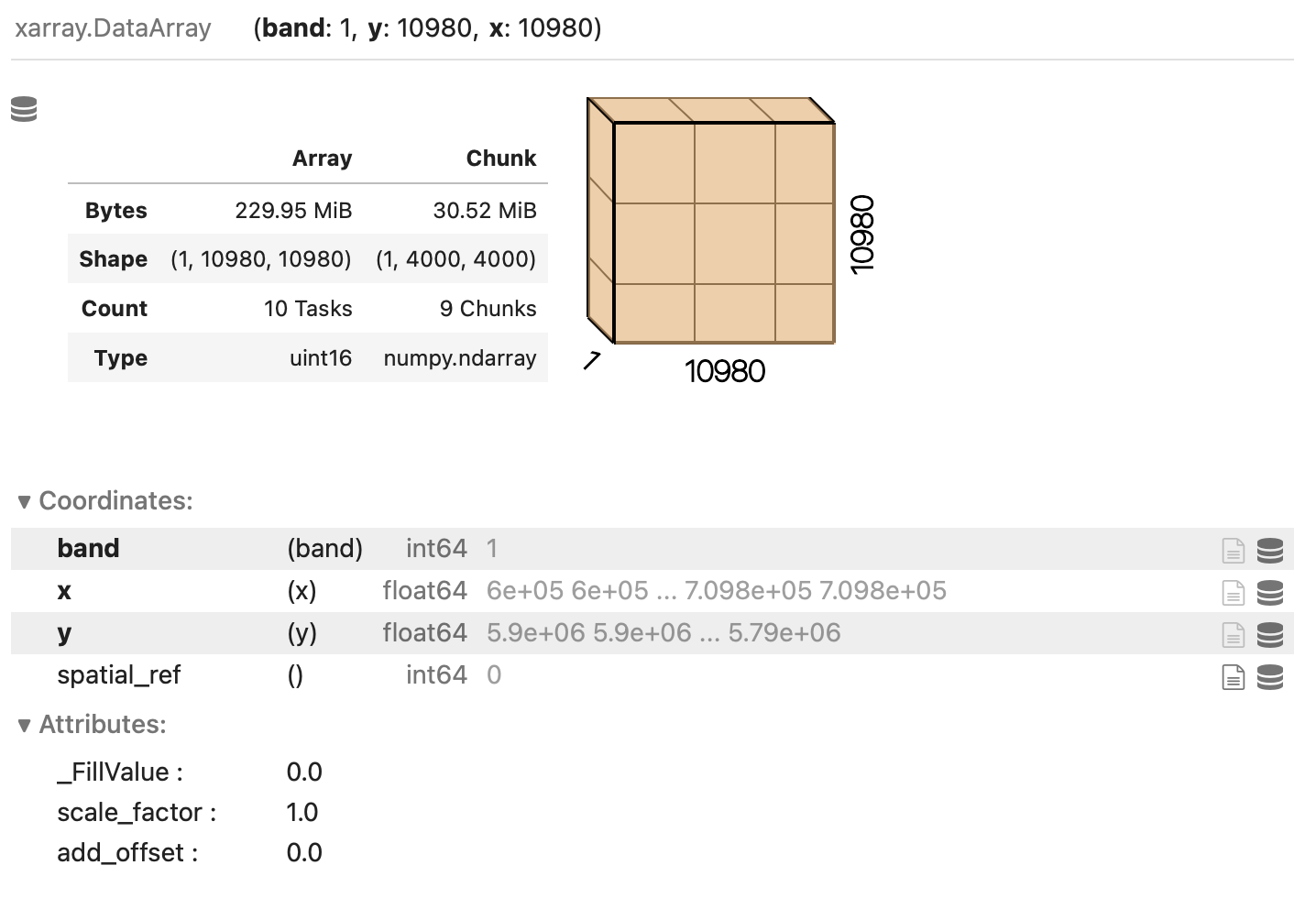

print(red)OUTPUT

<xarray.DataArray (band: 1, y: 10980, x: 10980)> Size: 241MB

[120560400 values with dtype=uint16]

Coordinates:

* band (band) int32 4B 1

* x (x) float64 88kB 5e+05 5e+05 5e+05 ... 6.098e+05 6.098e+05

* y (y) float64 88kB 4.1e+06 4.1e+06 4.1e+06 ... 3.99e+06 3.99e+06

spatial_ref int32 4B 0

Attributes:

AREA_OR_POINT: Area

OVR_RESAMPLING_ALG: AVERAGE

_FillValue: 0

scale_factor: 1.0

add_offset: 0.0

Now we want to save the data to our local machine using the to_raster method:

That might take a while, given there are 10,980 x 10,980 = 120,560,400 pixels (over a hundred million) in the 10-meter red band. But we can take a smaller subset before downloading it. Because the raster is a COG, we can download just what we need!

To do that, we use rioxarray’s clip_box method, with which you can set a bounding box defining the area you want. Next, we save the subset using to_raster again.
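The sketch below makes the size difference explicit and wraps the clip-and-save step. The sizes are simple arithmetic: 2 bytes per uint16 value, uncompressed. The bounding box centre (565000, 4005000) is a hypothetical location in UTM zone 35N metres chosen within the x/y ranges of the output above, not the box used in this episode. The helper names are hypothetical; `open_rasterio`, `rio.clip_box` and `rio.to_raster` are the rioxarray functions described in the text.

```python
def raster_nbytes(width: int, height: int, bands: int = 1, bytes_per_value: int = 2) -> int:
    """Uncompressed size in bytes; uint16 values take 2 bytes each."""
    return width * height * bands * bytes_per_value


def bbox_around(x: float, y: float, half_size: float) -> tuple:
    """Square (minx, miny, maxx, maxy) box centred on (x, y), in the raster's CRS units."""
    return (x - half_size, y - half_size, x + half_size, y + half_size)


def save_subset(href: str, bbox: tuple, path: str):
    """Clip a local or remote raster to bbox and write the result to path (needs rioxarray)."""
    import rioxarray

    raster = rioxarray.open_rasterio(href)  # lazy: pixel values are not read yet
    subset = raster.rio.clip_box(*bbox)  # minx, miny, maxx, maxy
    subset.rio.to_raster(path)
    return subset


# Full 10 m band: 10980 x 10980 uint16 pixels, as in the output above
print(raster_nbytes(10980, 10980))  # 241120800 bytes, i.e. about 241 MB

# Hypothetical 10 km x 10 km box; at 10 m resolution that is 1000 x 1000 pixels
bbox = bbox_around(565000, 4005000, 5000)
print(bbox)
print(raster_nbytes(1000, 1000))  # 2000000 bytes, i.e. about 2 MB

# save_subset(red_href, bbox, "red_subset.tif")  # network access required
```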

The difference is 241 Megabytes for the full image vs less than 10 Megabytes for the subset.

Exercise: Downloading Landsat 8 Assets

In this exercise we put into practice all the skills we have learned in this episode to retrieve images from a different mission: Landsat 8. In particular, we browse images from the Harmonized Landsat Sentinel-2 (HLS) project, which provides images from NASA’s Landsat 8 and ESA’s Sentinel-2 that have been made consistent with each other. The HLS catalog is indexed in the NASA Common Metadata Repository (CMR) and it can be accessed from the STAC API endpoint at the following URL: https://cmr.earthdata.nasa.gov/stac/LPCLOUD.

- Using pystac_client, search for all assets of the Landsat 8 collection (HLSL30.v2.0) from February to March 2021, intersecting the point with longitude/latitude coordinates (-73.97, 40.78) deg.

- Visualize an item’s thumbnail (asset key browse).

PYTHON

from pystac_client import Client
from shapely.geometry import Point

# connect to the STAC endpoint
cmr_api_url = "https://cmr.earthdata.nasa.gov/stac/LPCLOUD"
client = Client.open(cmr_api_url)

# set up the search
search = client.search(
    collections=["HLSL30.v2.0"],
    intersects=Point(-73.97, 40.78),
    datetime="2021-02-01/2021-03-30",
)  # NASA CMR cloud cover filtering is currently broken: https://github.com/nasa/cmr-stac/issues/239

# retrieve search results
items = search.item_collection()
print(len(items))

OUTPUT

5

PYTHON

# sort the items by cloud cover and select the least cloudy one
items_sorted = sorted(items, key=lambda x: x.properties["eo:cloud_cover"])
item = items_sorted[0]
print(item)

OUTPUT

<Item id=HLS.L30.T18TWL.2021039T153324.v2.0>

PYTHON

print(item.assets["browse"].href)

OUTPUT

https://data.lpdaac.earthdatacloud.nasa.gov/lp-prod-public/HLSL30.020/HLS.L30.T18TWL.2021039T153324.v2.0/HLS.L30.T18TWL.039T153324.v2.0.jpg

Public catalogs, protected data

Publicly accessible catalogs and STAC endpoints do not necessarily imply publicly accessible data. Data providers, in fact, may limit data access to specific infrastructures and/or require authentication. For instance, the NASA CMR STAC endpoint considered in the last exercise offers publicly accessible metadata for the HLS collection, but most of the linked assets are available only for registered users (the thumbnail is publicly accessible).

The authentication procedure for datasets with restricted access might differ depending on the data provider. For the NASA CMR, follow these steps in order to access data using Python:

- Create a NASA Earthdata login account here;

- Set up a netrc file with your credentials, e.g. by using this script;

- Define the following environment variables:
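A minimal sketch of this last step follows. It assumes that GDAL (used by rasterio and rioxarray) picks up the Earthdata credentials from the netrc file set up above. It also assumes that GDAL is pointed to a file where it can store and reuse the session cookies, through its configuration options `GDAL_HTTP_COOKIEFILE` and `GDAL_HTTP_COOKIEJAR`, which GDAL also reads from environment variables. The cookie-file location is an arbitrary choice. Set the variables before opening any remote file.

```python
import os
from pathlib import Path

# Assumption: any writable location works; the home directory is an arbitrary choice.
cookie_file = str(Path.home() / "cookies.txt")

os.environ["GDAL_HTTP_COOKIEFILE"] = cookie_file  # file GDAL reads cookies from
os.environ["GDAL_HTTP_COOKIEJAR"] = cookie_file  # file GDAL writes received cookies to
```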

- Accessing satellite images via the providers’ API enables a more reliable and scalable data retrieval.

- STAC catalogs can be browsed and searched using the same tools and scripts.

- rioxarray allows you to open and download remote raster files.

Content from Read and visualize raster data

Last updated on 2024-06-30

Overview

Questions

- How is a raster represented by rioxarray?

- How do I read and plot raster data in Python?

- How can I handle missing data?

Objectives

- Describe the fundamental attributes of a raster dataset.

- Explore raster attributes and metadata using Python.

- Read rasters into Python using the rioxarray package.

- Visualize single/multi-band raster data.

Raster Data

In the first episode of this course we provided an introduction to what raster datasets are and how they differ from vector data. In this episode we will dive deeper into raster data and focus on how to work with it. We introduce fundamental principles, Python packages, metadata and raster attributes for working with this type of data. In addition, we will explore how Python handles missing and bad data values.

The Python package we will use throughout this episode to handle raster data is rioxarray. This package is based on the popular rasterio (which is built upon the GDAL library) for working with raster data and xarray for working with multi-dimensional arrays.

rioxarray extends xarray by providing top-level functions like the open_rasterio function to open raster datasets. Furthermore, it adds a set of methods to the main objects of the xarray package, like the Dataset and the DataArray. These methods are made available via the rio accessor and become available from xarray objects after importing rioxarray.

Exploring rioxarray and getting help

Since a lot of the functions, methods and attributes from rioxarray originate from other packages (mostly rasterio), the documentation is in some cases limited and requires a little puzzling. It is therefore recommended to make the most of the notebook’s tab completion to go through the various functionalities. In addition, adding a question mark ? after any function or method displays its documentation and available options.

For instance, if you want to understand the options for rioxarray’s open_rasterio function:

Introduce the data

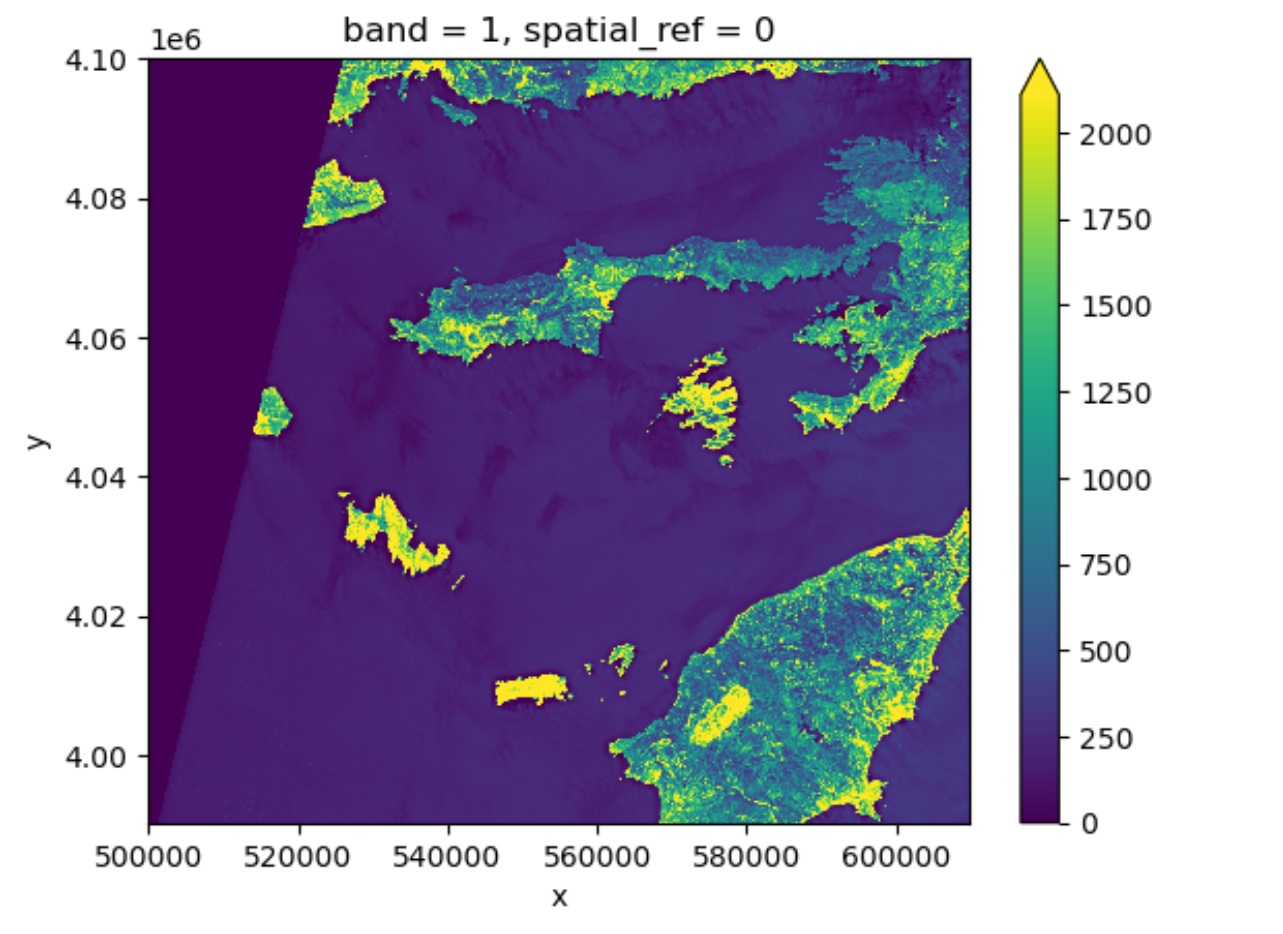

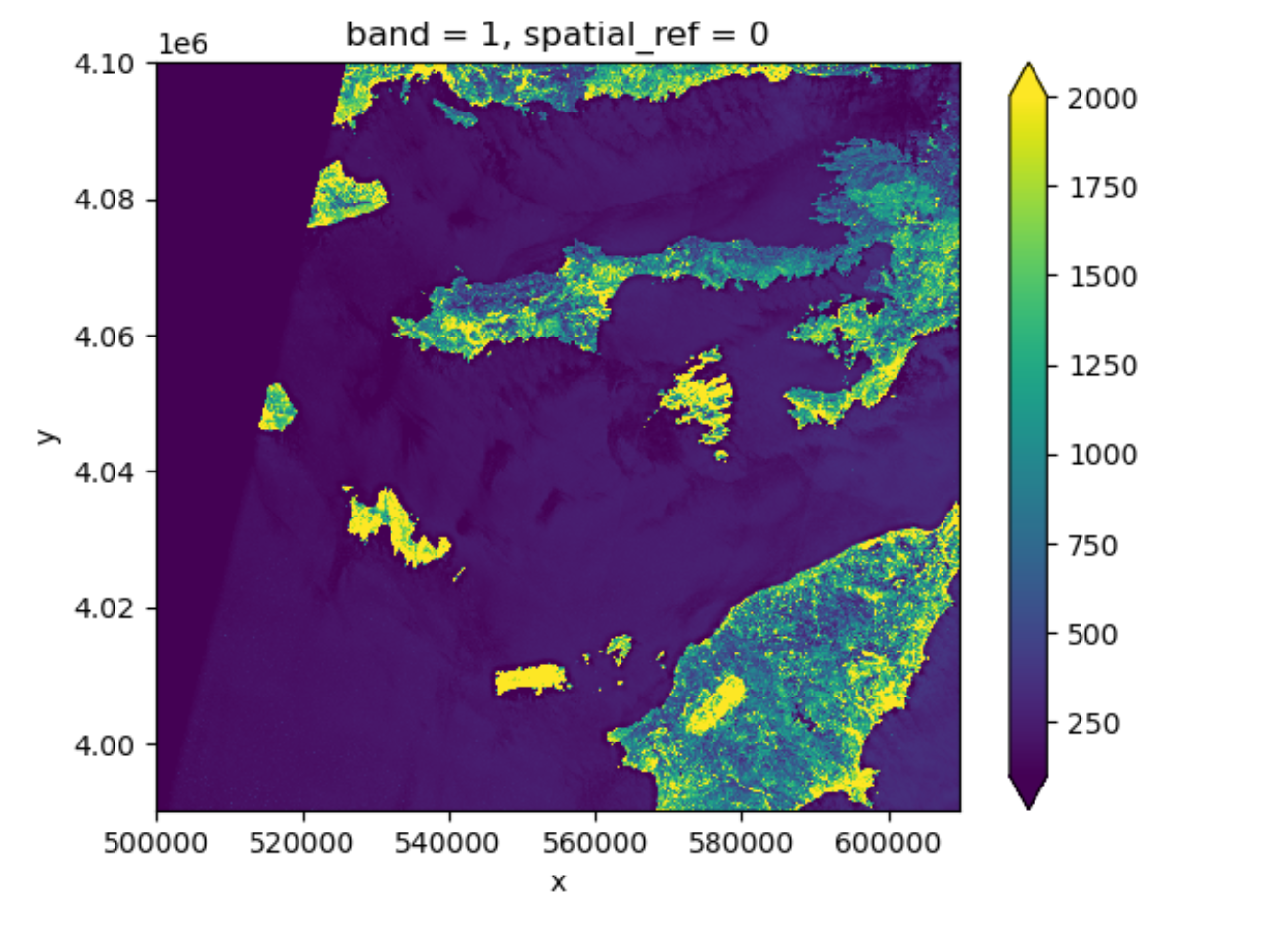

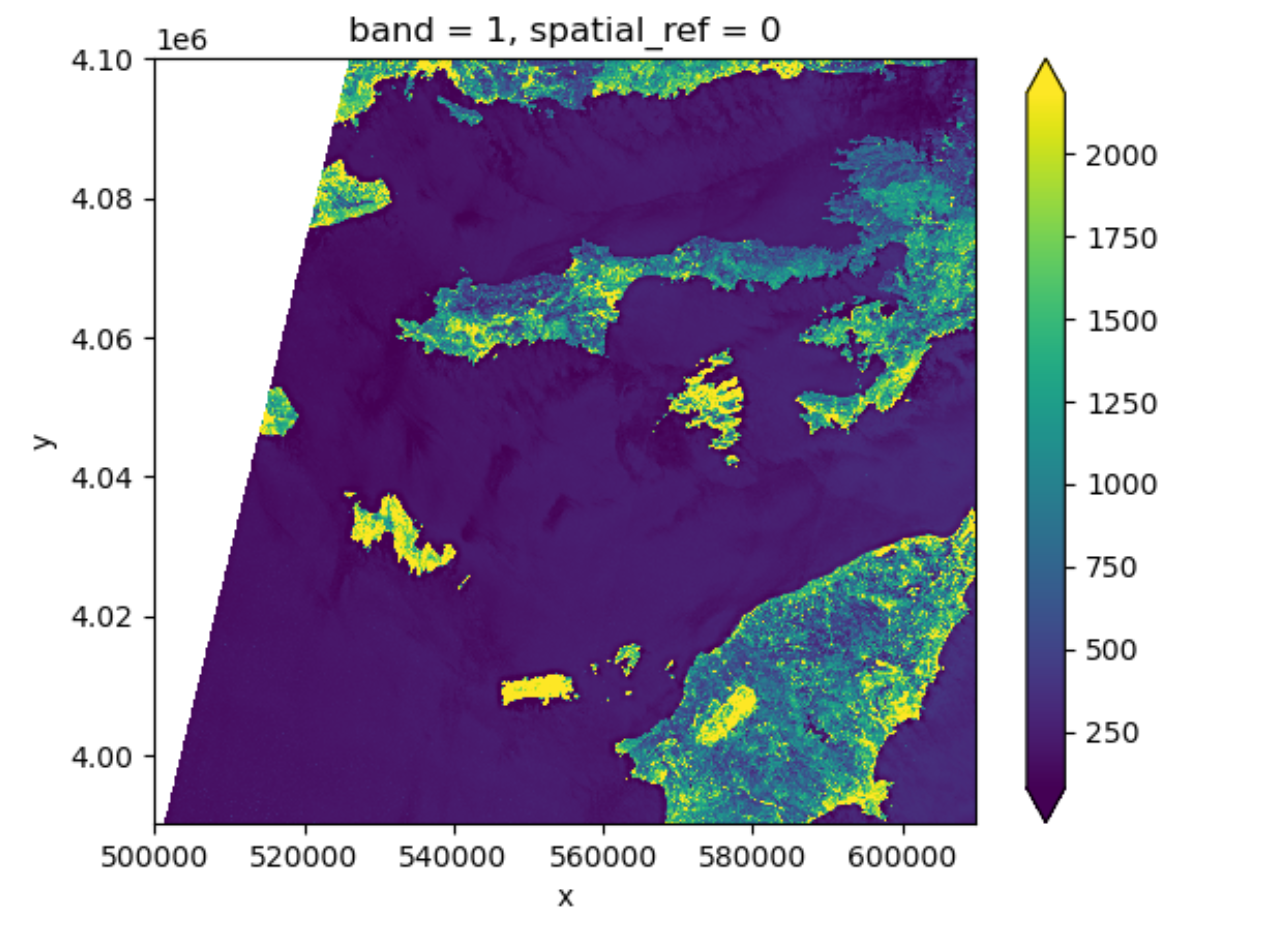

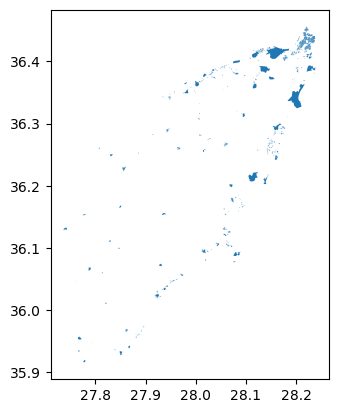

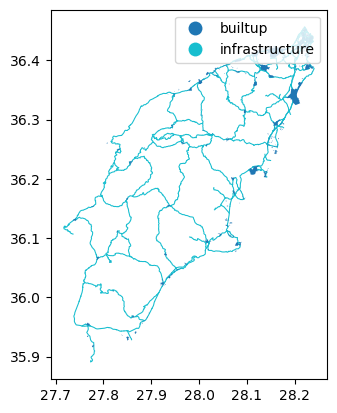

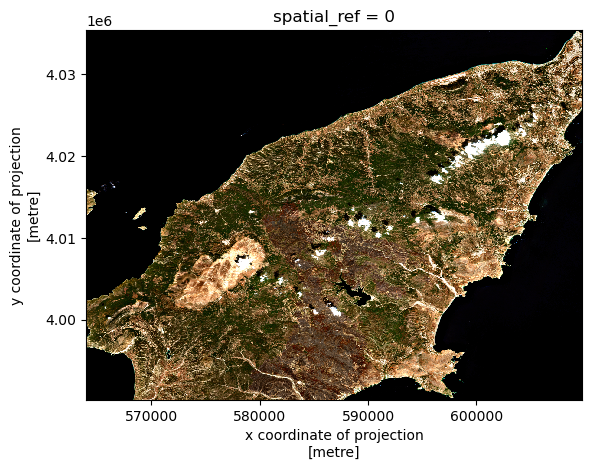

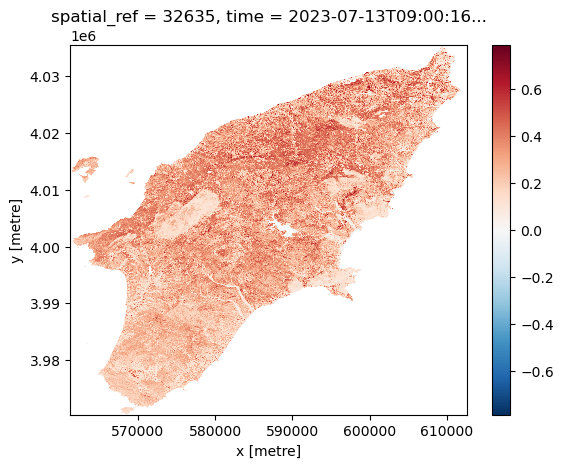

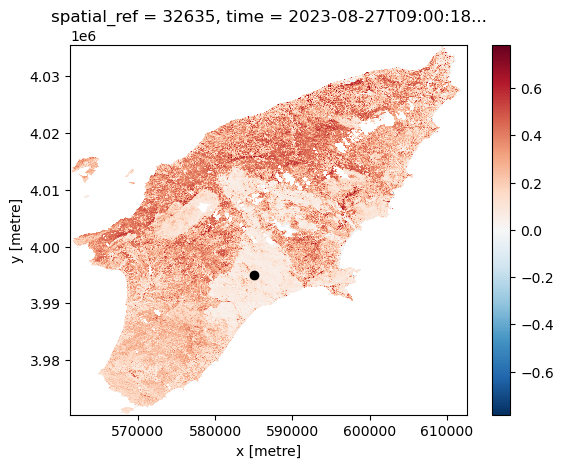

In this episode, we will use satellite images from the search that we have carried out in the episode: “Access satellite imagery using Python”. Briefly, we have searched for Sentinel-2 scenes of Rhodes from July 1st to August 31st 2023 that have less than 1% cloud coverage. The search resulted in 11 scenes. We focus here on the most recent scene (August 27th), since that would show the situation after the wildfire, and use this as an example to demonstrate raster data loading and visualization.

For your convenience, we included the scene of interest among the datasets that you have already downloaded when following the setup instructions (the raster data files should be in the data/sentinel2 directory). You should, however, be able to download the same datasets “on-the-fly” using the JSON metadata file that was created in the previous episode (the file rhodes_sentinel-2.json).

If you choose to work with the provided data (which is advised in case you are working offline or have a slow/unstable network connection) you can skip the rest of this section and continue with the following section: Load a Raster and View Attributes.

If you instead want to experiment with downloading the data on-the-fly, you need to load the file rhodes_sentinel-2.json, which contains information on where and how to access the target images from the remote repository:

The loaded item collection is equivalent to the one returned by pystac_client when querying the API in the episode: “Access satellite imagery using Python”. You can thus perform the same actions on it, like accessing the individual items using their index. Here we select the first item in the collection, which is the most recent:

OUTPUT

<Item id=S2A_35SNA_20230827_0_L2A>

In this episode we will consider the red band and the true color image associated with this scene. They are labelled with the red and visual keys, respectively, in the asset dictionary. For each asset, we extract the URL / href (Hypertext Reference) that points to the file, and store it in a variable that we can later use to access the data in place of the local raster data paths:

Load a Raster and View Attributes

To analyse the burned areas, we are interested in the red band of the satellite scene. In episode 9 we will further explain why the characteristics of that band are interesting in relation to wildfires. For now, we can load the red band using the function rioxarray.open_rasterio():

The first call to rioxarray.open_rasterio() opens the file and returns an xarray.DataArray object, which is stored in a variable, i.e. rhodes_red. Reading in the data with xarray instead of rioxarray also returns an xarray.DataArray, but the output will not contain the geospatial metadata (such as projection information). You can use numpy functions or built-in Python math operators on an xarray.DataArray just like on a numpy array. Calling the variable name of the DataArray also prints out all of its metadata information.

By printing the variable we can get a quick look at the shape and attributes of the data.

OUTPUT

<xarray.DataArray (band: 1, y: 10980, x: 10980)> Size: 241MB

[120560400 values with dtype=uint16]

Coordinates:

* band (band) int32 4B 1

* x (x) float64 88kB 5e+05 5e+05 5e+05 ... 6.098e+05 6.098e+05

* y (y) float64 88kB 4.1e+06 4.1e+06 4.1e+06 ... 3.99e+06 3.99e+06

spatial_ref int32 4B 0

Attributes:

AREA_OR_POINT: Area

OVR_RESAMPLING_ALG: AVERAGE

_FillValue: 0

scale_factor: 1.0

add_offset: 0.0

The output tells us that we are looking at an xarray.DataArray with 1 band, 10980 rows, and 10980 columns. We can also see the number of pixel values in the DataArray (10980 × 10980 = 120560400) and the type of those pixel values, which is 16-bit unsigned integer (uint16). The DataArray also stores different values for the coordinates of the DataArray. When using rioxarray, the term coordinates refers to spatial coordinates like x and y but also to the band coordinate. Each of these sequences of values has its own data type, like float64 for the spatial coordinates and int32 for the band coordinate.

This DataArray object also has a couple of attributes, accessed like .rio.crs, .rio.nodata, and .rio.bounds(), which contain the metadata for the file we opened (in Jupyter you can browse through these attributes by pressing Tab for auto-completion, or have a look at the documentation here). Note that many of the metadata are accessed as attributes without (); however, since bounds() is a method (i.e. a function in an object), it requires these parentheses. This is also the case for .rio.resolution().

PYTHON

print(rhodes_red.rio.crs)

print(rhodes_red.rio.nodata)

print(rhodes_red.rio.bounds())

print(rhodes_red.rio.width)

print(rhodes_red.rio.height)

print(rhodes_red.rio.resolution())

OUTPUT

EPSG:32635

0

(499980.0, 3990240.0, 609780.0, 4100040.0)

10980

10980

(10.0, -10.0)

The Coordinate Reference System, or rhodes_red.rio.crs, is reported as the string EPSG:32635. The nodata value is encoded as 0, and the bounding box corners of our raster are represented by the output of .bounds() as a tuple (like a list, but you can’t edit it) in the order (left, bottom, right, top). The height and width match what we saw when we printed the DataArray, but by using .rio.width and .rio.height we can access these values if we need them in calculations.
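These printed numbers are consistent with each other: dividing the extent given by the bounds by the 10 m pixel size recovers the width and height. The negative y resolution simply reflects that row indices increase southwards while northings decrease. A plain-Python check, using the values copied from the output above:

```python
# Values copied from the printed output of rhodes_red
left, bottom, right, top = (499980.0, 3990240.0, 609780.0, 4100040.0)
res_x, res_y = (10.0, -10.0)

width = round((right - left) / res_x)   # number of columns
height = round((bottom - top) / res_y)  # both terms negative, so the count is positive
print(width, height)  # 10980 10980
```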

Visualize a Raster

After viewing the attributes of our raster, we can examine the raw values of the array with .values:

OUTPUT

array([[[ 0, 0, 0, ..., 8888, 9075, 8139],

[ 0, 0, 0, ..., 10444, 10358, 8669],

[ 0, 0, 0, ..., 10346, 10659, 9168],

...,

[ 0, 0, 0, ..., 4295, 4289, 4320],

[ 0, 0, 0, ..., 4291, 4269, 4179],

[ 0, 0, 0, ..., 3944, 3503, 3862]]], dtype=uint16)

This can give us a quick view of the values of our array, but only of the first and last few rows and columns. The zeros in the first columns correspond to the nodata value (0). Since our raster is loaded in Python as a DataArray, we can plot it in one line, similar to a pandas DataFrame, with DataArray.plot().

Notice that rioxarray helpfully allows us to plot this raster with spatial coordinates on the x and y axis (this is not the default in many cases with other functions or libraries). Nice plot! However, it probably took a while to load, so it makes sense to resample it.

Resampling the raster image

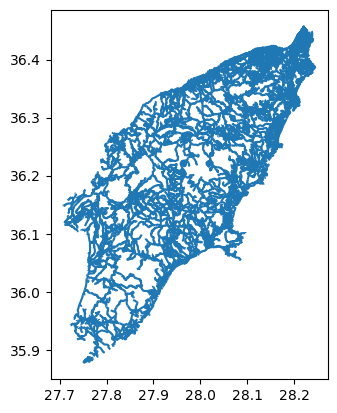

The red band image is available as a raster file with 10 m resolution, which makes it a relatively large file (a few hundred MB). In order to keep calculations “manageable” (reasonable execution time and memory usage) we select a lower-resolution version of the image, taking advantage of the so-called “pyramidal” structure of cloud-optimized GeoTIFFs (COGs). COGs, in fact, typically include multiple lower-resolution versions of the original image, called “overviews”, in the same file. This allows us to avoid downloading high-resolution images when only quick previews are required.

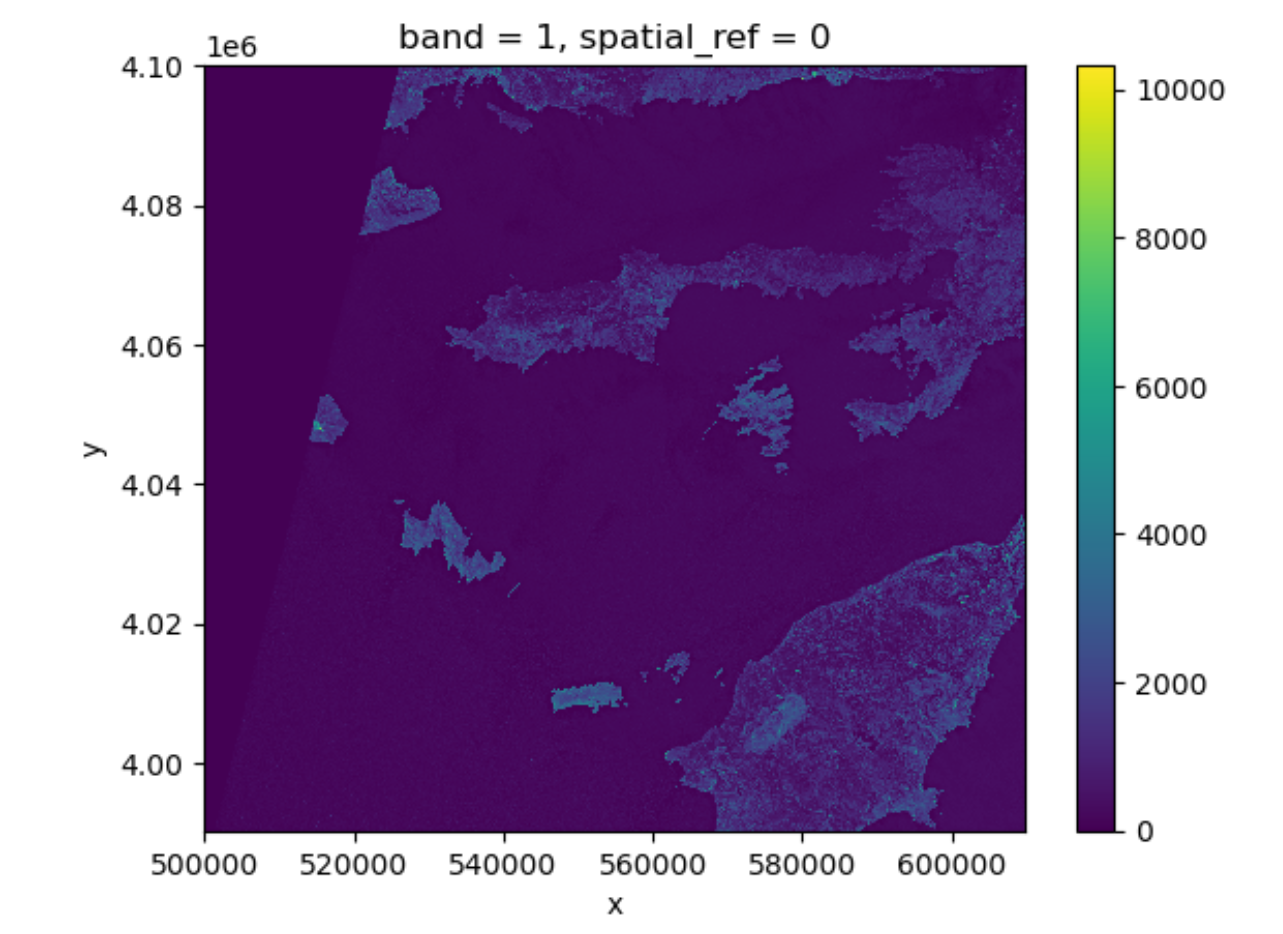

Overviews are often computed using powers of 2 as down-sampling (or zoom) factors. So, typically, the first level overview (index 0) corresponds to a zoom factor of 2, the second level overview (index 1) corresponds to a zoom factor of 4, and so on. Here, we open the third level overview (index 2, zoom factor 8) and check that the resolution is about 80 m:

PYTHON

import rioxarray

rhodes_red_80 = rioxarray.open_rasterio("data/sentinel2/red.tif", overview_level=2)

print(rhodes_red_80.rio.resolution())

OUTPUT

(79.97086671522214, -79.97086671522214)

Let’s plot this one.
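Why 79.97 m rather than exactly 80 m? A plausible explanation, assuming the overview size is rounded up when the 10980 pixels are divided by the zoom factor (as GDAL does when it builds overviews): the overview keeps the same 109.8 km extent but spreads it over a whole number of pixels. The arithmetic in plain Python:

```python
import math

full_size = 10980   # pixels per side at full resolution (from .rio.width/.rio.height)
full_res = 10.0     # metres
overview_level = 2  # third overview
zoom = 2 ** (overview_level + 1)  # level 0 -> 2, level 1 -> 4, level 2 -> 8

extent = full_size * full_res                # 109800 m, unchanged by resampling
overview_size = math.ceil(full_size / zoom)  # 1372.5 rounded up to 1373 pixels
overview_res = extent / overview_size        # slightly less than 80 m
print(zoom, overview_size, overview_res)
```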

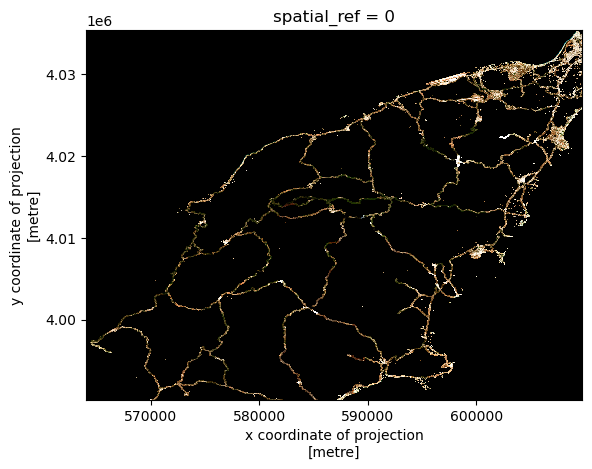

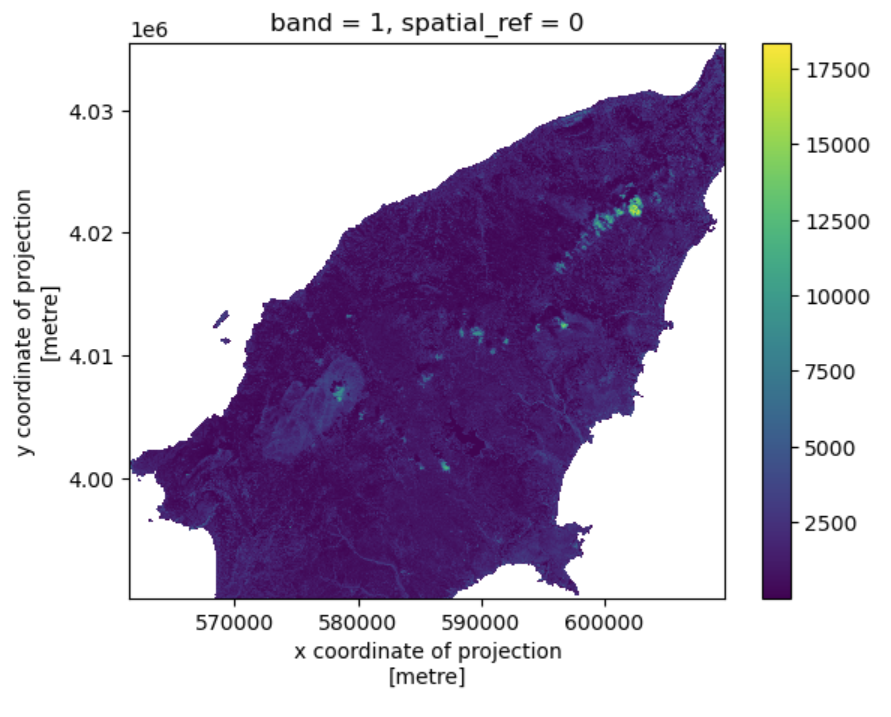

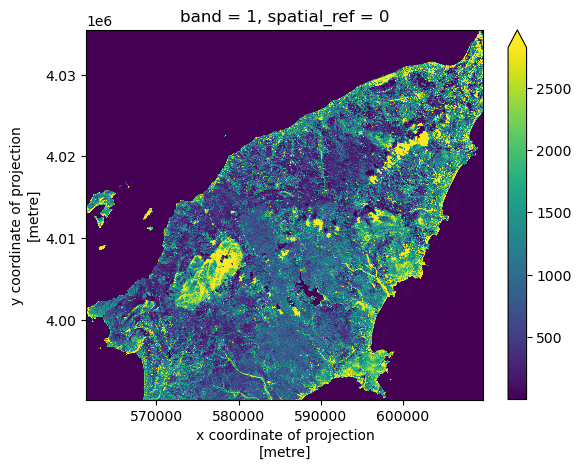

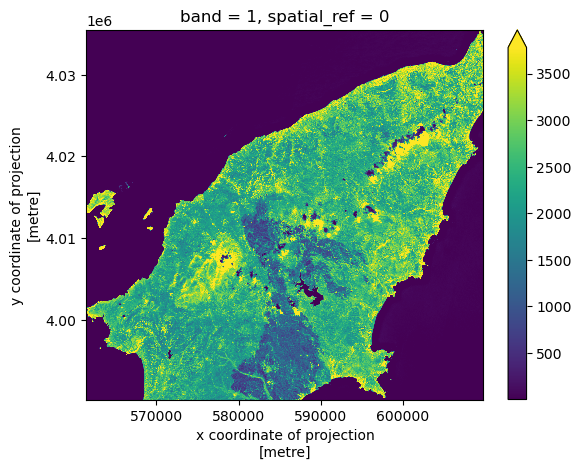

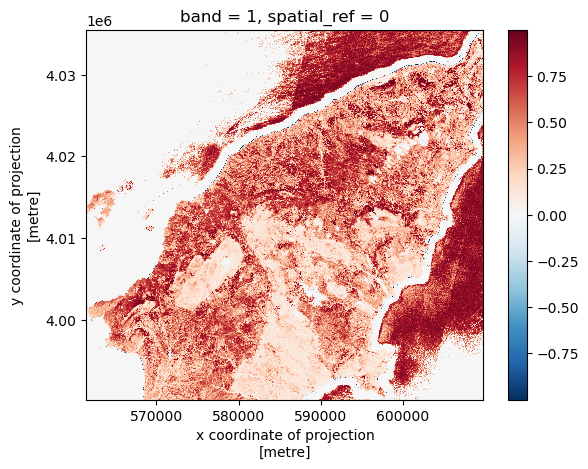

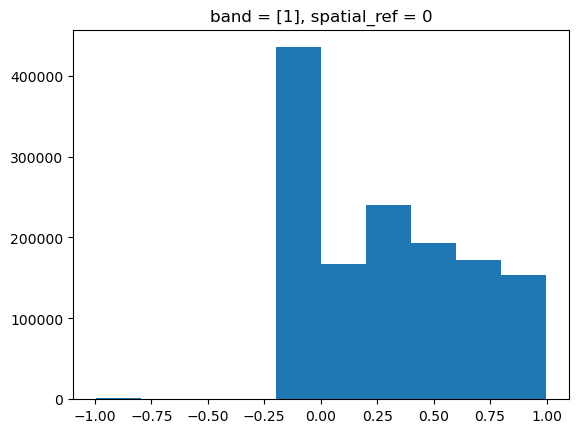

This plot shows the satellite measurement of the red band for Rhodes after the wildfire. According to the Sentinel-2 documentation, this is a band with a central wavelength of 665 nm. Its native spatial resolution is 10 m, although here we plot the 80 m overview. Note that band=1 in the image title refers to the ordering of all the bands in the DataArray, not to the Sentinel-2 band number 04 that we saw in the pystac search results.

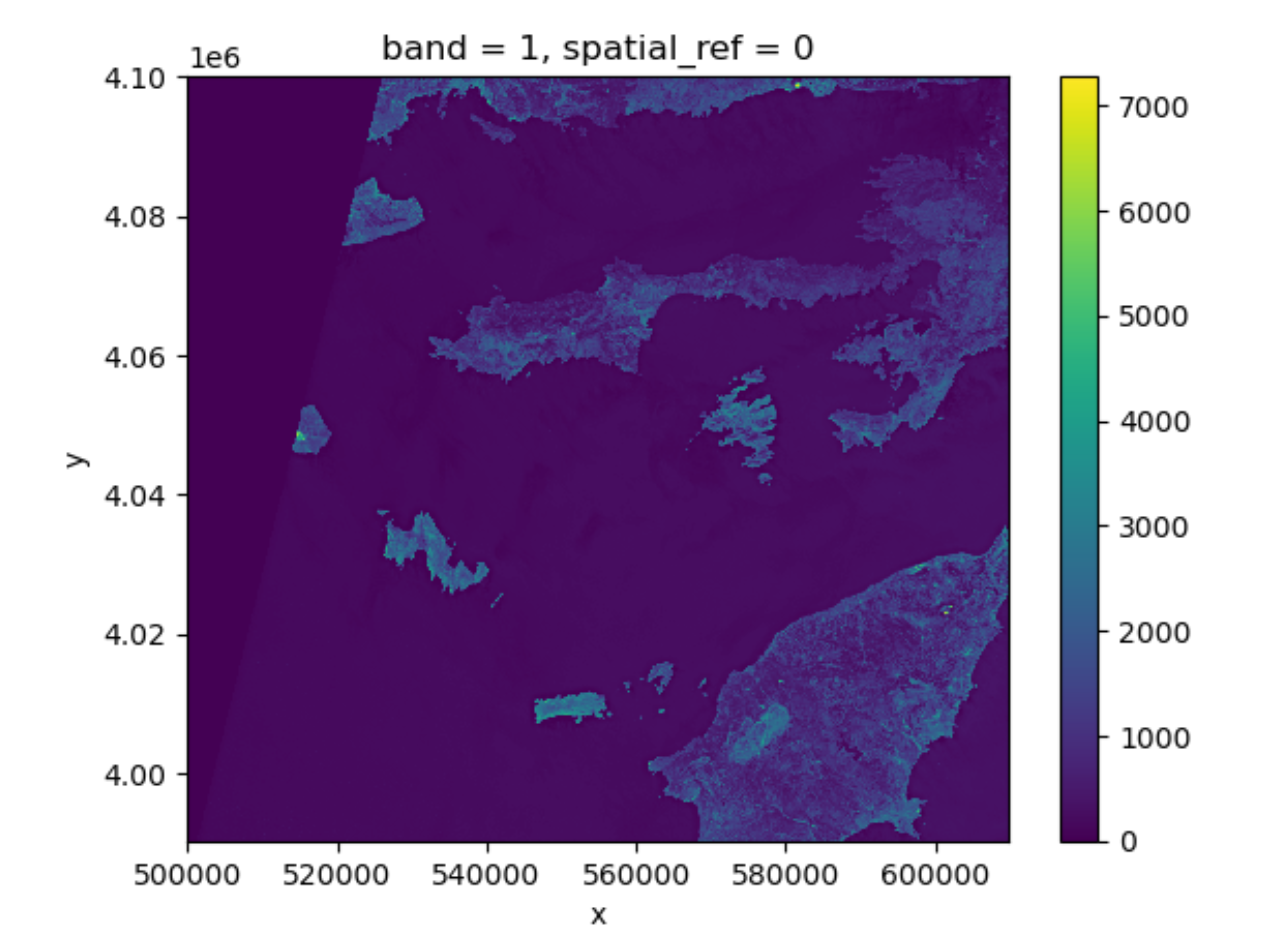

Tool Tip

The option robust=True sets the color limits to the 2nd and 98th percentiles of the values, so that a few extreme values do not dominate the color scale. Of course, this will not work for every case.

Now the color limit is set in a way fitting most of the values in the image. We have a better view of the ground pixels.
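Roughly what robust=True computes, sketched with numpy on a hypothetical array: without it, the color limits span the full minimum-to-maximum range; with it, they become the 2nd and 98th percentiles, so a handful of very bright pixels no longer stretch the scale. Note that nodata pixels stored as 0 are ordinary finite values and still count unless they are masked first.

```python
import numpy as np

# Hypothetical pixel values with a few very bright outliers
rng = np.random.default_rng(seed=0)
values = rng.integers(200, 2000, size=1000).astype(float)
values[:5] = 20000.0  # a handful of saturated pixels

# Default color limits: the full range of the data
print(values.min(), values.max())

# Approximate robust color limits: 2nd and 98th percentiles
vmin, vmax = np.percentile(values, [2, 98])
print(vmin, vmax)
```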

For a customized display range, you can also manually specify the keywords vmin and vmax. For example, plotting between 100 and 2000:

More options can be consulted here.

You will notice that these parameters are part of the imshow method of the plot function. Since plot builds on matplotlib and is so widely used, your Python environment helps you to interpret the parameters without having to specify the method. It is a service to help you, but it can be confusing when teaching it. We will explain more about this below.
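A minimal sketch of manual color limits with the imshow plotting method, assuming xarray and matplotlib are installed. It uses a small hypothetical 2D array instead of the Rhodes data and the non-interactive Agg backend, so it runs without a display; the returned matplotlib image reports the color limits that were applied.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen
import matplotlib.pyplot as plt
import numpy as np
import xarray as xr

# Hypothetical 2D raster standing in for a single band
data = xr.DataArray(
    np.linspace(0, 4000, 20 * 30).reshape(20, 30),
    coords={"y": np.arange(20), "x": np.arange(30)},
    dims=("y", "x"),
    name="red",
)

# vmin/vmax fix the color limits; values outside them get the end colors
image = data.plot.imshow(vmin=100, vmax=2000)
print(image.get_clim())
plt.close("all")
```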

View Raster Coordinate Reference System (CRS) in Python

Another piece of information that we’re interested in is the CRS, and it can be accessed with .rio.crs. We introduced the concept of a CRS in an earlier episode. Now we will see how features of the CRS appear in our data file and what meanings they have. We can view the CRS string associated with our DataArray’s rio object using the crs attribute.

OUTPUT

EPSG:32635

To print the EPSG code number as an int, we use the .to_epsg() method (which originates from rasterio's CRS.to_epsg):

OUTPUT

32635

EPSG codes are great for succinctly representing a particular coordinate reference system. But what if we want to see more details about the CRS, like the units? For that, we can use pyproj, a library for representing and working with coordinate reference systems.

OUTPUT

<Projected CRS: EPSG:32635>

Name: WGS 84 / UTM zone 35N

Axis Info [cartesian]:

- E[east]: Easting (metre)

- N[north]: Northing (metre)

Area of Use:

- name: Between 24°E and 30°E, northern hemisphere between equator and 84°N, onshore and offshore. Belarus. Bulgaria. Central African Republic. Democratic Republic of the Congo (Zaire). Egypt. Estonia. Finland. Greece. Latvia. Lesotho. Libya. Lithuania. Moldova. Norway. Poland. Romania. Russian Federation. Sudan. Svalbard. Türkiye (Turkey). Uganda. Ukraine.

- bounds: (24.0, 0.0, 30.0, 84.0)

Coordinate Operation:

- name: UTM zone 35N

- method: Transverse Mercator

Datum: World Geodetic System 1984 ensemble

- Ellipsoid: WGS 84

- Prime Meridian: Greenwich

The CRS class from the pyproj library allows us to create a CRS object with methods and attributes for accessing specific information about a CRS, or the detailed summary shown above.
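A short sketch of these pyproj calls, assuming pyproj is installed. It builds the CRS directly from the EPSG code (with the lesson data, the code would come from `rhodes_red_80.rio.crs.to_epsg()`), then reads the name, the longitude range of the area of use, and the unit of each axis.

```python
from pyproj import CRS

epsg = 32635     # e.g. rhodes_red_80.rio.crs.to_epsg()
crs = CRS(epsg)  # an integer is interpreted as an EPSG code

print(crs.name)                                    # human-readable name
print(crs.area_of_use.west, crs.area_of_use.east)  # longitude range of the zone
for axis in crs.axis_info:                         # one entry per axis, with its unit
    print(axis.name, axis.direction, axis.unit_name)
```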

A particularly useful attribute is area_of_use, which shows the geographic bounds within which the CRS is intended to be used.

OUTPUT

AreaOfUse(west=24.0, south=0.0, east=30.0, north=84.0, name='Between 24°E and 30°E, northern hemisphere between equator and 84°N, onshore and offshore. Belarus. Bulgaria. Central African Republic. Democratic Republic of the Congo (Zaire). Egypt. Estonia. Finland. Greece. Latvia. Lesotho. Libya. Lithuania. Moldova. Norway. Poland. Romania. Russian Federation. Sudan. Svalbard. Türkiye (Turkey). Uganda. Ukraine.')

Exercise: find the axis units of the CRS

What units are our data in? See if you can find a method to examine this information using help(crs) or dir(crs).

crs.axis_info tells us that the CRS for our raster has two axes and both are in metres. We could also get this information from the attribute rhodes_red_80.rio.crs.linear_units.

Understanding pyproj CRS Summary

Let’s break down the pieces of the pyproj CRS summary. The summary contains all of the individual CRS elements that Python or another GIS might need, separated into distinct sections: name, axis info, area of use, coordinate operation, and datum.

OUTPUT

<Projected CRS: EPSG:32635>

Name: WGS 84 / UTM zone 35N

Axis Info [cartesian]:

- E[east]: Easting (metre)

- N[north]: Northing (metre)

Area of Use:

- name: Between 24°E and 30°E, northern hemisphere between equator and 84°N, onshore and offshore. Belarus. Bulgaria. Central African Republic. Democratic Republic of the Congo (Zaire). Egypt. Estonia. Finland. Greece. Latvia. Lesotho. Libya. Lithuania. Moldova. Norway. Poland. Romania. Russian Federation. Sudan. Svalbard. Türkiye (Turkey). Uganda. Ukraine.

- bounds: (24.0, 0.0, 30.0, 84.0)

Coordinate Operation:

- name: UTM zone 35N

- method: Transverse Mercator

Datum: World Geodetic System 1984 ensemble

- Ellipsoid: WGS 84

- Prime Meridian: Greenwich

- Name: the projection is UTM zone 35N (UTM has 60 zones, each 6 degrees of longitude in width). The underlying datum is WGS84.

- Axis Info: the CRS shows a Cartesian system with two axes, easting and northing, in meter units.

- Area of Use: the projection is used for a particular range of longitudes, 24°E to 30°E, in the northern hemisphere (0.0°N to 84.0°N).

- Coordinate Operation: the operation to project the coordinates (if it is projected) onto a cartesian (x, y) plane. Transverse Mercator is accurate for areas with longitudinal widths of a few degrees, hence the distinct UTM zones.

- Datum: details about the datum, or the reference point for coordinates. WGS 84 and NAD 1983 are common datums. NAD 1983 is planned to be replaced by new reference frames (originally scheduled for 2022).
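The zone number follows directly from the 6-degree rule. Below is a plain-Python sketch of the standard formula, ignoring the special zones around Norway and Svalbard; the longitude of Rhodes is taken as roughly 28°E (an approximate value assumed here).

```python
import math


def utm_zone(lon_deg: float) -> int:
    """Standard UTM zone number for a longitude in degrees (no Norway/Svalbard exceptions)."""
    return int(math.floor((lon_deg + 180.0) / 6.0)) % 60 + 1


def zone_bounds(zone: int) -> tuple:
    """Western and eastern longitude limits of a UTM zone, in degrees."""
    west = -180.0 + 6.0 * (zone - 1)
    return west, west + 6.0


zone = utm_zone(28.0)           # approximate longitude of Rhodes
print(zone, zone_bounds(zone))  # 35 (24.0, 30.0), matching the area of use above
```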

Note that the zone is unique to the UTM projection. Not all CRSs will have a zone. Below is a simplified view of US UTM zones.

Calculate Raster Statistics

It is useful to know the minimum or maximum values of a raster dataset. We can compute these and other descriptive statistics with min, max, mean, and std.

PYTHON

print(rhodes_red_80.min())

print(rhodes_red_80.max())

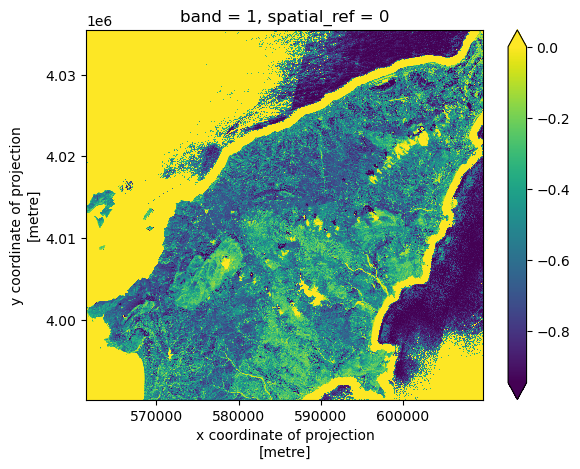

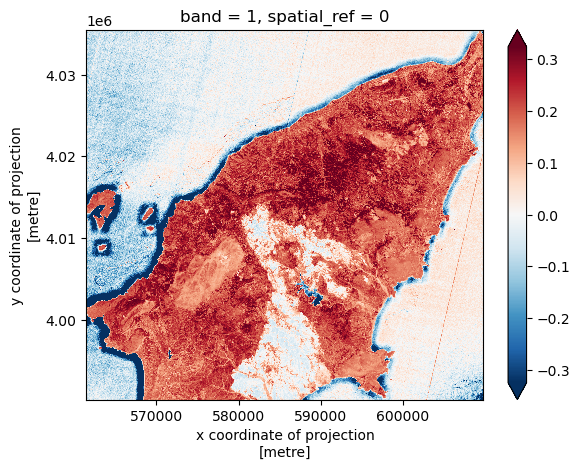

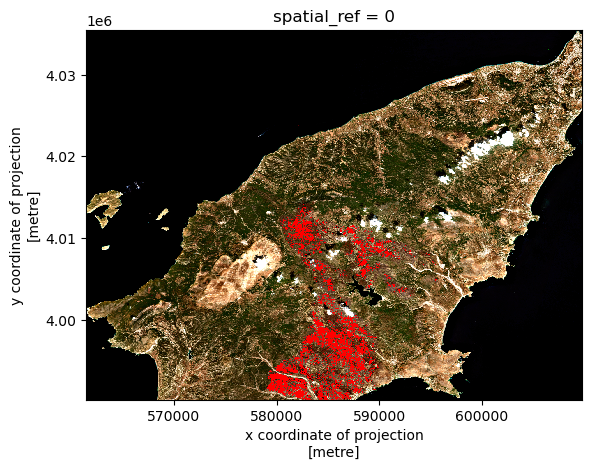

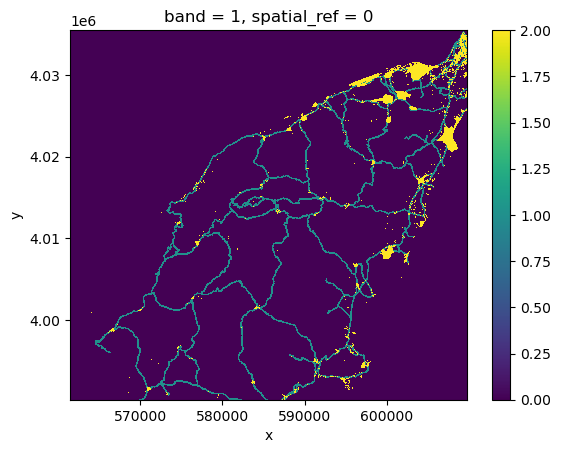

print(rhodes_red_80.mean())